Sensor Fusion Magic

If you're designing a humanoid robot, you need to understand why advanced sensor fusion is the secret sauce for making it move, sense, and interact like a pro.

By Wei-Li Cheng

Let’s start with the basics: sensor fusion. It’s the process of combining data from multiple sensors to create a more accurate, comprehensive understanding of the environment. Think of it as the robot’s way of seeing the world in HD instead of grainy, pixelated footage. But why does this matter for humanoid robots? Well, humanoid robots are designed to mimic human motion and behavior, and humans rely on a complex network of senses to move and interact with the world. So, if you want your robot to move like a human, it needs to process sensory information in a similar way.

Here’s where things get interesting. A single sensor, like a camera, can give your robot visual data, but it’s limited. On its own, a single camera can’t directly measure how far away an object is, and it can only estimate an object’s speed indirectly by comparing frames. Now, add in a depth sensor, and suddenly your robot can gauge distance. Throw in accelerometers, gyroscopes, and force sensors, and now your robot can track its own movement and balance, and even pick up clues about the surface it’s walking on. This is the magic of sensor fusion: it allows the robot to combine all these data streams into one cohesive picture of its surroundings and of its own body.
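To make the accelerometer-plus-gyroscope idea concrete, here is a minimal sketch of a complementary filter, one of the simplest fusion techniques (not necessarily what any particular robot uses). It estimates the robot's forward tilt (pitch) by blending the gyroscope's integrated rotation rate, which is smooth but drifts over time, with the tilt implied by gravity in the accelerometer reading, which is noisy but doesn't drift. The function names, the axis convention in `accel_pitch`, and the blending factor `alpha = 0.98` are illustrative assumptions.

```python
import math

def accel_pitch(ax: float, az: float) -> float:
    """Pitch (rad) implied by gravity; assumes x points forward and z up (hypothetical convention)."""
    return math.atan2(ax, az)

def complementary_filter(pitch: float, gyro_rate: float, ax: float, az: float,
                         dt: float, alpha: float = 0.98) -> float:
    """One update step: mostly trust the integrated gyro, nudged toward the accelerometer angle."""
    gyro_estimate = pitch + gyro_rate * dt  # smooth, but accumulates drift
    # The small accelerometer share keeps pulling the estimate back toward gravity's answer.
    return alpha * gyro_estimate + (1 - alpha) * accel_pitch(ax, az)

# Hypothetical scenario: robot held still at 0.1 rad tilt, gyro with a 0.05 rad/s bias, 100 Hz updates.
true_pitch = 0.1
ax, az = 9.81 * math.sin(true_pitch), 9.81 * math.cos(true_pitch)
gyro_bias = 0.05
pitch = 0.0      # fused estimate
gyro_only = 0.0  # naive gyro integration, for comparison
for _ in range(1000):  # 10 simulated seconds
    pitch = complementary_filter(pitch, gyro_bias, ax, az, dt=0.01)
    gyro_only += gyro_bias * 0.01
```

After ten simulated seconds the fused estimate settles at roughly 0.12 rad, a small constant offset from the true 0.1 rad caused by the gyro bias, while pure gyro integration has drifted to 0.5 rad and would keep going. A larger `alpha` trusts the gyro more and smooths out accelerometer noise, at the cost of a bigger bias offset.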

Why Sensor Fusion is a Game-Changer

So, why is sensor fusion such a big deal for humanoid robots? For starters, it’s all about precision. When your robot has access to multiple data points, it can make more informed decisions. For example, if your robot is walking through a cluttered room, it needs to know not just where objects are, but also how to navigate around them without tripping. Sensor fusion allows the robot to process visual data, depth information, and balance cues all at once, ensuring it can move smoothly and avoid obstacles.

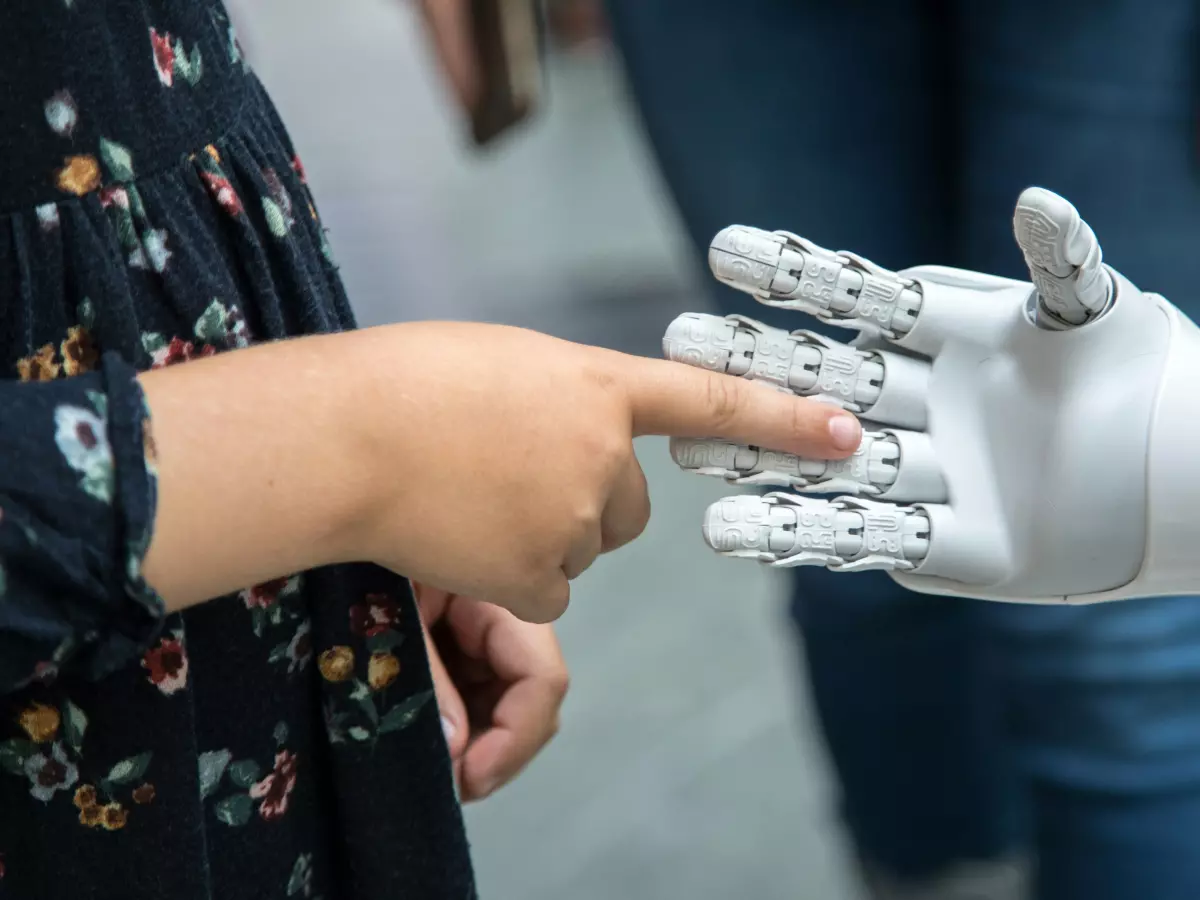

But it’s not just about movement. Sensor fusion also plays a huge role in interaction. Imagine a humanoid robot that’s designed to assist in a hospital. It needs to be able to recognize when a patient is reaching out for help, understand the force needed to gently assist them, and adjust its grip based on the patient’s strength. This level of interaction requires data from multiple sensors—cameras, pressure sensors, and even thermal sensors—to work together seamlessly. Without sensor fusion, the robot would be clumsy, slow, and potentially dangerous.

The Algorithms Behind the Magic

Of course, all this data would be useless without the right algorithms to process it. That’s where sensor fusion algorithms come into play. These algorithms take the raw data from each sensor and combine it in a way that makes sense. For example, if a camera detects an object, but the depth sensor says it’s farther away than it looks, the algorithm reconciles the two readings, typically by trusting each one in proportion to how reliable that sensor is known to be, and gives the robot a more accurate understanding of its environment. It’s like giving the robot a sixth sense, allowing it to make smarter decisions in real time.
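One common way to do this reconciling, shown here as a minimal sketch rather than any specific robot's method, is inverse-variance weighting: each reading is weighted by how precise its sensor is, so the more trustworthy sensor dominates and the fused estimate is more certain than either input alone. The sketch assumes both readings measure the same distance, their errors are independent, and each sensor's noise variance is known; the function name `fuse` and all the numbers are hypothetical.

```python
def fuse(z1: float, var1: float, z2: float, var2: float) -> tuple[float, float]:
    """Inverse-variance weighted average of two independent measurements of one quantity."""
    w1, w2 = 1.0 / var1, 1.0 / var2  # more precise sensors get larger weights
    estimate = (w1 * z1 + w2 * z2) / (w1 + w2)
    variance = 1.0 / (w1 + w2)       # always smaller than both var1 and var2
    return estimate, variance

# Hypothetical readings: camera-based guess 2.0 m (std 0.30 m), depth sensor 2.4 m (std 0.05 m).
distance, variance = fuse(2.0, 0.30 ** 2, 2.4, 0.05 ** 2)
print(f"fused distance: {distance:.2f} m, std: {variance ** 0.5:.3f} m")
```

Because the depth sensor is far more precise in this example, the fused distance lands close to its reading (about 2.39 m), with a standard deviation just under 0.05 m.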

There are several types of sensor fusion algorithms, but one of the most common is the Kalman filter. This algorithm is particularly useful for humanoid robots because it works in two steps: it predicts the next state from a model of how the robot moves, then corrects that prediction with fresh sensor measurements, weighting each by its uncertainty. So, if your robot is walking and suddenly encounters a slippery surface, a Kalman filter can quickly estimate how the robot’s balance is shifting, giving its balance controller the information it needs to adjust the robot’s movements. This predictive capability is crucial for humanoid robots, which need to react to changes in their environment as quickly as humans do.
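A Kalman filter's predict-then-correct loop is easiest to see in one dimension. The sketch below is an illustrative scalar filter for the robot's tilt angle, not a production balance estimator (real humanoid state estimators typically track many coupled states at once): the gyroscope rate drives the prediction step, and an accelerometer-derived angle is the measurement. The class name `ScalarKalman` and the noise values `q` and `r` are assumptions chosen for the example.

```python
from dataclasses import dataclass

@dataclass
class ScalarKalman:
    x: float = 0.0   # estimated tilt angle (rad)
    p: float = 1.0   # variance of that estimate (starts large: little is known)
    q: float = 1e-4  # process noise added each prediction step (assumed)
    r: float = 1e-2  # variance of the measured angle (assumed)

    def predict(self, rate: float, dt: float) -> None:
        """Project the state forward using the gyro rate; uncertainty grows."""
        self.x += rate * dt
        self.p += self.q

    def update(self, z: float) -> None:
        """Blend in a measured angle z; the gain k sets how much z is trusted."""
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k

# Hypothetical run: robot stationary (gyro reads 0) while the accelerometer angle reads 0.1 rad.
kf = ScalarKalman()
for _ in range(200):
    kf.predict(rate=0.0, dt=0.01)
    kf.update(z=0.1)
```

Early on the variance `p` is large, so the gain is close to 1 and the estimate jumps almost all the way to the measurement. As `p` shrinks, the gain settles near 0.1, so each new reading nudges the estimate rather than overwriting it; this is what lets the filter smooth out noisy measurements while still following real changes.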

Challenges in Sensor Fusion

Of course, sensor fusion isn’t without its challenges. One of the biggest issues is data synchronization. Sensors typically run at different rates (an IMU may report hundreds of times per second, while a camera delivers only a few dozen frames per second), so their readings rarely line up perfectly. If the camera captures an image at one moment, but the accelerometer records movement a split second later, the robot could end up with conflicting information. To solve this, engineers time-stamp every reading and then align the streams, for example by interpolating the faster sensor’s data to the exact moment each camera frame was captured.
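Here is a minimal sketch of one way to align time-stamped streams, assuming each stream is buffered as strictly increasing timestamps (in seconds) with matching values. It linearly interpolates a fast IMU stream to the timestamp of a slower camera frame. The helper `interpolate_at`, the 200 Hz rate, and the sample values are hypothetical, and real systems often also have to account for clock offsets and latency between sensors, which this sketch ignores.

```python
from bisect import bisect_left

def interpolate_at(times: list[float], values: list[float], t: float) -> float:
    """Linearly interpolate a sensor stream (strictly increasing `times`) at time t."""
    if not times[0] <= t <= times[-1]:
        raise ValueError("t is outside the buffered time range")
    i = bisect_left(times, t)        # first index with times[i] >= t
    if times[i] == t:
        return values[i]             # exact timestamp match
    t0, t1 = times[i - 1], times[i]  # the two samples bracketing t
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

# Hypothetical data: gyro samples every 5 ms (200 Hz) over 0.1 s, and one camera frame timestamp.
imu_times = [i * 0.005 for i in range(21)]
imu_rates = [0.5 * t for t in imu_times]  # a steadily increasing rotation rate (rad/s)
frame_time = 0.0333
rate_at_frame = interpolate_at(imu_times, imu_rates, frame_time)
```

Linear interpolation assumes the signal changes smoothly between the two bracketing samples, which is usually reasonable when the faster sensor reports many times per camera frame.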

Another challenge is data overload. With so many sensors collecting information simultaneously, the robot’s processor can get overwhelmed. This is where efficient algorithms and powerful processors come into play. Engineers are constantly working to optimize these systems so that robots can process all this data in real time without lagging or overheating.

The Future of Humanoid Robots and Sensor Fusion

So, what’s next for humanoid robots and sensor fusion? As sensors become more advanced and algorithms more efficient, we can expect humanoid robots to become even more lifelike. Imagine a robot that can not only walk and talk like a human but also understand subtle social cues, like when someone is uncomfortable or when it’s time to step back. This level of interaction will only be possible with the continued advancement of sensor fusion technologies.

In the future, we might even see humanoid robots with adaptive sensor fusion, where the robot can prioritize certain sensors based on the task at hand. For example, if the robot is performing a delicate surgery, it might rely more on pressure and force sensors, while in a social setting, it might prioritize facial recognition and audio sensors. The possibilities are endless, and sensor fusion will be at the heart of it all.

So, if you’re working on a humanoid robot, don’t underestimate the power of sensor fusion. It’s the key to creating robots that can move, interact, and adapt like humans. And who knows? In a few years, we might be living in a world where humanoid robots are as common as smartphones, all thanks to the magic of sensor fusion.