Sensor Placement Dilemma

Humanoid robots are still clumsy, and the problem might be where we’re putting their sensors.

By Wei-Li Cheng

For all the hype around humanoid robots, they still trip over things, miss handshakes, and generally struggle to move like humans. Sure, they’ve come a long way, but there’s one fundamental issue that’s often overlooked: sensor placement. We’re so focused on cramming them with advanced algorithms and AI that we forget the importance of where those sensors are actually located. And that, my friends, could be the Achilles' heel of humanoid robots.

Let’s break it down. Sensors are the eyes, ears, and skin of a humanoid robot. They gather data from the environment, which the robot’s motion control algorithms then process to make decisions. But here’s the catch: if those sensors aren’t placed in the right spots, all the fancy algorithms in the world won’t help. Imagine trying to drive a car with a rearview mirror mounted on the floor. Yeah, not ideal.

Why Sensor Placement Matters

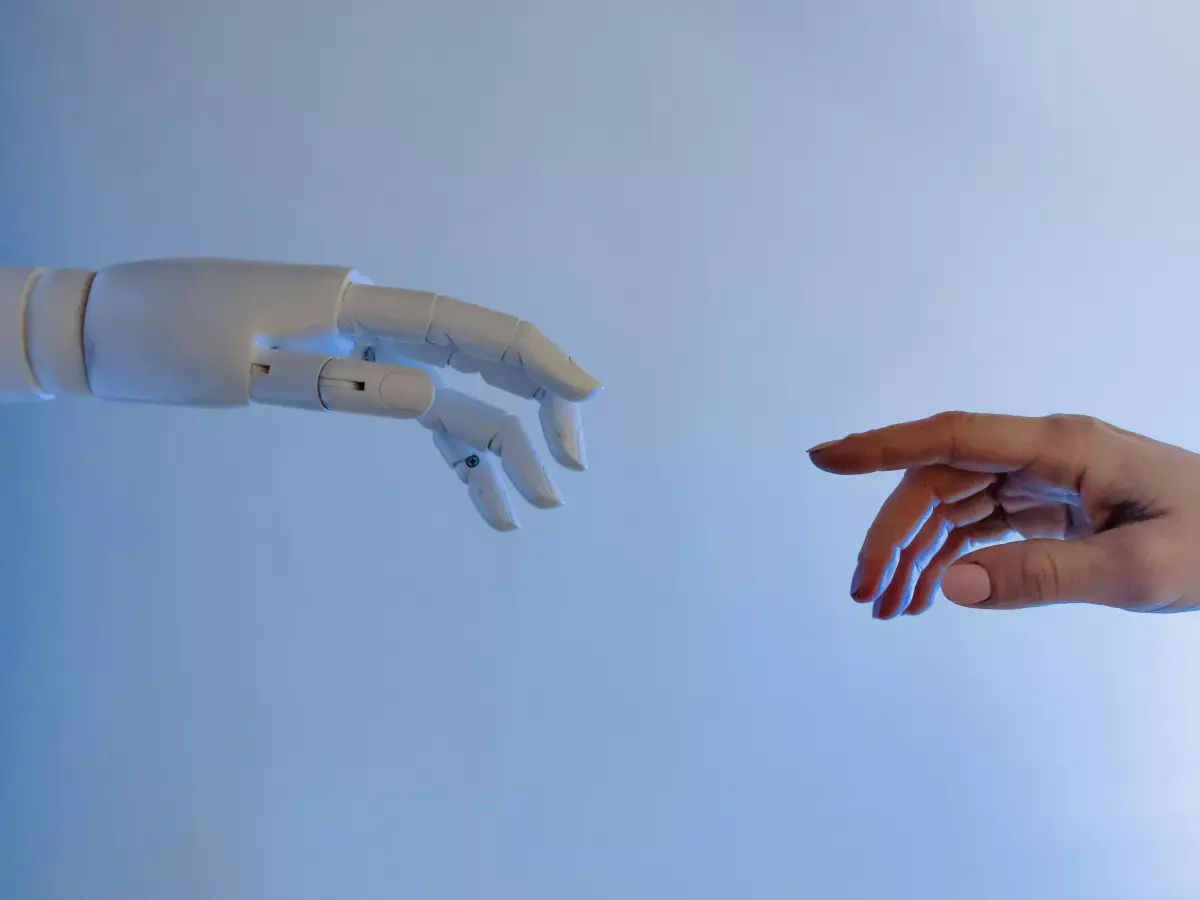

Think about it: humans have evolved over millions of years to have sensors (our eyes, ears, skin, etc.) in very specific locations. Our eyes are at the front of our heads, giving us overlapping fields of view and good depth perception. Our ears are on the sides, helping us detect sound direction. Our skin is everywhere, providing tactile feedback. Now, imagine if our eyes were on our elbows. We’d be bumping into things constantly. The same principle applies to humanoid robots.

When sensors are poorly placed, the robot’s ability to interact with its environment is compromised. For example, if a robot’s vision sensors are too low, it might not be able to detect obstacles at head height. If tactile sensors are only on its hands, it won’t be able to feel when it bumps into something with its torso. This can lead to clumsy movements, poor decision-making, and even dangerous situations.
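To make the "too low" problem concrete, here is a rough geometric sketch. It assumes a simplified camera that looks along a fixed pitch angle with a symmetric vertical field of view; the function name, the mounting heights, and the 60-degree field of view are illustrative assumptions, not figures from any real robot.

```python
import math

def can_see_point(sensor_height_m, pitch_deg, vertical_fov_deg,
                  distance_m, point_height_m):
    """Return True if a point lies inside the sensor's vertical field of view.

    Simplified 2D model (an assumption): the sensor looks along `pitch_deg`
    (0 = horizontal, positive = tilted up) and sees everything within half
    of `vertical_fov_deg` above or below that direction.
    """
    # Elevation angle from the sensor to the point, relative to horizontal.
    elevation_deg = math.degrees(
        math.atan2(point_height_m - sensor_height_m, distance_m)
    )
    return abs(elevation_deg - pitch_deg) <= vertical_fov_deg / 2.0

# Hypothetical setup: an obstacle edge at head height (1.7 m).
# A knee-height camera (0.5 m) sees it from 3 m away...
far_low = can_see_point(0.5, 0.0, 60.0, 3.0, 1.7)    # True
# ...but loses it once the robot is 1 m away, just before impact.
near_low = can_see_point(0.5, 0.0, 60.0, 1.0, 1.7)   # False
# A head-height camera (1.6 m) still sees it at 1 m.
near_high = can_see_point(1.6, 0.0, 60.0, 1.0, 1.7)  # True
```

In this toy model the low camera does fine at a distance and goes blind exactly when it matters, which is the kind of failure better algorithms alone cannot fix.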

The Trade-Off: Precision vs. Flexibility

Here’s where things get tricky. In theory, you could just slap sensors all over a humanoid robot and call it a day, right? Not so fast. More sensors mean more data, and more data means more processing power is needed. Because that processing budget is limited, designers have to choose where to spend it, and that creates a trade-off between precision and flexibility. You want the robot to be precise in its movements, but you also want it to be flexible enough to adapt to different environments.
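A quick back-of-the-envelope calculation shows how fast the data adds up. The sensor counts, sample rates, and sample sizes below are made-up round numbers chosen for illustration only, and the function name is hypothetical.

```python
def data_rate_bytes_per_s(sensors):
    """Sum the raw data rate over a list of sensor groups.

    Each group is a dict with `count`, `rate_hz`, and `bytes_per_sample`.
    """
    return sum(
        s["count"] * s["rate_hz"] * s["bytes_per_sample"] for s in sensors
    )

# Hypothetical sensor suite (illustrative numbers, not from a real robot).
suite = [
    # Two uncompressed 640x480 RGB cameras at 30 frames per second.
    {"count": 2, "rate_hz": 30, "bytes_per_sample": 640 * 480 * 3},
    # One hundred tactile cells sampled at 100 Hz, 2 bytes per reading.
    {"count": 100, "rate_hz": 100, "bytes_per_sample": 2},
]

total = data_rate_bytes_per_s(suite)
print(f"{total / 1e6:.1f} MB/s of raw sensor data")
```

Even in this small example the two cameras dominate the total, so adding a third camera costs far more processing than adding another hundred tactile cells. Where the sensors go decides where the compute goes.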

For example, a robot designed for factory work might have sensors optimized for precision tasks like picking up small objects. But that same robot might struggle in a home environment, where it needs to navigate furniture, pets, and unpredictable human behavior. On the flip side, a robot designed for home use might be great at avoiding obstacles but lack the precision needed for delicate tasks.

Motion Control Algorithms: The Unsung Heroes

Of course, sensor placement is only half the battle. Once the sensors gather data, the robot’s motion control algorithms have to make sense of it all. These algorithms are responsible for turning raw sensor data into actionable movements. But here’s the thing: even the best algorithms can’t compensate for bad sensor placement.

Let’s say a robot’s vision sensors are mounted too low, and it can’t see an obstacle in its path. The motion control algorithm might tell the robot to keep walking forward, completely unaware of the obstacle. The result? A faceplant. On the other hand, if the sensors are well-placed, the algorithm can make smarter decisions, like stopping or stepping around the obstacle.
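The scenario above can be sketched as a toy decision rule. This is a deliberately minimal stand-in for a real motion controller; the function name, the action labels, and the distance thresholds are all assumptions made for illustration.

```python
from typing import Optional

def choose_action(obstacle_distance_m: Optional[float],
                  stop_distance_m: float = 0.5,
                  avoid_distance_m: float = 1.5) -> str:
    """Pick a walking action from the nearest detected obstacle distance.

    `None` means the sensors reported no obstacle, which is also what the
    controller receives when an obstacle exists but is outside sensor view.
    """
    if obstacle_distance_m is None:
        return "walk_forward"
    if obstacle_distance_m <= stop_distance_m:
        return "stop"
    if obstacle_distance_m <= avoid_distance_m:
        return "step_around"
    return "walk_forward"

# Well-placed sensor: the obstacle 1 m ahead is detected.
seen = choose_action(1.0)      # "step_around"
# Badly placed sensor: same obstacle, but it never shows up in the data.
unseen = choose_action(None)   # "walk_forward"
```

The logic is identical in both calls. The only difference is what the sensors delivered, and the controller has no way to tell "nothing there" from "nothing seen".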

Real-World Applications

So, how does all this play out in the real world? Let’s look at two different types of humanoid robots: one designed for industrial use and one designed for home assistance.

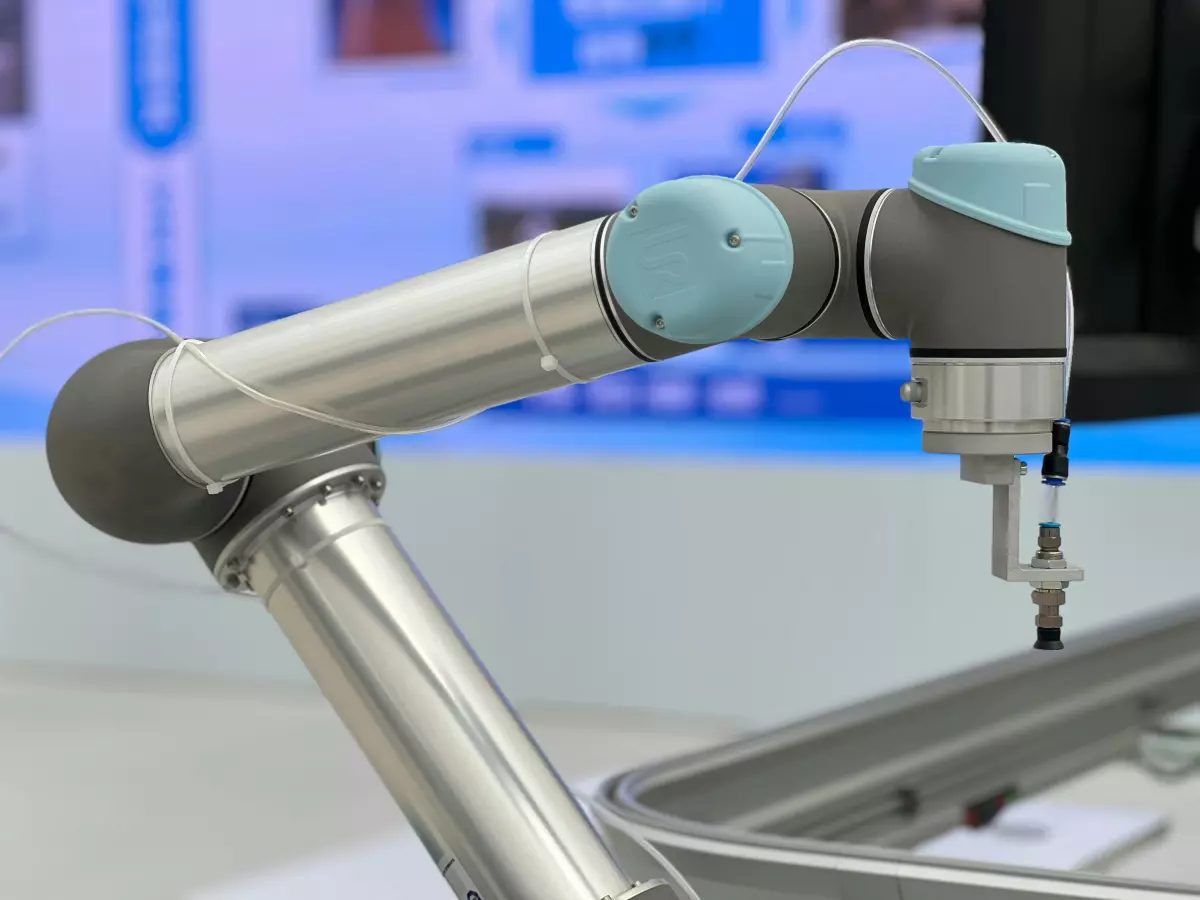

In an industrial setting, precision is key. The robot needs to perform repetitive tasks with high accuracy, like assembling small parts or welding. In this case, sensors are often placed close to the hands and arms, where the work is happening. The robot doesn’t need to worry about navigating a complex environment, so fewer sensors are needed for obstacle detection.

Now, let’s switch gears to a home assistant robot. This robot needs to be flexible enough to navigate a constantly changing environment. It needs sensors all over its body to detect obstacles, people, and pets. But it also needs to be precise enough to perform tasks like picking up objects or opening doors. This is where the trade-off between precision and flexibility becomes crucial.

The Future of Sensor Placement

As humanoid robots become more advanced, we’ll likely see more sophisticated sensor placement strategies. Instead of just slapping sensors wherever there’s space, designers will need to think carefully about where those sensors will be most effective. This could involve mimicking human sensor placement more closely or developing entirely new strategies that take advantage of the robot’s unique capabilities.

One exciting possibility is the use of modular sensor systems. These systems would allow robots to adapt their sensor placement based on the task at hand. For example, a robot could have sensors on its arms for precision tasks and then switch to sensors on its torso for obstacle detection when navigating a crowded room.
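One way to picture such a modular system is as a lookup from task to the sensor groups that should be active. The sketch below is purely hypothetical: the profile names, body locations, and function are invented for illustration and do not describe any existing robot platform.

```python
# Hypothetical mapping from task type to the body locations whose
# sensors should be active for that task.
SENSOR_PROFILES = {
    "precision": {"arm", "hand"},
    "navigation": {"torso", "head"},
}

def active_sensors(task: str, installed: set) -> set:
    """Return the installed sensor locations that should be active.

    Unknown tasks fall back to enabling everything that is installed,
    a conservative default chosen for this sketch.
    """
    wanted = SENSOR_PROFILES.get(task)
    if wanted is None:
        return set(installed)
    return wanted & installed

installed = {"arm", "hand", "torso"}
print(sorted(active_sensors("precision", installed)))   # ['arm', 'hand']
print(sorted(active_sensors("navigation", installed)))  # ['torso']
```

Switching profiles this way would also help with the data problem, since only the sensors relevant to the current task need to be processed.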

In the end, the key to unlocking the full potential of humanoid robots might not be in more advanced algorithms or AI but in something as simple as where we put their sensors.