Staying Upright

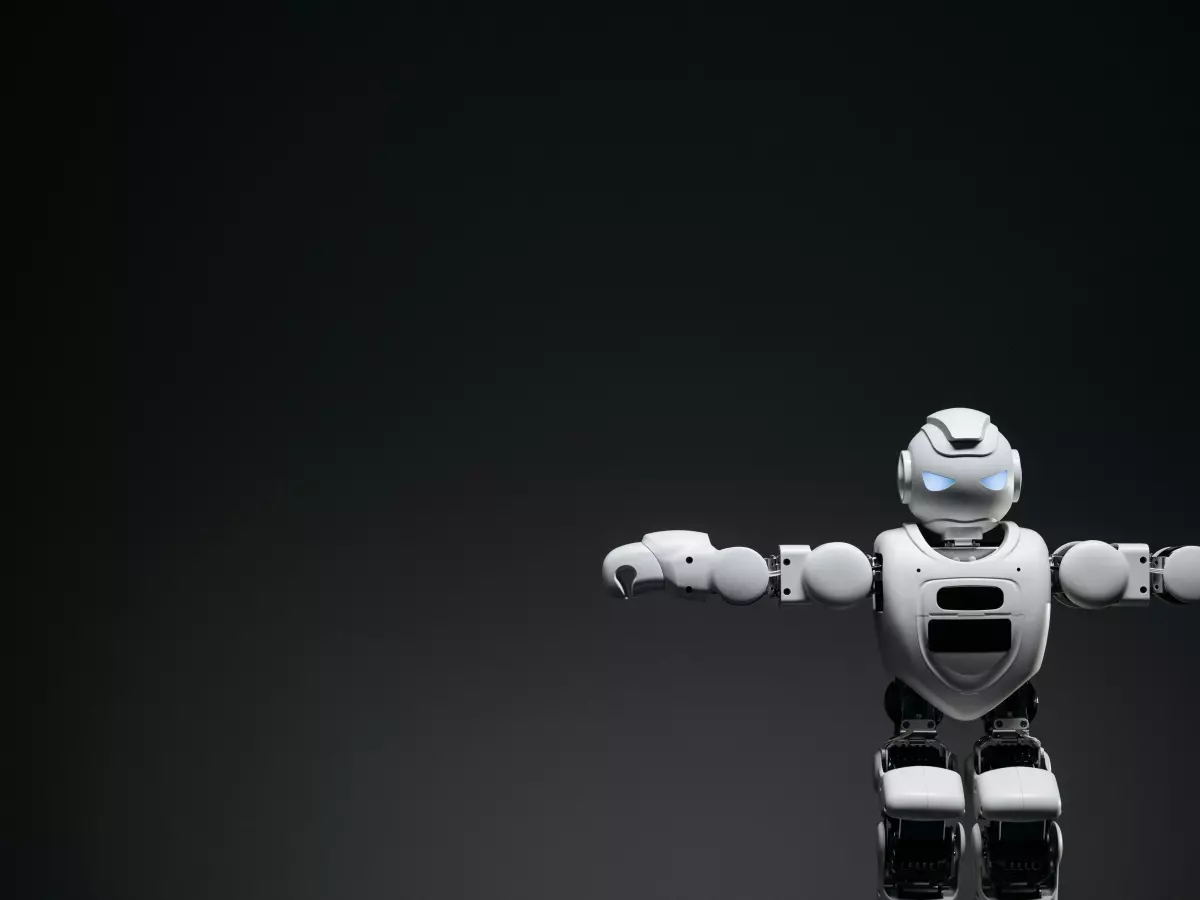

"Wait, how does it not fall over?" That's the question everyone asks when they see a humanoid robot take its first steps.

By Alex Rivera

It’s a fair question. After all, walking upright is something humans have been perfecting for millions of years, and we still trip over our own feet. So, how do humanoid robots—machines with no biological muscles, bones, or inner ears—manage to stay upright, let alone walk? The answer lies in a mix of clever design, advanced sensor integration, and some mind-bending algorithms that control balance.

Let’s start with a simple observation: most of us take thousands of steps every day, and we rarely think about the complex balancing act happening with each one. For humanoid robots, every step is a calculated risk, and balance control is the unsung hero that keeps them from face-planting.

Humanoid robots are designed to mimic human motion, but they don’t have the luxury of a brain that can process balance instinctively. Instead, they rely on a combination of sensors, actuators, and algorithms to maintain stability. The real magic happens when these elements work together to create something called dynamic balance control.

Dynamic Balance: The Robot's Secret Weapon

Dynamic balance is the ability to maintain stability while in motion. For humans, this comes naturally. For robots, it’s a high-wire act. Imagine walking on a tightrope while juggling—every small movement has to be countered by another to keep from falling. That’s essentially what humanoid robots are doing with every step.

To achieve this, robots use a combination of sensors like gyroscopes, accelerometers, and force sensors. Gyroscopes measure how quickly the robot is rotating, while accelerometers measure linear acceleration, including the steady pull of gravity; combined, the two let the robot estimate its orientation in space. Force sensors, usually placed in the robot’s feet, detect how much force is being applied to the ground and where that load is centered. Together, these sensors provide real-time data that the robot’s control algorithms use to make split-second adjustments.
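How do gyroscope and accelerometer readings become an orientation estimate? One common, simple approach is a complementary filter (the article doesn’t name a specific method, and many robots use Kalman filters instead). The Python sketch below is a minimal version for a single tilt axis. It assumes sensor axes arranged so that a stationary robot tilted by θ reads ax = g·sin θ and az = g·cos θ; the function names and the 0.98 blend factor are illustrative choices, not taken from any particular robot.

```python
import math

def accel_tilt(ax, az):
    """Tilt angle (rad) implied by the direction of gravity in accelerometer data.
    Assumes a stationary robot tilted by theta reads ax = g*sin(theta), az = g*cos(theta)."""
    return math.atan2(ax, az)

def complementary_filter(angle, gyro_rate, ax, az, dt, alpha=0.98):
    """One update of a complementary filter for a single tilt axis.

    angle     -- previous tilt estimate (rad)
    gyro_rate -- gyroscope angular rate about the same axis (rad/s)
    ax, az    -- accelerometer readings (m/s^2)
    dt        -- time since the last update (s)
    alpha     -- how much to trust the gyro over the accelerometer (illustrative value)
    """
    # Gyro: integrating the rate is smooth and fast, but any bias makes it drift.
    gyro_angle = angle + gyro_rate * dt
    # Accelerometer: gravity gives an absolute reference, but it is noisy.
    acc_angle = accel_tilt(ax, az)
    # Blend: trust the gyro over short times, let the accelerometer correct drift.
    return alpha * gyro_angle + (1.0 - alpha) * acc_angle
```

Called at every sensor sample (say every 10 ms), the estimate settles on the tilt the accelerometer sees, while a small constant gyro bias only shifts it by a bounded amount instead of letting it drift away indefinitely, as pure integration would.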

But here’s the kicker: it’s not just about reacting to balance shifts. The best humanoid robots can predict when they’re about to lose balance and adjust before it happens. This is where predictive algorithms come into play. These algorithms use data from the robot’s sensors to forecast future movements and adjust the robot’s posture accordingly. It’s like having a sixth sense for balance.

Algorithms That Think Ahead

At the heart of balance control are motion control algorithms. These algorithms are designed to process the flood of data coming from the robot’s sensors and make real-time decisions about how to move. But not all algorithms are created equal. The most advanced ones use something called model predictive control (MPC).

MPC uses a mathematical model of the robot to predict its future states from current sensor data. In the case of humanoid robots, it predicts how the robot’s body will move over the next short stretch of time in response to various forces, like gravity or an uneven surface. The algorithm then calculates the best sequence of adjustments to the robot’s joints and actuators, applies only the first one, and repeats the whole calculation at the next control step with fresh data. It’s like playing chess with physics: always thinking a few moves ahead.
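The predict, optimize, apply-the-first-step loop can be sketched in a few lines of Python. This is a deliberately tiny illustration under stated assumptions: the robot’s forward/backward lean is modeled as a toy inverted pendulum, the corrective input `u` is a hypothetical angular acceleration from the ankles chosen from a handful of candidate values, and the cost weights are arbitrary. None of these names or numbers come from a real robot.

```python
import itertools

G = 9.81    # gravity, m/s^2
L = 1.0     # hypothetical center-of-mass height / pendulum length, m
DT = 0.05   # hypothetical control period, s

def predict(theta, omega, u):
    """One step of a toy inverted-pendulum lean model (semi-implicit Euler).
    Gravity tips the body further over; u is a hypothetical corrective
    angular acceleration supplied by the ankles (rad/s^2)."""
    alpha = (G / L) * theta + u
    omega_next = omega + alpha * DT
    theta_next = theta + omega_next * DT
    return theta_next, omega_next

def mpc_step(theta, omega, horizon=3, candidates=(-20.0, -10.0, 0.0, 10.0, 20.0)):
    """Brute-force MPC: try every input sequence over the horizon, score the
    predicted trajectory, and return only the first input of the best one."""
    best_cost, best_first = float("inf"), 0.0
    for seq in itertools.product(candidates, repeat=horizon):
        th, om, cost = theta, omega, 0.0
        for u in seq:
            th, om = predict(th, om, u)
            # Penalize lean heavily, rotation speed moderately, effort lightly.
            cost += 100.0 * th**2 + 1.0 * om**2 + 0.001 * u**2
        if cost < best_cost:
            best_cost, best_first = cost, seq[0]
    return best_first

# Receding horizon: re-plan every control period using the latest state.
theta, omega = 0.1, 0.0  # start leaning 0.1 rad forward
for _ in range(40):
    u = mpc_step(theta, omega)
    theta, omega = predict(theta, omega, u)  # here the same toy model stands in for the real robot
```

Enumerating every sequence keeps the sketch short but scales badly; practical MPC balance controllers instead solve an optimization problem (often a quadratic program) over much richer models of the robot.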

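Another key player in balance control is the zero moment point (ZMP). Strictly speaking, the ZMP is a stability criterion rather than an algorithm: it is the point on the ground where the combined effect of gravity and the robot’s own motion produces no tipping moment. For a flat foot on level ground, it coincides with the center of pressure measured by the foot sensors. If the ZMP would need to lie outside the robot’s support polygon (the area under and between the feet touching the ground), the foot starts to roll over its edge and the robot tips over. ZMP-based controllers constantly monitor this point and adjust the robot’s posture to keep it within the safe zone.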
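To make the ZMP idea concrete, here is a minimal one-dimensional Python sketch along the walking direction. It assumes the simplified “cart-table” model, in which the robot’s center of mass (CoM) moves at a constant height z, so the ZMP is p = x − (z/g)·a, where x is the CoM position and a its horizontal acceleration. It also estimates the center of pressure from a few hypothetical load cells in the sole. The function names, sensor layout and numbers are illustrative only.

```python
G = 9.81  # gravity, m/s^2

def zmp_cart_table(com_x, com_acc_x, com_height):
    """ZMP position along the walking direction (m) for the cart-table model,
    assuming the CoM stays at a constant height above flat ground."""
    return com_x - (com_height / G) * com_acc_x

def center_of_pressure(readings):
    """Force-weighted average of sensor positions; readings are (x_m, force_N)
    pairs from hypothetical load cells in the sole of the foot."""
    total = sum(force for _, force in readings)
    if total <= 0:
        raise ValueError("foot is not loaded")
    return sum(x * force for x, force in readings) / total

def inside_support(zmp_x, heel_x, toe_x):
    """True if the ZMP lies within the foot's heel-to-toe support interval."""
    return heel_x <= zmp_x <= toe_x

# Example: CoM 2 cm ahead of the ankle, 0.8 m high, accelerating forward hard.
p = zmp_cart_table(com_x=0.02, com_acc_x=1.5, com_height=0.8)
print(p, inside_support(p, heel_x=-0.05, toe_x=0.15))
```

The minus sign captures a useful intuition: pushing the body forward quickly shifts the ZMP backward, so an overly aggressive lunge can tip the robot over its heels even though its CoM is well inside the foot.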

Why Balance Control is So Hard

So, if we’ve got all these fancy sensors and algorithms, why is balance control still such a challenge for humanoid robots? The answer lies in the complexity of human motion. Humans don’t just walk in straight lines on flat surfaces. We navigate stairs, uneven terrain, and sudden obstacles—all while maintaining balance.

For robots, replicating this level of adaptability is incredibly difficult. Every surface presents a new challenge, and the robot’s sensors and algorithms have to be finely tuned to handle it. Even small changes in surface texture or incline can throw off a robot’s balance if it’s not prepared. That’s why researchers are constantly working to improve sensor accuracy and algorithm efficiency.

Another challenge is the sheer amount of data that needs to be processed in real-time. The robot’s brain (usually a powerful onboard computer) has to process data from multiple sensors, run complex algorithms, and send commands to the actuators—all within milliseconds. Any delay could result in a fall.

The Future of Balance Control

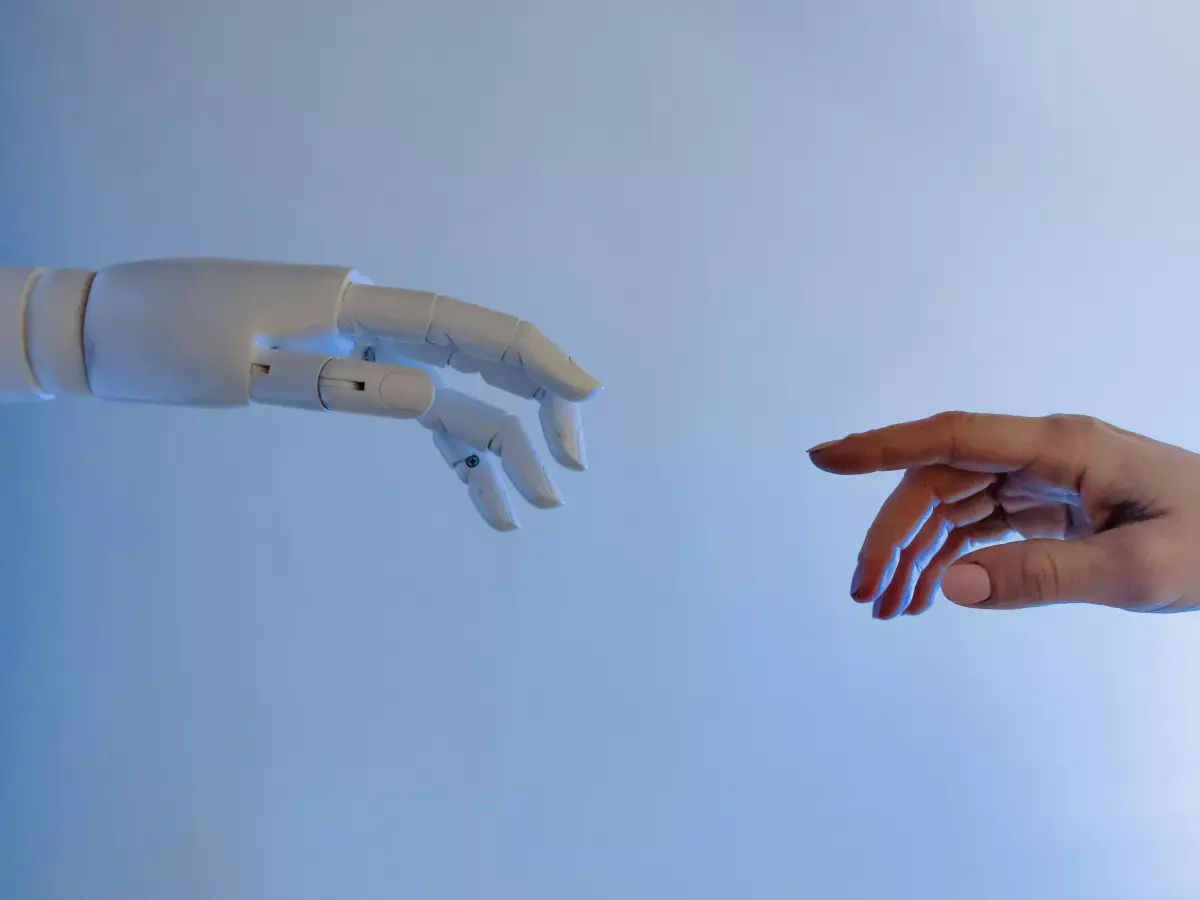

Despite the challenges, humanoid robots are getting better at balance control every year. Advances in AI and machine learning are helping robots learn from their mistakes and improve their balance over time. In the future, we may see robots that can navigate complex environments with the same ease as humans.

One promising area of research is the use of deep reinforcement learning, a type of AI that allows robots to learn from trial and error. By simulating thousands of walking scenarios in a virtual environment, robots can practice balancing, and falling, without any risk of damaging real hardware. Once a controller performs well in the virtual world, it can be transferred to the physical robot, although differences between simulation and reality mean it often still needs some adjustment.
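Deep reinforcement learning is far too large to show here, but the core idea of improving a controller through simulated trial and error can be sketched. The Python example below swaps in something much simpler than deep RL: random search over the two gains of a hypothetical feedback controller on a toy pendulum model of body lean, keeping whichever gains keep the simulated “robot” upright longest. The model, the gain ranges and the 0.5 rad fall threshold are all illustrative assumptions.

```python
import random

G_OVER_L = 9.81  # toy pendulum: gravity / CoM height (hypothetical 1 m height)
DT = 0.05        # simulation step, s
MAX_STEPS = 200  # episode length cap (10 simulated seconds)

def episode_length(kp, kd, theta0=0.05):
    """Simulate the toy lean model under the feedback law u = -kp*theta - kd*omega
    and count how many steps pass before |theta| exceeds 0.5 rad (a 'fall')."""
    theta, omega = theta0, 0.0
    for t in range(MAX_STEPS):
        u = -kp * theta - kd * omega
        omega += (G_OVER_L * theta + u) * DT
        theta += omega * DT
        if abs(theta) > 0.5:
            return t
    return MAX_STEPS

def random_search(trials=200, seed=0):
    """Trial and error in simulation: sample random gains, keep the best so far."""
    rng = random.Random(seed)
    best = (0.0, 0.0)                   # start from 'no control at all'
    best_score = episode_length(*best)
    for _ in range(trials):
        cand = (rng.uniform(0.0, 50.0), rng.uniform(0.0, 10.0))
        score = episode_length(*cand)
        if score > best_score:          # only keep strictly better attempts
            best, best_score = cand, score
    return best, best_score

gains, steps_upright = random_search()
```

Deep RL replaces the two hand-picked gains with a neural network that maps sensor readings to joint commands, and replaces blind sampling with learning algorithms that use the outcome of each attempt to decide what to try next, but the loop of “act in simulation, score the result, keep what works” is the same.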

So, the next time you see a humanoid robot walking around without falling over, remember that there’s a lot more going on behind the scenes than meets the eye. Balance control is one of the most complex challenges in robotics, but thanks to advances in sensors, algorithms, and AI, humanoid robots are getting closer to mastering it.