Coordination Unleashed

Did you know that humanoid robots can now reportedly achieve up to 95% accuracy in coordinated tasks like walking, grasping, and even dancing? Robots are getting closer to moving like us, and the secret sauce lies in how they synchronize their sensors and motion control algorithms.

By Nina Schmidt

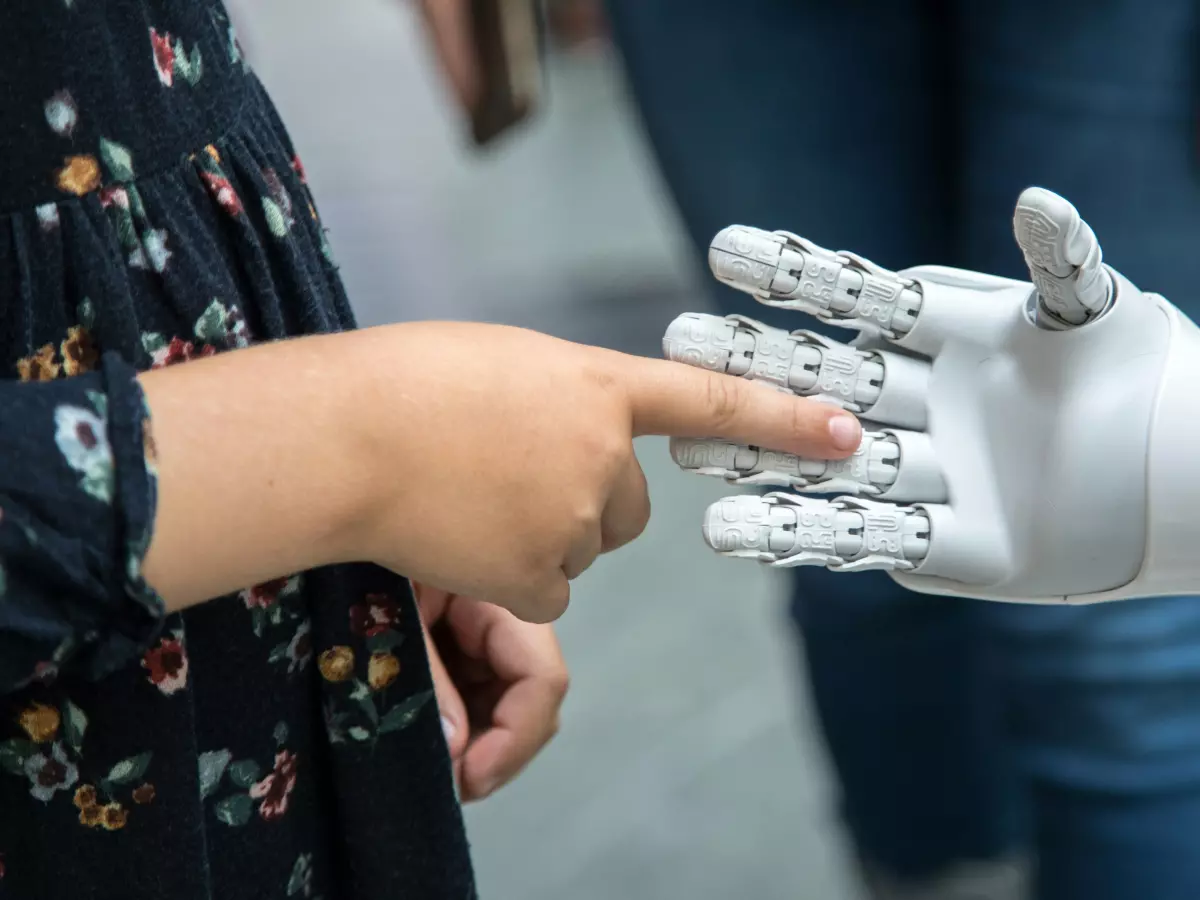

Humanoid robots are no longer just clunky machines that stumble around like toddlers learning to walk. Thanks to advances in sensor integration and motion control algorithms, these robots are mastering the art of coordination. But how exactly do they do it? And why is coordination such a big deal in the world of robotics?

Let’s break it down. Coordination in humanoid robots refers to the seamless interaction between various sensors and actuators (the robot's 'muscles') to perform complex tasks. Think about it: when you reach for a cup of coffee, your brain processes information from your eyes, muscles, and joints to ensure you don’t knock it over. Similarly, robots need to process data from multiple sensors—like cameras, gyroscopes, and pressure sensors—and then execute precise movements through their actuators. Easy, right? Well, not quite.

Why Coordination Matters

In the world of robotics, coordination is everything. Without it, humanoid robots would be no better than glorified remote-controlled toys. Coordination allows robots to perform tasks that require precision, balance, and timing—whether it’s walking up a flight of stairs or playing a game of ping-pong. But achieving this level of coordination is no small feat.

One of the biggest challenges in humanoid robot design is synchronizing the data from various sensors in real time. Imagine trying to juggle while blindfolded, with someone shouting instructions at you in a foreign language. That’s what it’s like for a robot trying to process conflicting data from multiple sensors. If the readings aren’t aligned in time and calibrated against one another, the robot’s movements will be jerky and imprecise.

To solve this, engineers use algorithms that fuse data from different sensors and translate it into smooth, coordinated movements. Some of these algorithms are loosely modeled on the way the human brain processes sensory information, allowing robots to react to their environment in real time.
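To make the synchronization problem concrete, here is a minimal sketch of one small piece of it: resampling two sensor streams that tick at different rates onto a shared control clock using linear interpolation. The sample rates, the readings, and the helper names `interpolate` and `align_streams` are assumptions invented for this illustration, not taken from any particular robot.

```python
from bisect import bisect_left

def interpolate(timestamps, values, t):
    """Linearly interpolate a sensor reading at time t (clamped at the ends)."""
    if t <= timestamps[0]:
        return values[0]
    if t >= timestamps[-1]:
        return values[-1]
    i = bisect_left(timestamps, t)          # first index with timestamps[i] >= t
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def align_streams(clock, streams):
    """Resample every (timestamps, values) stream onto the shared clock."""
    return {name: [interpolate(ts, vs, t) for t in clock]
            for name, (ts, vs) in streams.items()}

# Hypothetical data: a gyroscope at 100 Hz and a pressure sensor at 40 Hz.
gyro = ([0.00, 0.01, 0.02, 0.03, 0.04], [0.0, 1.0, 2.0, 3.0, 4.0])
pressure = ([0.000, 0.025, 0.050], [10.0, 20.0, 30.0])

clock = [0.00, 0.02, 0.04]                  # shared 50 Hz control clock
aligned = align_streams(clock, {"gyro": gyro, "pressure": pressure})
print(aligned)
```

Once every stream is expressed on the same clock, each control step sees readings that describe the same instant, which is the precondition for fusing them.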

The Role of Sensor Fusion

Sensor fusion is the magic that makes coordination possible. By combining data from multiple sensors—such as cameras, accelerometers, and gyroscopes—robots can create a more accurate picture of their surroundings. This allows them to make better decisions about how to move and interact with objects.

For example, a humanoid robot might use a camera to identify an object, while its gyroscope helps it maintain balance as it reaches for the object. Meanwhile, pressure sensors in its fingers ensure it grasps the object with the right amount of force. All of this happens in a fraction of a second, thanks to sensor fusion.
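One simple and widely used way to fuse a gyroscope with an accelerometer for balance is a complementary filter. The sketch below estimates a tilt angle with it. The blend factor `alpha`, the time step, the gyroscope bias, and the scenario itself are illustrative assumptions, and a real robot would likely use something more elaborate.

```python
import math

def accel_tilt(ax, az):
    """Tilt angle (radians) implied by gravity as seen by the accelerometer."""
    return math.atan2(ax, az)

def complementary_filter(angle, gyro_rate, ax, az, dt, alpha=0.98):
    """Blend the integrated gyro rate (smooth, but drifts) with the
    accelerometer tilt (noisy, but free of drift)."""
    gyro_angle = angle + gyro_rate * dt
    return alpha * gyro_angle + (1.0 - alpha) * accel_tilt(ax, az)

# Hypothetical scenario: the robot is held at a steady 0.1 rad tilt while
# the gyroscope reports a constant bias of 0.05 rad/s (it should read 0).
true_tilt = 0.1
ax, az = math.sin(true_tilt), math.cos(true_tilt)
dt = 0.01

fused = 0.0
gyro_only = 0.0
for _ in range(2000):                       # 20 seconds of samples
    fused = complementary_filter(fused, 0.05, ax, az, dt)
    gyro_only += 0.05 * dt                  # naive integration keeps drifting

print(f"fused estimate: {fused:.3f} rad, gyro-only: {gyro_only:.3f} rad")
```

The gyro-only estimate drifts further from the truth the longer it runs, while the fused estimate settles close to the real tilt because the accelerometer keeps pulling it back.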

But sensor fusion isn’t just about combining data. It’s also about filtering out noise and inconsistencies. Just as your brain ignores background chatter when you’re focused on a conversation, robots need to filter out irrelevant data to make accurate decisions. This is also where machine learning comes into play, allowing robots to improve their coordination over time by learning from their mistakes.
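Noise rejection does not have to involve machine learning. As a deliberately simple stand-in, the sketch below uses a classical sliding-window median filter to throw out isolated glitches in a stream of readings. The `MedianFilter` class, the window size, and the pressure values are all hypothetical.

```python
from collections import deque
from statistics import median

class MedianFilter:
    """Sliding-window median: suppresses isolated spikes in a sensor stream."""
    def __init__(self, window=5):
        self.samples = deque(maxlen=window)  # oldest sample drops out automatically

    def update(self, reading):
        self.samples.append(reading)
        return median(self.samples)

# Hypothetical fingertip pressure readings (newtons) with two glitches.
raw = [2.0, 2.1, 1.9, 9.5, 2.0, 2.2, 0.0, 2.1, 2.0]
f = MedianFilter(window=5)
filtered = [f.update(r) for r in raw]
print(filtered)
```

The spikes at 9.5 and 0.0 never reach the output, because a single outlier cannot become the middle value of the window.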

Motion Control Algorithms: The Brain Behind the Brawn

Of course, sensors are only half of the equation. The other half is motion control algorithms, which translate sensor data into physical actions. These algorithms act as the robot’s brain, telling its actuators how to move in response to incoming sensor data.

There are several types of motion control algorithms, but the most advanced ones are designed to mimic human motor control. These algorithms take into account factors like speed, force, and trajectory to ensure the robot’s movements are smooth and natural. They also allow robots to adapt to changes in their environment, such as obstacles or uneven surfaces.

For example, if a robot is walking on a slippery surface, its motion control algorithm will adjust its gait to prevent it from slipping. Similarly, if a robot is carrying a heavy object, the algorithm will adjust the robot’s posture to maintain balance.
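To give a feel for how a controller compensates for a load, here is a minimal sketch of a textbook PID loop holding a single joint at a target angle, with and without a constant torque from a payload. The unit-inertia joint model, the gains, and the load value are assumptions chosen for illustration. Real humanoid controllers coordinate many joints at once and are considerably more sophisticated.

```python
class PID:
    """Textbook PID controller; the derivative term uses measured velocity
    to avoid a spike when the target changes."""
    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0

    def update(self, target, angle, velocity, dt):
        error = target - angle
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral - self.kd * velocity

def simulate(load_torque, seconds=5.0, dt=0.001):
    """Unit-inertia joint: angular acceleration = motor torque + load torque."""
    pid = PID(kp=40.0, ki=60.0, kd=12.0)    # hand-picked illustrative gains
    target, angle, velocity = 0.3, 0.0, 0.0
    for _ in range(int(seconds / dt)):
        torque = pid.update(target, angle, velocity, dt)
        velocity += (torque + load_torque) * dt
        angle += velocity * dt
    return angle

unloaded = simulate(load_torque=0.0)
loaded = simulate(load_torque=-2.0)         # hypothetical payload pulling the joint down
print(f"unloaded: {unloaded:.3f} rad, loaded: {loaded:.3f} rad")
```

The integral term is what lets the loaded joint still reach its target: it accumulates the leftover error until the motor torque cancels the payload.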

Looking Ahead: The Future of Humanoid Robot Coordination

As sensor technology and motion control algorithms continue to evolve, humanoid robots will become even more coordinated and capable. In the future, we can expect robots that can perform tasks with the same level of precision and dexterity as humans—if not better.

Imagine a world where robots can perform complex surgeries, assist in disaster relief efforts, or even serve as personal assistants. The possibilities are endless, but it all starts with mastering coordination.

So, the next time you see a humanoid robot walking, dancing, or even playing a musical instrument, remember: it’s not just about the hardware. It’s the intricate coordination between sensors and motion control algorithms that makes it all possible.