Robot Vision

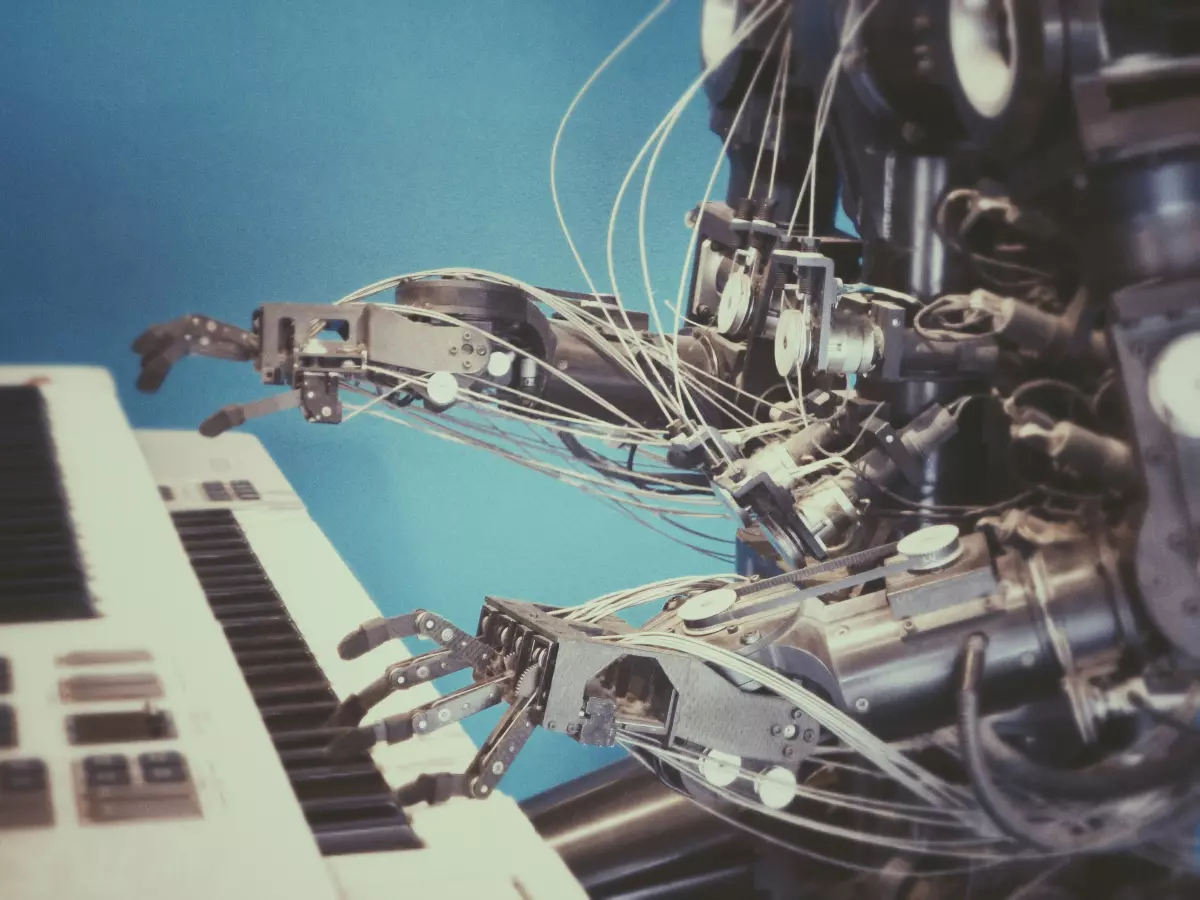

"Wait, so how does it actually 'see' things?" My friend asked, staring at the robot arm moving with precision across the table.

By Sarah Kim

It’s a question I get a lot. People are fascinated by robots, but the idea that these machines can ‘see’ and interact with the world is still mind-blowing to most. And honestly, it should be! Robot vision is one of the most complex and exciting areas of robotics, blending hardware, software, and a dash of artificial intelligence to give machines the ability to perceive their surroundings.

So, how do robots actually 'see'? Let's break it down.

The Basics of Robot Vision

First things first, robots don't ‘see’ the way we do. They don’t have eyes that send signals to a brain. Instead, they rely on a combination of sensors, cameras, and software to interpret their environment. The process is called robot perception, and it's a critical part of making autonomous systems work.

At its core, robot vision involves capturing data from the environment (through cameras or sensors), processing that data, and then making decisions based on what’s been ‘seen.’ But the devil is in the details, and there are a lot of moving parts—literally and figuratively.

Hardware: The Eyes of the Robot

Let’s start with the hardware. The most common ‘eyes’ for robots are cameras, but not just any cameras. Robots use specialized sensors such as stereo vision cameras, LiDAR (Light Detection and Ranging), and infrared sensors to capture a detailed view of their surroundings.

Stereo vision cameras work similarly to human eyes. They capture two images from slightly different positions, and by measuring how far each feature shifts between the two images (the disparity), the robot can calculate depth and distance. Nearby objects shift a lot; distant ones barely move. This is crucial for tasks like object manipulation, where knowing how far away something is can make or break the operation.
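To make that concrete, here is a minimal sketch of the depth calculation, assuming an idealized, rectified pair of pinhole cameras. The function name and the sample numbers are made up for illustration; real stereo pipelines also have to find matching pixels first, which is the hard part.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (idealized pinhole model)."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical setup: 700 px focal length, cameras 12 cm apart.
near = depth_from_disparity(700.0, 0.12, 35.0)  # large shift between images
far = depth_from_disparity(700.0, 0.12, 7.0)    # small shift between images
print(f"near object: {near:.2f} m, far object: {far:.2f} m")
```

Notice that depth is inversely proportional to disparity, so small matching errors matter much more for faraway objects than for close ones.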

LiDAR, on the other hand, uses lasers to measure distances. It sends out laser pulses and measures how long it takes for them to bounce back. Because the speed of light is known, each round-trip time converts directly into a distance, and sweeping the beam across the scene builds up a 3D map of the environment. This is especially useful for navigation in autonomous vehicles or drones.
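The time-of-flight arithmetic is simple enough to sketch in a few lines. This assumes a basic pulsed sensor and ignores real-world details like atmospheric effects and timing jitter; the function name is just for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_time_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A pulse that comes back after 100 nanoseconds hit something roughly 15 m away.
print(f"{lidar_range_m(100e-9):.2f} m")
```

The tiny time scales involved are why LiDAR units need very precise timing electronics.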

Then there are thermal infrared sensors, which detect the heat that objects emit. These are often used in environments where visibility is low, like in search and rescue missions or industrial settings with lots of smoke or dust.

Software: The Brain Behind the Eyes

Once the hardware captures the data, it’s up to the software to make sense of it. This is where things get really interesting. Robots use a combination of algorithms and machine learning models to process the visual data and make decisions.

One of the most common techniques is image processing, where the software analyzes the pixels in an image to identify objects, edges, and patterns. This is often combined with object recognition, where the robot is trained to recognize specific objects (like a cup or a door) based on their shape, size, and color.
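Edge detection is a nice example of what "analyzing the pixels" means in practice. Below is a small sketch using the classic Sobel operator on a toy grayscale image, written in plain Python so it runs anywhere. A real system would use an optimized library instead, and the tiny image here is invented for the demo.

```python
import math


def sobel_edge_magnitude(image):
    """Gradient magnitude of a 2D grayscale image (list of rows) using 3x3 Sobel kernels."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to left-right intensity change
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to top-bottom intensity change
    height, width = len(image), len(image[0])
    edges = []
    # Skip the 1-pixel border, where the 3x3 window would fall off the image.
    for r in range(1, height - 1):
        row = []
        for c in range(1, width - 1):
            gx = sum(kx[i][j] * image[r - 1 + i][c - 1 + j] for i in range(3) for j in range(3))
            gy = sum(ky[i][j] * image[r - 1 + i][c - 1 + j] for i in range(3) for j in range(3))
            row.append(math.hypot(gx, gy))
        edges.append(row)
    return edges


# Toy image: dark on the left (0), bright on the right (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
for row in sobel_edge_magnitude(image):
    print(row)
```

The output is zero in the flat regions and large only where dark meets bright, which is exactly the boundary a robot would want to find.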

But it doesn’t stop there. Robots also need to understand the context of what they’re seeing. For example, a robot vacuum needs to know the difference between a chair leg and a wall, and it needs to decide whether to go around or under the chair. This requires more advanced algorithms that can interpret the relationships between objects in the environment.

Machine Learning: Teaching Robots to See

In recent years, machine learning has taken robot vision to the next level. Instead of relying solely on pre-programmed rules, robots can now learn from examples. This is done through techniques like convolutional neural networks (CNNs), which are loosely inspired by the way the brain’s visual cortex processes what we see.

CNNs allow robots to recognize objects with a high degree of accuracy, even in complex environments. For example, a robot in a warehouse might be able to identify different types of packages, even if they’re stacked on top of each other or partially obscured.
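If you're curious what's inside a CNN, the core building block is a small filter slid across the image, followed by a nonlinearity (ReLU) and a downsampling step (pooling). Here is a toy sketch of one such layer in plain Python. The filter weights are hand-picked and hypothetical; in a real network they are learned from training data, and there are many filters stacked in many layers.

```python
def conv2d_relu(image, kernel):
    """Slide the kernel over the image (no padding, stride 1), then apply ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            activation = sum(
                kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw)
            )
            row.append(max(0.0, float(activation)))  # ReLU keeps only positive responses
        out.append(row)
    return out


def max_pool_2x2(feature_map):
    """Keep the strongest response in each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]


# Toy 5x5 image: bright stripe on the left, dark elsewhere.
image = [[1, 1, 0, 0, 0] for _ in range(5)]
# Hypothetical filter that fires on a bright-to-dark transition (left to right).
kernel = [[1, -1], [1, -1]]

features = conv2d_relu(image, kernel)
pooled = max_pool_2x2(features)
print(features)
print(pooled)
```

The pooled output is a smaller grid that still says "there's a bright-to-dark edge on the left side," which is the kind of compact summary later layers build on.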

Machine learning also helps robots improve over time. As their models are retrained on more (and more varied) data, they generally get better at recognizing objects and making decisions. This is crucial for autonomous systems that need to operate in unpredictable environments, like self-driving cars or delivery drones.

Challenges in Robot Vision

Of course, robot vision isn’t perfect. One of the biggest challenges is dealing with uncertainty. Unlike humans, who can quickly adapt to changes in lighting or perspective, robots can struggle when the environment doesn’t match their training data.

For example, a robot trained to recognize objects in a well-lit room might have trouble in low-light conditions. Similarly, a robot that’s used to seeing objects from a certain angle might get confused if the object is rotated or partially hidden.

Another challenge is real-time processing. Robots need to process visual data quickly, especially in fast-paced environments like manufacturing or autonomous driving. This requires a lot of computational power, and even the most advanced systems can experience lag or delays.
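A quick back-of-the-envelope check shows why this is hard. The sketch below assumes the vision stages run one after another on each frame, and the stage names and timings are purely hypothetical.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Time available to process one frame before the next one arrives."""
    return 1000.0 / frames_per_second


def fits_budget(stage_latencies_ms: dict, frames_per_second: float) -> bool:
    """True if the stages, run back to back, finish within one frame interval."""
    return sum(stage_latencies_ms.values()) <= frame_budget_ms(frames_per_second)


# Hypothetical per-frame timings for a simple pipeline, in milliseconds.
stages = {"capture": 5.0, "detect_objects": 22.0, "plan_motion": 4.0}

print(fits_budget(stages, 30))  # True: 31 ms fits in the ~33.3 ms budget
print(fits_budget(stages, 60))  # False: 31 ms exceeds the ~16.7 ms budget
```

Doubling the frame rate halves the time budget, so a pipeline that is comfortable at 30 frames per second can fall behind at 60.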

The Future of Robot Vision

So, what’s next for robot vision? One exciting area of research is multi-modal perception, where robots combine data from multiple sensors (like cameras, LiDAR, and infrared) to get a more complete picture of their environment. This could help robots operate more effectively in complex or unpredictable settings.
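One simple way to combine readings from different sensors is to weight each one by how much you trust it. The sketch below uses inverse-variance weighting, which is just one textbook approach and not necessarily what any particular robot uses. The sensor readings and variances are invented for the example.

```python
def fuse_estimates(measurements):
    """Combine (value, variance) pairs by inverse-variance weighting.

    Returns (fused_value, fused_variance). Less noisy sensors get more weight.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(variance <= 0 for _, variance in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical distance readings to the same object, in meters:
stereo = (2.40, 0.04)  # noisier estimate
lidar = (2.50, 0.01)   # more precise estimate
distance, variance = fuse_estimates([stereo, lidar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

The fused estimate lands closer to the LiDAR reading because it is the more precise of the two, and the combined variance is lower than either sensor's alone.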

Another promising development is the use of edge computing, where robots process data locally instead of relying on cloud servers. This could reduce latency and make real-time decision-making more efficient.

Ultimately, the goal is to create robots that can ‘see’ and understand the world as well as—or even better than—humans. And while we’re not quite there yet, the progress being made in robot vision is nothing short of amazing.

So, the next time you see a robot navigating its way through a room or picking up an object with precision, just remember: it’s not magic. It’s a combination of cutting-edge hardware, sophisticated software, and a whole lot of brainpower.