Perception Revolution

Did you know that almost every decision an autonomous robot makes depends on what its perception system tells it? Without perception, robots would be blind to their surroundings.

By Alex Rivera

In the fast-evolving world of robotics, perception systems are the unsung heroes. While we often marvel at the dexterity of robotic arms or the precision of their movements, it's the perception systems that allow robots to understand and interact with their environment. These systems are the eyes, ears, and even 'feelers' of robots, enabling them to make sense of the world around them. But here's the kicker: robot perception systems are evolving at a breakneck pace, and that progress is changing the game for autonomous systems.

So, what exactly is a robot perception system? In simple terms, it's the combination of hardware and software that allows a robot to gather information from its environment, process that data, and make decisions based on it. Think of it as the robot's sensory organs combined with its brain. And just like humans, robots rely heavily on these systems to perform tasks effectively. From navigating complex environments to identifying objects, perception systems are the backbone of autonomy.

But here's the twist: robot perception systems are no longer just about 'seeing' the world. They're about understanding it. And that shift is driving some of the most exciting advancements in robotics today.

The Evolution of Perception Systems

In the early days of robotics, perception systems were relatively simple. Robots could 'see' using basic cameras or sensors, but their ability to interpret that data was limited. They could detect obstacles, but they couldn't tell you what those obstacles were. Fast forward to today, and we're seeing robots equipped with advanced sensors, AI-driven software, and machine learning algorithms that allow them to not only detect objects but also understand their context.

Take LiDAR, for example. This technology measures distance by timing how long laser pulses take to bounce back, then uses those measurements to build 3D maps of a robot's surroundings. It has become a game-changer in autonomous systems. LiDAR allows robots to 'see' in 3D, giving them a more detailed understanding of their environment. But it's not just about seeing; it's about interpreting. Modern perception systems use AI to analyze the data from sensors like LiDAR, allowing robots to recognize objects, predict movements, and even anticipate potential hazards.
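To make the time-of-flight idea concrete, here is a minimal sketch of the arithmetic behind a LiDAR point cloud. Because each pulse travels out and back, the one-way distance is half of the speed of light multiplied by the round-trip time; each distance is then combined with the beam's direction (azimuth and elevation angles) to place a point in 3D space. The function names and sample values are hypothetical illustrations, not part of any LiDAR vendor's SDK, and real sensors add calibration and noise handling on top of this.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to a surface given a pulse's round-trip time in seconds.

    The pulse travels to the surface and back, so the one-way distance
    is half of (speed of light x elapsed time).
    """
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(distance_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one spherical LiDAR return into an (x, y, z) point in metres."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        distance_m * math.sin(el),
    )


def build_point_cloud(returns):
    """Turn (round_trip_s, azimuth_deg, elevation_deg) tuples into 3D points."""
    return [
        to_cartesian(range_from_time_of_flight(t), az, el)
        for t, az, el in returns
    ]


if __name__ == "__main__":
    # Hypothetical returns: one surface roughly 10 m ahead, one roughly 5 m to the left.
    sample = [(66.7e-9, 0.0, 0.0), (33.36e-9, 90.0, 0.0)]
    for point in build_point_cloud(sample):
        print(tuple(round(c, 2) for c in point))
```

A pulse that returns after about 66.7 nanoseconds corresponds to a surface roughly 10 metres away, which shows why LiDAR timing electronics must resolve very short intervals.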

And it's not just about vision. Robots are now equipped with a range of sensors that mimic human senses. Tactile sensors allow robots to 'feel' objects, while microphones enable them to 'hear' sounds in their environment. Combined with AI, these multi-sensory inputs allow robots to build a more complete picture of the world around them, leading to smarter, more autonomous decision-making.
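One common textbook way to combine readings from several sensors is inverse-variance weighting, sketched below. It assumes two or more sensors estimate the same quantity (for example, a LiDAR and a camera both estimating the distance to an object) with independent noise whose variance is known. The sensor names and noise figures are hypothetical; this illustrates the general idea of fusion, not how any particular robot does it.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    sensor: str
    value: float     # e.g., estimated distance to an object, in metres
    variance: float  # noise level of this sensor (larger = less trusted)


def fuse(measurements):
    """Inverse-variance weighted average of independent measurements.

    Returns (fused_value, fused_variance). Each reading is weighted by
    1 / variance, so noisier sensors count for less, and the fused
    variance is never larger than the smallest input variance.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(m.variance <= 0 for m in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    readings = [
        Measurement("lidar", 4.0, 0.01),   # hypothetical: precise range sensor
        Measurement("camera", 4.5, 0.25),  # hypothetical: noisier depth estimate
    ]
    print(fuse(readings))
```

The fused estimate lands close to the more trustworthy LiDAR reading while still using the camera's information, and its variance is lower than either sensor's alone.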

Why Perception Matters

So, why is all of this important? Well, perception systems are what make autonomy possible. Without them, robots would be little more than pre-programmed machines, capable of performing only a limited set of tasks in controlled environments. But with advanced perception systems, robots can operate in dynamic, unpredictable environments, making real-time decisions based on the data they gather.

Imagine a robot navigating a busy warehouse. Without perception systems, it would need to follow a pre-defined path, unable to adapt to changes in its environment. But with advanced perception, that same robot can detect obstacles, identify objects, and even predict the movements of people or other robots, allowing it to navigate safely and efficiently.
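Predicting where people or other robots will be can be illustrated with the simplest possible motion model: assume everything keeps its current velocity, step forward in time, and flag the first moment two predicted positions come closer than a safety radius. The constant-velocity assumption, the `Track` type, and the parameter values below are all hypothetical simplifications chosen for illustration; practical systems generally rely on richer motion models and tracking filters.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    x: float   # current position, metres
    y: float
    vx: float  # current velocity, metres per second
    vy: float


def predict(track: Track, t: float):
    """Position after t seconds, assuming constant velocity."""
    return (track.x + track.vx * t, track.y + track.vy * t)


def time_of_conflict(robot: Track, other: Track,
                     safety_radius: float = 1.0,
                     horizon: float = 5.0,
                     step: float = 0.1):
    """First time (s) within the horizon at which the predicted positions
    are closer than safety_radius, or None if no conflict is predicted."""
    steps = int(round(horizon / step))
    for i in range(steps + 1):
        t = i * step
        rx, ry = predict(robot, t)
        ox, oy = predict(other, t)
        if math.hypot(rx - ox, ry - oy) < safety_radius:
            return t
    return None


if __name__ == "__main__":
    robot = Track(0.0, 0.0, 1.0, 0.0)    # heading along +x at 1 m/s
    person = Track(5.0, 0.0, -1.0, 0.0)  # walking toward the robot
    print(time_of_conflict(robot, person))
```

A planner could use the returned time to slow down or reroute before the predicted conflict, rather than reacting only once an obstacle is already close.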

In industries like manufacturing, logistics, and healthcare, this level of autonomy is a game-changer. Robots can now perform tasks that were once thought to be too complex or dangerous, all thanks to their ability to perceive and understand their surroundings.

The Role of AI in Perception

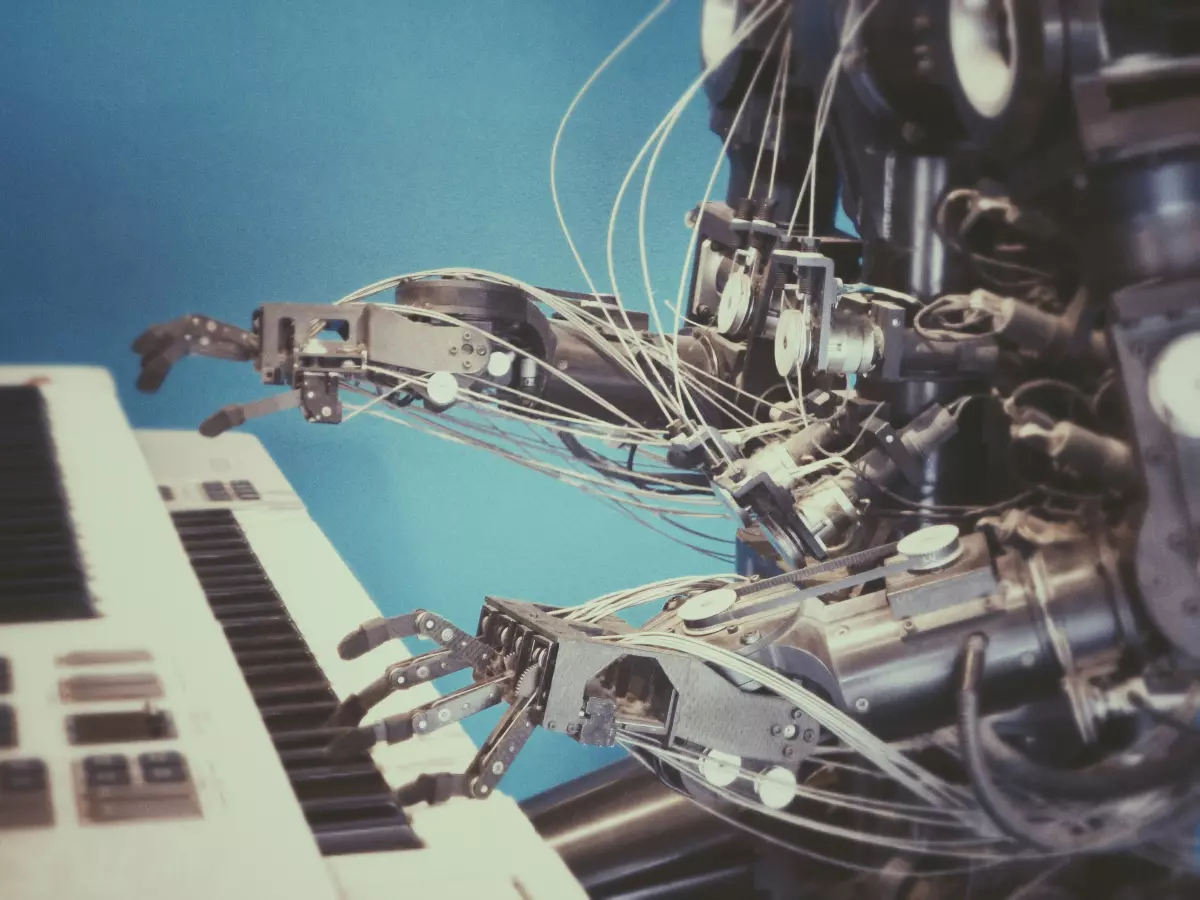

At the heart of modern perception systems is AI. Machine learning algorithms allow robots to process vast amounts of data from their sensors and make sense of it in real time. This is crucial for tasks like object recognition, where a robot needs to identify and classify objects in its environment. But AI goes beyond recognition: it enables robots to learn from their experiences.

For example, a robot equipped with AI-driven perception can learn to recognize new objects over time. It can also improve its decision-making abilities by analyzing past experiences and adjusting its behavior accordingly. This kind of learning is what separates modern robots from their predecessors, allowing them to adapt to new environments and tasks without the need for constant reprogramming.

But AI isn't just about making robots smarter; it's also about making them safer. In industries like healthcare, where robots are increasingly being used to assist with surgeries or care for patients, safety is paramount. AI-driven perception systems allow robots to detect potential hazards and respond to them in real time, reducing the risk of accidents or errors.

What's Next for Robot Perception?

As perception systems continue to evolve, we're likely to see even more exciting advancements in the world of robotics. One area that's generating a lot of buzz is the integration of perception with other emerging technologies, like 5G and edge computing. 5G offers fast, low-latency wireless links, and edge computing moves processing power closer to the robot; together they could let robots offload heavy perception workloads and get results back quickly, enabling even more complex autonomous behaviors.

Another area to watch is the development of more advanced multi-sensory perception systems. While current robots can 'see' and 'feel,' future systems may incorporate even more senses, like smell or taste, allowing robots to interact with their environment in ways we can't even imagine yet.

Ultimately, the future of robot perception is about creating machines that can not only understand their environment but also interact with it in meaningful ways. As these systems continue to improve, we're likely to see robots playing an even bigger role in industries ranging from manufacturing to healthcare to everyday life.

So, the next time you see a robot performing a complex task, remember—it's not just about the hardware or the software. It's about perception. And that's where the real revolution is happening.