Emotional Intelligence

Humanoid robots will never truly understand human emotions. Or will they?

By Kevin Lee

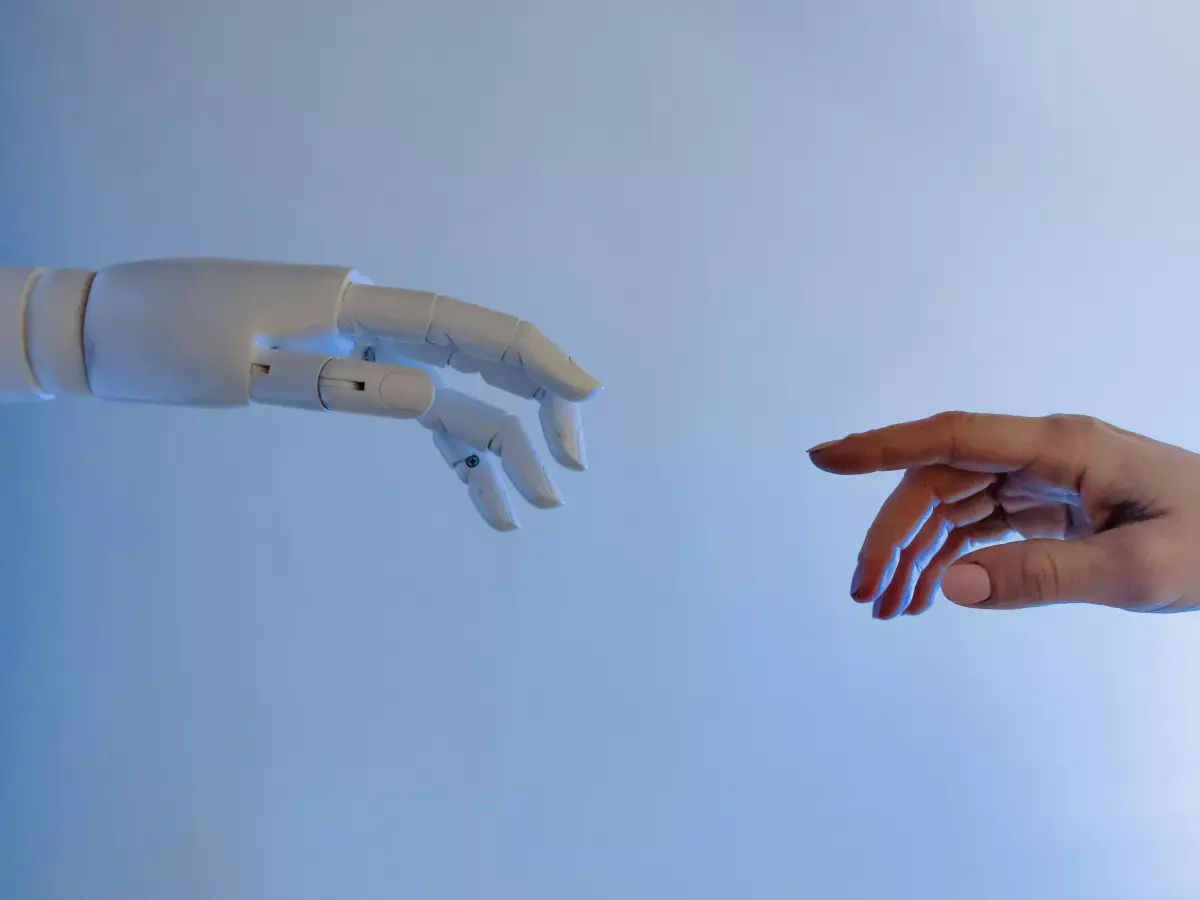

For years, the idea of humanoid robots understanding and responding to human emotions has been the stuff of science fiction. But what if I told you that the future is now? Engineers and researchers are working tirelessly to give robots something that seems inherently human: emotional intelligence. And no, this isn’t just about making robots smile when you smile. We’re talking about robots that can detect, interpret, and even respond to complex emotional cues.

But how do you design a machine to understand something as abstract and nuanced as human emotion? It all starts with the design of the robot itself. The physical appearance, sensor integration, and motion control algorithms all play a role in creating a robot that can not only mimic human behavior but also respond to it in emotionally intelligent ways.

Designing for Emotional Intelligence

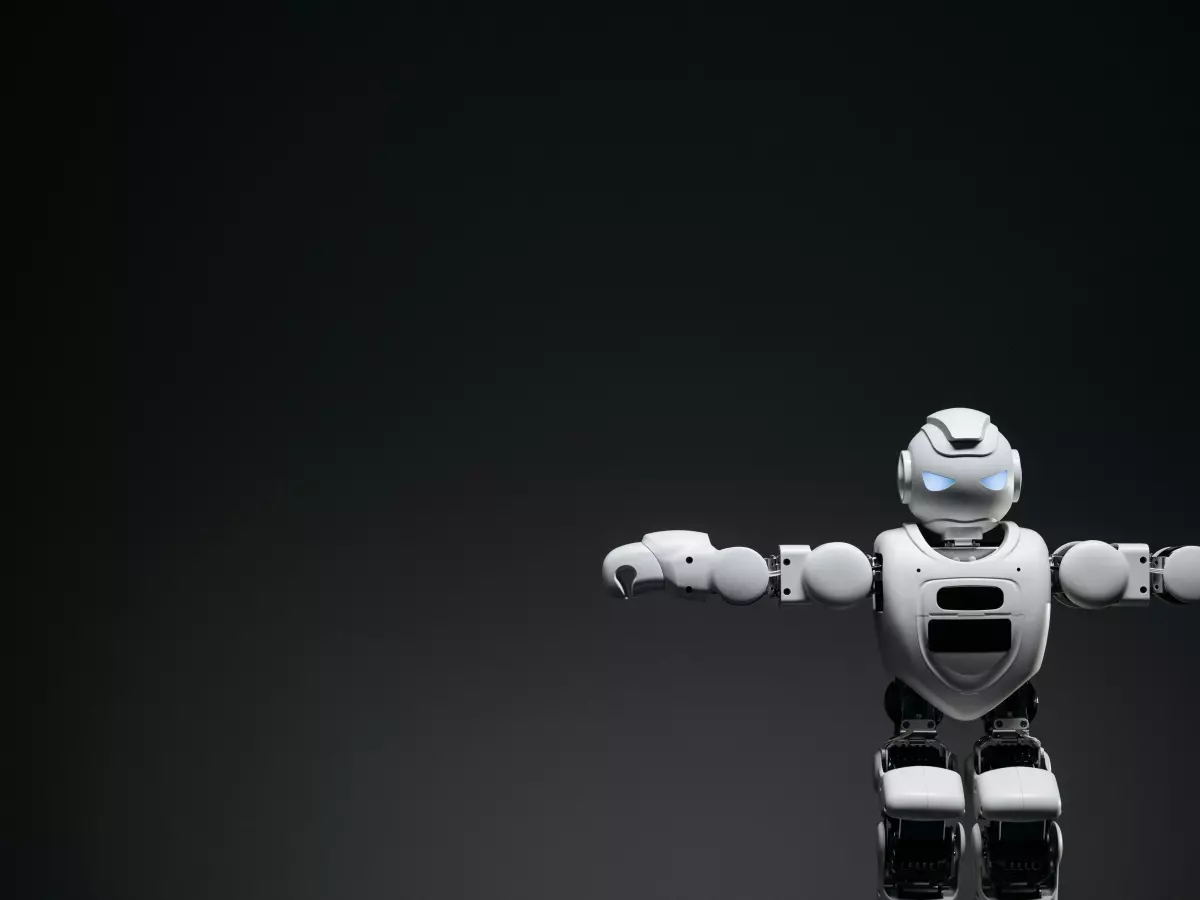

Let’s get one thing straight: humanoid robots don’t need to look human to understand emotions. But their design does need to be optimized for interaction with humans. This means incorporating facial expressions, body language, and even vocal tone into their design. The goal isn’t to create a robot that looks exactly like a human but one that can communicate in a way that humans naturally understand.

Take facial expressions, for example. A humanoid robot designed to recognize emotions will likely be equipped with cameras and other sensors that track changes in your facial muscles. These sensors feed data into algorithms that estimate whether you’re happy, sad, angry, or confused. But it’s not just about recognizing emotions; the robot also needs to respond appropriately. This is where motion control algorithms come into play. These algorithms dictate how the robot reacts physically, whether it mirrors your smile or offers a comforting gesture when you seem upset.

The Role of Sensors

Now, let’s talk sensors. For a humanoid robot to understand emotions, it needs to gather data from multiple sources. This is where sensor integration becomes crucial. Cameras, microphones, and even touch sensors all work together to provide the robot with a comprehensive understanding of its environment and the people in it.

For instance, a camera might capture your facial expressions, while a microphone picks up on the tone of your voice. Touch sensors could detect if you’re shaking or fidgeting, which might indicate nervousness or anxiety. All of this data is then processed by the robot’s algorithms to determine your emotional state. It’s like sensor fusion on steroids, with the goal of understanding not just what you’re doing but how you’re feeling.
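To make the idea of combining sensors a little more concrete, here is a minimal sketch of one common approach, often called late fusion: each sensor produces its own guess about your emotional state, and the robot blends those guesses with a weighted average. The emotion labels, the probabilities, and the sensor weights below are all invented for illustration; a real system would learn them from data.

```python
# Hypothetical emotion labels; a real robot would use whatever its models were trained on.
EMOTIONS = ("happy", "sad", "angry", "nervous")


def fuse(estimates, weights):
    """Blend per-sensor emotion probabilities with a weighted average (late fusion)."""
    total_weight = sum(weights[sensor] for sensor in estimates)
    fused = {}
    for emotion in EMOTIONS:
        weighted_sum = sum(
            weights[sensor] * probs.get(emotion, 0.0)
            for sensor, probs in estimates.items()
        )
        fused[emotion] = weighted_sum / total_weight
    return fused


# Made-up readings: each sensor reports how likely each emotion seems to it.
estimates = {
    "camera": {"happy": 0.1, "sad": 0.2, "angry": 0.1, "nervous": 0.6},
    "microphone": {"happy": 0.2, "sad": 0.3, "angry": 0.1, "nervous": 0.4},
    "touch": {"happy": 0.0, "sad": 0.1, "angry": 0.1, "nervous": 0.8},
}

# Assumed trust levels per sensor; here the camera counts most.
weights = {"camera": 0.5, "microphone": 0.3, "touch": 0.2}

fused = fuse(estimates, weights)
best_guess = max(fused, key=fused.get)
print(best_guess, round(fused[best_guess], 2))
```

In this toy example no single sensor is certain, but together they point fairly clearly toward nervousness.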

Motion Control Algorithms: The Emotional Response

So, the robot has gathered all this data about your emotional state. Now what? This is where motion control algorithms come into play. These algorithms are designed to translate the robot’s understanding of your emotions into physical actions. If the robot detects that you’re sad, it might offer a gentle touch or a soft-spoken word of comfort. If it senses excitement, it might mirror your enthusiasm with more animated gestures.
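At its simplest, that translation step can be pictured as a lookup from a detected emotion to a physical response. The sketch below assumes the robot already has an emotion label and a confidence score; the gesture names, speech styles, speed values, and the 0.6 threshold are hypothetical placeholders rather than settings from any real robot.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    gesture: str
    speech_style: str
    motion_speed: float  # 0.0 = still, 1.0 = full speed


# Hypothetical mapping from detected emotion to a physical response.
RESPONSES = {
    "sad": Response("gentle_touch", "soft", 0.3),
    "excited": Response("animated_gestures", "upbeat", 0.9),
    "happy": Response("mirror_smile", "warm", 0.6),
}

# Fallback used when the robot is unsure or has no rule for the emotion.
NEUTRAL = Response("idle_posture", "neutral", 0.5)


def choose_response(emotion, confidence, threshold=0.6):
    """Pick a response, staying neutral when the emotion estimate is weak."""
    if confidence < threshold:
        return NEUTRAL
    return RESPONSES.get(emotion, NEUTRAL)


print(choose_response("sad", 0.8))
print(choose_response("sad", 0.4))
```

The confidence check matters: a robot that offers comfort every time it half-suspects sadness would get tiresome quickly, so a cautious design falls back to neutral behavior when it isn’t sure.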

But here’s the kicker: these systems aren’t just about reacting to emotions. They’re also about learning from them. Some humanoid robots now incorporate machine learning, which in principle lets them improve their emotional intelligence over time. The more interactions they have with humans, the better they can become at recognizing and responding to emotional cues.
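What might learning from each interaction look like? One very simple possibility, shown here purely as an illustration, is a nearest-centroid learner: the robot keeps a running average of the sensor readings it has seen for each emotion and nudges that average every time it gets a new labeled example. The two features (smile intensity and voice pitch variation) and every number below are assumptions made up for this sketch; real systems typically rely on far more sophisticated models.

```python
class CentroidLearner:
    """Keeps a running average of feature vectors for each emotion label."""

    def __init__(self):
        self.centroids = {}
        self.counts = {}

    def update(self, features, label):
        """Fold one new labeled interaction into the average for that label."""
        seen = self.counts.get(label, 0)
        if seen == 0:
            self.centroids[label] = list(features)
        else:
            old = self.centroids[label]
            # Incremental mean: shift the old average toward the new example.
            self.centroids[label] = [
                c + (x - c) / (seen + 1) for c, x in zip(old, features)
            ]
        self.counts[label] = seen + 1

    def predict(self, features):
        """Return the label whose average is closest, or None if untrained."""
        if not self.centroids:
            return None

        def squared_distance(label):
            return sum(
                (c - x) ** 2 for c, x in zip(self.centroids[label], features)
            )

        return min(self.centroids, key=squared_distance)


# Hypothetical features: (smile intensity, voice pitch variation), both 0 to 1.
learner = CentroidLearner()
learner.update((0.9, 0.7), "happy")
learner.update((0.7, 0.5), "happy")
learner.update((0.1, 0.2), "sad")

print(learner.predict((0.75, 0.6)))
print(learner.predict((0.2, 0.1)))
```

Each call to `update` shifts the stored average a little, so the robot’s picture of what “happy” looks like keeps adjusting as it meets more people.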

The Ethical Dilemma

Of course, all of this raises some ethical questions. Should robots be designed to understand and respond to human emotions? Is there a risk that we might start relying too much on machines for emotional support? These are questions that researchers and ethicists are still grappling with.

On one hand, emotionally intelligent robots could be incredibly useful in fields like healthcare, where they could provide companionship and emotional support to patients. On the other hand, there’s a concern that we might lose some of our own emotional intelligence if we start outsourcing emotional labor to machines.

Full Circle: The Future of Emotional Intelligence in Robots

So, will humanoid robots ever truly understand human emotions? The answer is complicated. While they may never experience emotions the way humans do, they’re getting better at recognizing and responding to them. And as sensor technology and motion control algorithms continue to evolve, the line between human and robot emotional intelligence will only get blurrier.

In the end, the goal isn’t to create robots that feel emotions but robots that understand them well enough to interact with humans in meaningful ways. Whether that’s a good thing or not? Well, that’s up to you to decide.