Seeing vs. Feeling

Humanoid robots rely on both vision and tactile sensors, but which one is more important for realistic interaction?

By Hiroshi Tanaka

Did you know that over 50% of humanoid robots today use a combination of vision and tactile sensors to navigate their environment? That’s right! While we often think of robots as machines with cameras for eyes, the truth is that tactile sensors are just as crucial to their design. The real question is: which sensor system is more important for creating a truly human-like robot?

In this article, we’ll dive deep into the world of humanoid robot sensors, focusing on the two heavyweights: vision and tactile sensors. We’ll explore how each system works, their strengths and weaknesses, and how they contribute to a robot’s ability to interact with the world. By the end, you’ll have a better understanding of which sensor system is the real MVP in humanoid robot design.

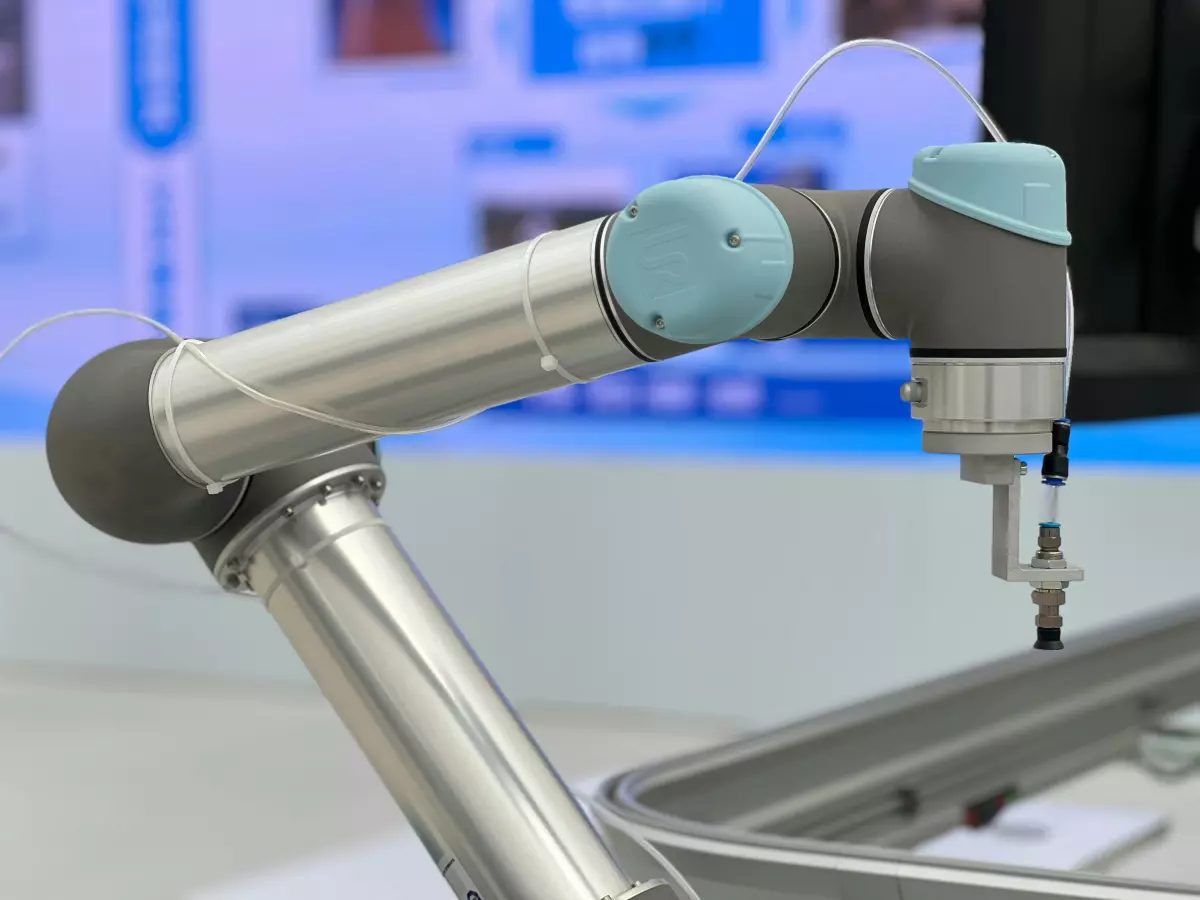

Vision Sensors: The Eyes of the Robot

Vision sensors are often the first thing that comes to mind when we think about humanoid robots. After all, humans rely heavily on sight to interact with the world, so it makes sense that robots would do the same. Vision sensors in humanoid robots typically consist of cameras and depth sensors that allow the robot to 'see' its surroundings.

These sensors supply the data for tasks like object recognition, navigation, and facial recognition. Advanced vision systems can also detect obstacles, track movement, and even interpret human gestures. In short, they give the robot the situational awareness it needs to interact with its environment.

But vision sensors aren’t perfect. They can struggle in low-light conditions, have difficulty interpreting complex textures, and often require significant processing power to analyze the data they collect. Plus, they can’t 'feel' objects, which is where tactile sensors come in.
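To put "significant processing power" in perspective, here is a back-of-the-envelope sketch in Python of how much raw data a camera produces before any analysis happens. The resolutions, frame rates, and bit depths are illustrative assumptions, not the specs of any particular robot.

```python
def raw_video_bytes_per_second(width: int, height: int, channels: int, fps: int,
                               bytes_per_channel: int = 1) -> int:
    """Uncompressed data rate of one camera stream, in bytes per second."""
    return width * height * channels * bytes_per_channel * fps


# Assumed example: one 1080p RGB camera (8 bits per channel) at 30 frames/s.
rgb = raw_video_bytes_per_second(1920, 1080, channels=3, fps=30)
# Assumed example: one 640x480 depth camera with 16-bit depth values at 30 frames/s.
depth = raw_video_bytes_per_second(640, 480, channels=1, fps=30, bytes_per_channel=2)

print(f"RGB:   {rgb / 1e6:.1f} MB/s")    # RGB:   186.6 MB/s
print(f"Depth: {depth / 1e6:.1f} MB/s")  # Depth: 18.4 MB/s
```

Under these assumptions, a robot with two such RGB cameras plus a depth sensor would be handling roughly 390 MB of raw data every second, and recognizing objects or gestures means running models over much of that stream.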

Tactile Sensors: The Robot’s Sense of Touch

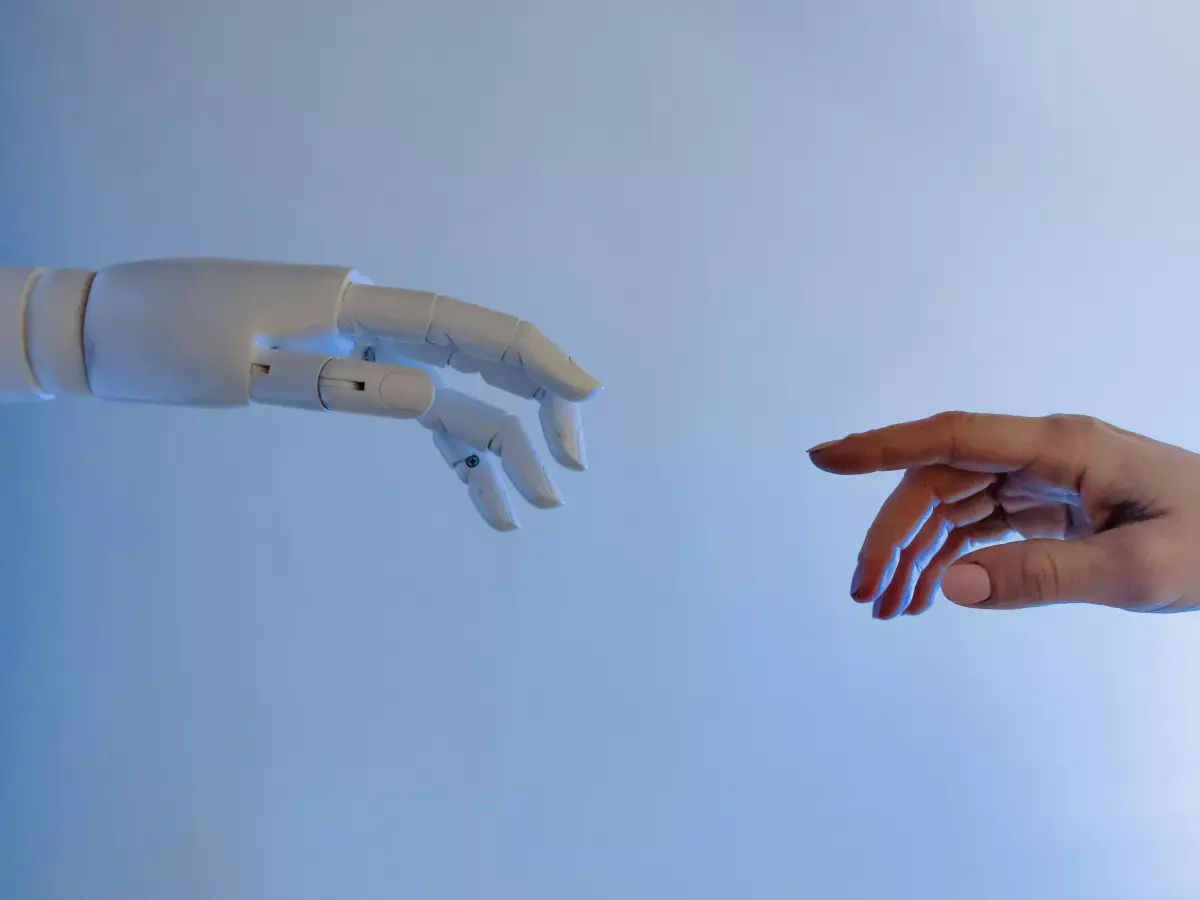

While vision sensors let robots see, tactile sensors allow them to feel. These sensors are embedded in the robot’s skin, hands, and sometimes even feet, giving it the ability to detect pressure, texture, and temperature. Tactile sensors are crucial for tasks that require delicate manipulation, like picking up fragile objects or interacting with humans.

Imagine a robot trying to shake your hand. Without tactile sensors, it would have no idea how much pressure to apply and could crush your hand in the process. Tactile sensors provide the feedback the robot needs to adjust its grip in real time, making interactions smoother and more human-like.
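The handshake example boils down to a feedback loop: measure contact pressure, compare it to a comfortable target, and nudge the grip. The Python sketch below shows the idea with a simple incremental controller and a toy sensor model. The target pressure, gain, force cap, and contact area are made-up values for illustration, and `GripController` and `simulated_pressure` are hypothetical names, not part of any robot SDK.

```python
from dataclasses import dataclass


@dataclass
class GripController:
    """Hypothetical incremental grip controller driven by tactile feedback.

    All default values are illustrative assumptions, not real robot specs.
    """
    target_pressure_kpa: float   # comfortable contact pressure to aim for
    gain: float = 0.5            # newtons of force change per kPa of error
    max_force_n: float = 20.0    # hard safety cap on commanded grip force
    force_n: float = 0.0         # current commanded grip force

    def update(self, measured_pressure_kpa: float) -> float:
        # Positive error: squeezing too lightly. Negative error: too hard.
        error = self.target_pressure_kpa - measured_pressure_kpa
        self.force_n += self.gain * error
        # Clamp: never command a negative force or exceed the safety cap.
        self.force_n = min(max(self.force_n, 0.0), self.max_force_n)
        return self.force_n


def simulated_pressure(force_n: float, contact_area_cm2: float = 4.0) -> float:
    """Toy tactile sensor: pressure = force / area (1 N per cm^2 = 10 kPa)."""
    return force_n / contact_area_cm2 * 10.0


controller = GripController(target_pressure_kpa=15.0)
for step in range(8):
    pressure = simulated_pressure(controller.force_n)  # "feel" the contact
    force = controller.update(pressure)                # adjust the grip
    print(f"step {step}: felt {pressure:5.2f} kPa, new force {force:.2f} N")
```

Because each step changes the force in proportion to the pressure error, the grip settles toward the target (about 6 N here, which produces 15 kPa over the assumed 4 cm² contact patch) instead of ramping up until something gives. The `max_force_n` cap is a second line of defense in case the sensor misreads.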

However, tactile sensors have limitations too. They can't provide the same broad environmental awareness as vision sensors, and they're often harder than cameras to integrate into a robot's design. They also need regular calibration to keep their feedback accurate.

Which is More Important?

So, which sensor system is more important for humanoid robots: vision or tactile? The answer, unsurprisingly, is that both are equally important, but for different reasons. Vision sensors give the robot a broad understanding of its environment, allowing it to navigate and recognize objects. Tactile sensors, on the other hand, give the robot the fine-tuned control it needs to interact with those objects in a meaningful way.

Think of it this way: vision sensors are like the robot’s GPS, guiding it through the world, while tactile sensors are like its hands, allowing it to interact with that world. Without vision, the robot would be blind, but without touch, it would be clumsy and imprecise.

In recent years, researchers have been working to integrate the two sensor systems more seamlessly. For example, some humanoid robots now use vision sensors to locate an object and then switch to tactile sensors for fine manipulation. This combination of 'seeing' and 'feeling' opens the door to tasks that are difficult with either sense alone, like assembling delicate electronics or performing surgery.
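The see-then-feel handoff described above can be sketched as a small state machine: vision drives the search and approach, and touch takes over once the hand is close or in contact. This is a minimal Python illustration under assumed inputs. The phase names, the simplified boolean sensor readings, and the 5 cm handoff distance are hypothetical, and a real controller might keep using both senses throughout rather than switching cleanly from one to the other.

```python
from enum import Enum, auto


class Phase(Enum):
    SEARCH = auto()    # vision looks for the target object
    APPROACH = auto()  # vision guides the hand toward it
    GRASP = auto()     # touch takes over for fine control
    DONE = auto()      # object held with a stable grip


def next_phase(phase: Phase, object_seen: bool, distance_m: float,
               contact: bool, grip_stable: bool,
               handoff_distance_m: float = 0.05) -> Phase:
    """One step of a hypothetical vision-to-touch handoff.

    All inputs and the default handoff distance are illustrative assumptions.
    """
    if phase is Phase.SEARCH:
        return Phase.APPROACH if object_seen else Phase.SEARCH
    if phase is Phase.APPROACH:
        if not object_seen:
            return Phase.SEARCH              # lost sight of it: look again
        if contact or distance_m <= handoff_distance_m:
            return Phase.GRASP               # close enough: switch to touch
        return Phase.APPROACH
    if phase is Phase.GRASP:
        return Phase.DONE if contact and grip_stable else Phase.GRASP
    return Phase.DONE


# Made-up sensor readings: (object_seen, distance_m, contact, grip_stable)
observations = [
    (False, float("inf"), False, False),
    (True, 0.40, False, False),
    (True, 0.12, False, False),
    (True, 0.04, False, False),
    (True, 0.00, True, False),
    (True, 0.00, True, True),
]

phase = Phase.SEARCH
for seen, dist, contact, stable in observations:
    phase = next_phase(phase, seen, dist, contact, stable)
    print(phase.name)
# Prints, one per line: SEARCH, APPROACH, APPROACH, GRASP, GRASP, DONE
```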

The Future of Sensor Integration

The future of humanoid robots lies in the seamless integration of vision and tactile sensors. As AI and machine learning algorithms continue to improve, robots will become better at processing the data from both sensor systems in real time, allowing for more fluid and natural interactions.
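Processing both streams together often comes down to sensor fusion: combining two noisy estimates of the same quantity into one better estimate. A classic textbook rule, and the scalar core of a Kalman filter update, is inverse-variance weighting, sketched below in Python. It's a swapped-in standard technique rather than anything the article specifies, and the position and noise figures are assumptions chosen for illustration.

```python
def fuse(estimate_a: float, var_a: float,
         estimate_b: float, var_b: float) -> tuple[float, float]:
    """Inverse-variance weighted average of two independent measurements.

    Returns (fused_estimate, fused_variance). The more precise input
    (smaller variance) gets proportionally more weight.
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused = (w_a * estimate_a + w_b * estimate_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)
    return fused, fused_var


# Assumed numbers: the camera places an object's edge at 102 mm with a
# 5 mm standard deviation; fingertip contact places it at 100 mm with 1 mm.
vision_mm, vision_var = 102.0, 5.0 ** 2
touch_mm, touch_var = 100.0, 1.0 ** 2

pos, var = fuse(vision_mm, vision_var, touch_mm, touch_var)
print(f"fused position: {pos:.2f} mm, std dev: {var ** 0.5:.2f} mm")
# fused position: 100.08 mm, std dev: 0.98 mm
```

The fused estimate leans toward the more precise tactile reading, and its uncertainty is lower than either input's, which is the payoff of combining the senses rather than picking one.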

One exciting area of research is the development of 'soft' robots, which use flexible materials and advanced tactile sensors to mimic the feel of human skin. These robots could one day be used in healthcare, where a soft touch is often more important than brute strength.

Another promising development is the use of AI to enhance the robot’s ability to interpret sensory data. For example, AI could help a robot 'learn' how to adjust its grip based on the texture and weight of an object, making it more adaptable and versatile.
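Whatever a learning system adds, physics sets a floor. For a simple two-finger pinch holding an object against gravity, friction at the two contacts must carry the weight, so each fingertip needs a normal force of at least m·g / (2μ), where μ is the friction coefficient between the fingertip and the object's surface. The Python sketch below computes that floor with a safety margin. The masses, friction coefficients, and 1.5 safety factor are assumed values, and in the scenario described above, a learned model might be what estimates μ from the object's texture.

```python
G = 9.81  # gravitational acceleration, m/s^2


def min_pinch_force(mass_kg: float, friction_coeff: float,
                    safety_factor: float = 1.5) -> float:
    """Minimum normal force per fingertip for a two-finger pinch grip.

    Each contact can supply at most friction_coeff * F_n of friction, and the
    two contacts together must support the weight:
        2 * mu * F_n >= m * g   =>   F_n >= m * g / (2 * mu)
    The safety factor adds margin for noise, jostling, and a misjudged mu.
    """
    if mass_kg < 0 or friction_coeff <= 0:
        raise ValueError("mass must be non-negative and friction_coeff positive")
    return safety_factor * mass_kg * G / (2.0 * friction_coeff)


# Same assumed 200 g object, two assumed surface textures.
for label, mu in [("grippy surface", 0.5), ("slippery surface", 0.25)]:
    print(f"{label} (mu={mu}): {min_pinch_force(0.2, mu):.2f} N per finger")
# grippy surface (mu=0.5): 2.94 N per finger
# slippery surface (mu=0.25): 5.89 N per finger
```

Halving the friction coefficient doubles the required force, which is why a robot that can sense a slippery texture and adjust accordingly can hold objects securely without simply squeezing everything hard.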

Final Thoughts

At the end of the day, both vision and tactile sensors are essential for creating humanoid robots that can interact with the world in a human-like way. Vision sensors provide the robot with a broad understanding of its environment, while tactile sensors give it the fine-tuned control it needs to interact with that environment. The real magic happens when these two systems work together, allowing robots to see and feel their way through the world.

As sensor technology continues to evolve, we can expect humanoid robots to become even more capable and lifelike. Who knows? In a few years, we might be shaking hands with robots that can not only see us but also feel the warmth of our skin.

As roboticist Rodney Brooks once said, 'The real challenge in robotics is not making robots that can think, but robots that can feel.'