Robots need better senses

Imagine a robot that can see, hear, and feel just like you. Now, imagine it trying to pick up a glass of water. Sounds simple, right? Well, not quite. Despite all the advancements in robotics, robots still struggle with basic tasks that humans do without thinking. Why? Because they lack one critical element: human-like senses.

By Dylan Cooper

Sure, robots can be programmed to perform complex calculations, navigate tricky environments, or even beat humans at chess. But when it comes to interacting with the real world, they often fall short. The reason is simple: while robots have sensors, they don't have senses. And there's a big difference between the two.

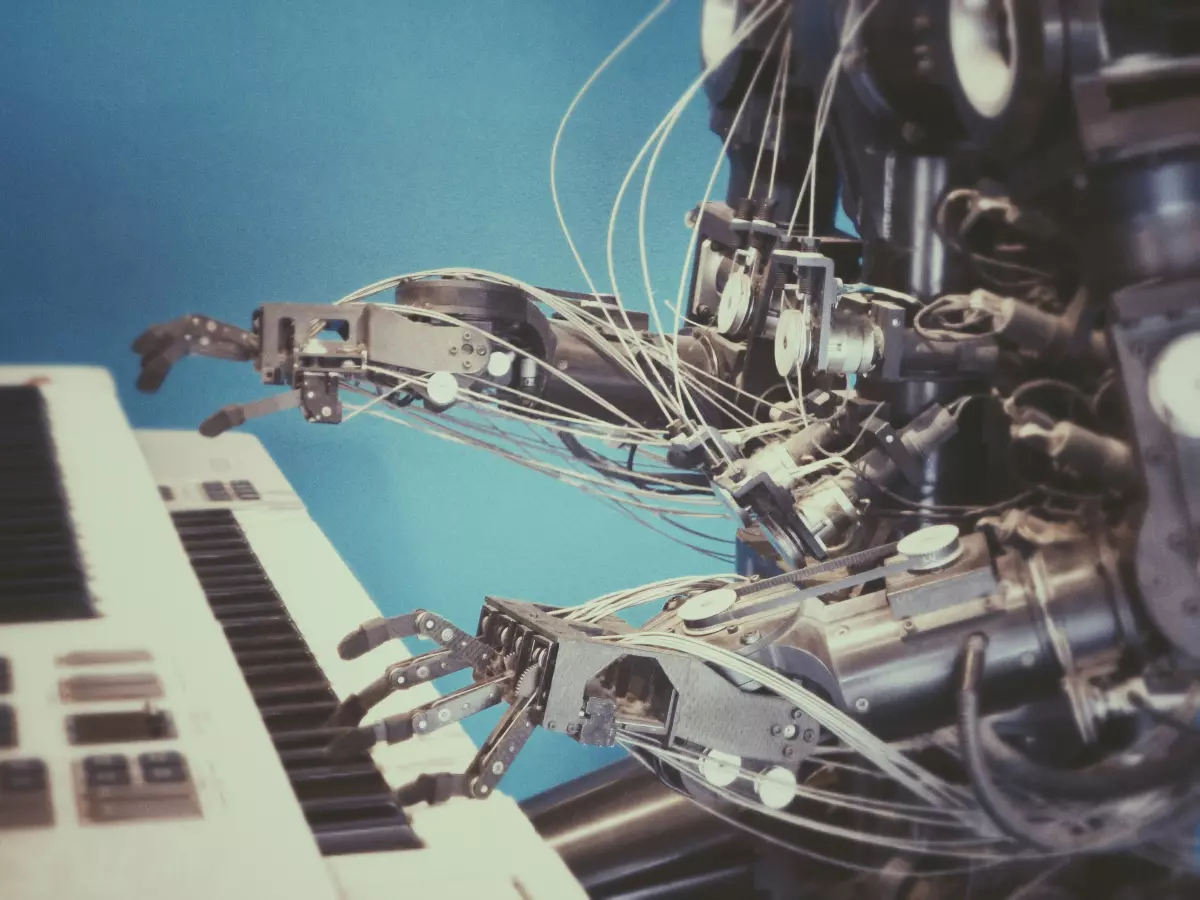

Take vision, for example. Most robots today rely on cameras and computer vision algorithms to "see" their surroundings. But these systems are nowhere near as sophisticated as human vision. They struggle with depth perception, object recognition, and even understanding context. A robot might be able to identify a cup on a table, but it won't necessarily know how to pick it up without spilling the contents.

And it's not just vision. Robots also lack the ability to feel in the way humans do. While some robots are equipped with touch sensors, these are often rudimentary and can't replicate the complex sensations of human skin. This makes it difficult for robots to handle delicate objects or perform tasks that require fine motor skills.

Why senses matter

So, why is this such a big deal? Well, if robots are ever going to truly integrate into our daily lives, they need to be able to interact with the world in a more human-like way. This means not just seeing and touching, but also hearing, smelling, and even tasting. Imagine a robot chef that could taste food as it cooks or a robot doctor that could diagnose illnesses by smell. The possibilities are endless, but they all depend on one thing: giving robots better senses.

Of course, this is easier said than done. Developing sensors that can mimic human senses is a huge challenge. For one thing, human senses are incredibly complex. Take touch, for example. Human skin is packed with nerve endings that allow us to feel everything from temperature to pressure to pain. Replicating this in a robot is no small feat.

Then there's the issue of processing all this sensory information. The human brain is a master at interpreting sensory data and making split-second decisions based on it. Robots, on the other hand, often struggle to process even basic sensory input in real time. This is where advances in AI and machine learning come into play. By teaching robots to better interpret sensory data, we can help them make smarter decisions and perform more complex tasks.

The future of robot senses

So, what does the future hold? Well, we're already seeing some exciting developments in this area. Researchers are working on everything from artificial skin that can sense temperature and pressure to robots that can "see" in 3D using advanced cameras and sensors. There's even work being done on robots that can "smell" using chemical sensors.

But we're still a long way from creating robots that can truly replicate human senses. For now, most robots are still limited by their basic sensors and lack the ability to fully understand and interact with the world around them. As technology continues to advance, though, robots with senses that rival our own look increasingly plausible.

So, here's the big question: will robots ever be able to fully replicate human senses? And if they do, what will that mean for the future of robotics? Will we see robots that can cook, clean, and even diagnose illnesses? Or will there always be a gap between human and robot capabilities?

One thing's for sure: the future of robotics is going to be exciting. And it all starts with giving robots better senses.