The Evolution of Control

Robots are getting smarter, and most people think it's all about AI. Spoiler alert: it's not.

By Kevin Lee

When people think about robots, they often imagine humanoid machines powered by cutting-edge artificial intelligence, capable of learning and adapting to their environment. The popular belief is that AI is the sole driver behind the incredible advancements in robotics. However, while AI plays a significant role, the evolution of robot control systems is just as important. Without these systems, robots would still be stuck in the mechanical age, performing only the most basic, pre-programmed tasks.

Modern robots, by contrast, are far more autonomous, capable of complex decision-making and precise movements. But how did we get here? The journey from simple automation to advanced control systems is a fascinating one, filled with breakthroughs in hardware, software, and control theory.

The Early Days: Hardwired Automation

In the early days of robotics, control systems were rudimentary at best. Robots were hardwired to perform specific tasks, with little to no flexibility. Think of the classic industrial robots you see in car manufacturing plants. These machines were designed to perform repetitive tasks like welding or assembling parts, but they had no ability to adapt to changes in their environment. If something went wrong, the robot would either stop or continue making the same mistake over and over.

The control systems in these early robots were based on simple feedback loops, where sensors would provide basic information about the robot's position or speed, and the system would make minor adjustments to keep things on track. But there was no room for learning or adaptation. These robots were essentially glorified fixed-function machines, not much different from the assembly-line equipment of the early 20th century.
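To make the idea of a simple feedback loop concrete, here is a minimal sketch in Python. It is an illustration only, not a reconstruction of any particular early controller: the function names, the gain of 0.5, and the one-dimensional "position" are all assumptions chosen for clarity. Each cycle compares the sensed position with the target and nudges the position by a fraction of the error.

```python
def proportional_step(position, target, gain=0.5):
    """Return the new position after one feedback correction (hypothetical model)."""
    error = target - position        # compare the sensor reading with the goal
    return position + gain * error   # minor adjustment toward the target


def run_loop(start, target, steps=10, gain=0.5):
    """Apply the correction repeatedly and record every position along the way."""
    history = [start]
    for _ in range(steps):
        history.append(proportional_step(history[-1], target, gain))
    return history


if __name__ == "__main__":
    for step, pos in enumerate(run_loop(start=0.0, target=10.0)):
        print(f"step {step}: position = {pos:.3f}")
```

With a gain of 0.5 the remaining error halves on every cycle, so the position settles on the target without the loop ever "learning" anything: change the conditions and it simply repeats the same rule.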

The Rise of Programmable Logic Controllers (PLCs)

The introduction of programmable logic controllers (PLCs) in the late 1960s marked a significant leap forward in robot control systems. PLCs allowed robots to be programmed with more complex instructions, enabling them to handle a wider range of tasks. Instead of being hardwired for a single function, robots could now be reprogrammed to perform different tasks without needing to be physically modified.

PLCs also introduced more sophisticated control algorithms, allowing robots to make more precise movements and respond to changes in their environment. However, these systems were still limited by the fact that they relied on pre-programmed instructions. The robot could only do what it was told, and there was no room for real-time decision-making or adaptation.

Enter AI and Machine Learning

The real game-changer in robot control systems came with the advent of artificial intelligence and machine learning. These technologies allowed robots to move beyond simple automation and into the realm of autonomy. Instead of relying solely on pre-programmed instructions, robots could now learn from their environment and make decisions in real time.

Machine learning algorithms enable robots to analyze vast amounts of data from their sensors and use that information to improve their performance over time. For example, a robot equipped with machine learning capabilities can learn to navigate a complex environment by trial and error, gradually improving its ability to avoid obstacles and reach its destination.
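Trial-and-error learning of this kind can be sketched with tabular Q-learning, one common reinforcement learning technique. The example below is a toy, not how any production robot is trained: the six-cell corridor, the reward of 1.0 at the goal, and every parameter value are assumptions made for illustration. The simulated robot starts in cell 0, bumps around at random at first, and gradually learns that stepping right leads to the goal.

```python
import random

N_STATES = 6        # hypothetical corridor: cells 0..5, goal is the last cell
ACTIONS = (-1, +1)  # action 0 steps left, action 1 steps right


def move(state, action):
    """Apply an action; the walls at both ends keep the robot inside the corridor."""
    return min(max(state + ACTIONS[action], 0), N_STATES - 1)


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values by trial and error (tabular Q-learning)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            explore = rng.random() < epsilon
            if explore or q[state][0] == q[state][1]:
                action = rng.randrange(2)                 # try something at random
            else:
                action = 0 if q[state][0] > q[state][1] else 1  # use what was learned
            nxt = move(state, action)
            reward = 1.0 if nxt == N_STATES - 1 else 0.0
            # Q-learning update: move the estimate toward reward + discounted future value
            q[state][action] += alpha * (reward + gamma * max(q[nxt]) - q[state][action])
            state = nxt
    return q


def greedy_path(q, max_steps=20):
    """Follow the learned values from cell 0 without any exploration."""
    state, path = 0, [0]
    while state != N_STATES - 1 and len(path) <= max_steps:
        state = move(state, 1 if q[state][1] > q[state][0] else 0)
        path.append(state)
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

Nothing in the code tells the robot which way the goal lies. The preference for stepping right emerges only from the rewards it stumbles on, which is the essential difference from a pre-programmed instruction list.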

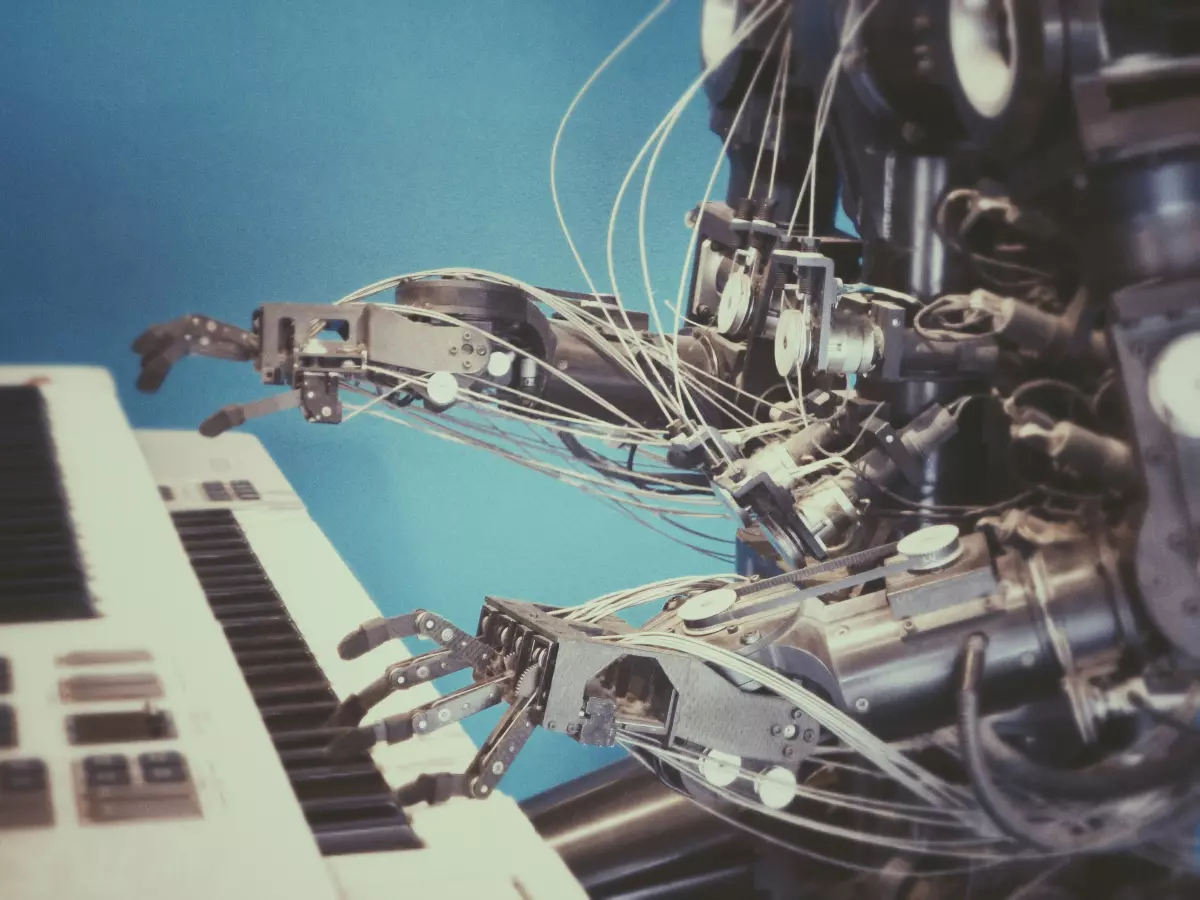

This shift from pre-programmed control to AI-driven autonomy has opened up a world of possibilities for robotics. Robots are now taking on tasks that were once thought to be the exclusive domain of humans, such as driving cars, assisting surgeons in the operating room, and even exploring other planets.

The Future: Adaptive Control Systems

So, where do we go from here? The future of robot control systems lies in adaptive control, where robots can not only learn from their environment but also adapt their behavior in real time. This means that robots will be able to handle unexpected situations more effectively, making them even more autonomous and capable.
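One way to picture adaptation in real time is a controller that keeps re-estimating how its own hardware responds. The sketch below is a deliberately tiny illustration under stated assumptions: the "plant" is a hypothetical system whose output is simply gain times command, the gain changes halfway through (as if the robot picked up a heavier load), and the estimate is updated with a normalized gradient step. None of these names or numbers come from a real robot.

```python
def adaptive_run(true_gains, target=1.0, rate=0.5, initial_estimate=1.0):
    """Track `target` while the hidden plant gain changes over time.

    true_gains: the actual (unknown to the controller) gain at each time step.
    Returns a list of (output, gain_estimate) pairs, one per step.
    """
    estimate = initial_estimate
    log = []
    for k in true_gains:
        u = target / estimate                    # command based on the current belief
        y = k * u                                # what the plant actually produces
        prediction_error = y - estimate * u      # how wrong the belief turned out to be
        estimate += rate * prediction_error / u  # normalized gradient correction
        log.append((y, estimate))
    return log


if __name__ == "__main__":
    # Hypothetical scenario: gain is 2.0 for 20 steps, then drops to 0.5.
    gains = [2.0] * 20 + [0.5] * 20
    for step, (y, est) in enumerate(adaptive_run(gains)):
        print(f"step {step:2d}: output = {y:.4f}, estimated gain = {est:.4f}")
```

In this scenario the output drifts off target the moment the gain changes and is then pulled back as the estimate catches up, with no one reprogramming the controller in between.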

One area of research that holds great promise is the development of control systems that can mimic the way humans learn and adapt. By studying how the human brain processes information and makes decisions, researchers hope to create robots that can learn and adapt in much the same way. This could lead to robots that are not only more autonomous but also more intuitive and capable of working alongside humans in a wide range of environments.

In conclusion, while AI often steals the spotlight, the evolution of robot control systems is just as crucial to the development of modern robotics. From the early days of hardwired automation to the rise of AI-driven autonomy, control systems have come a long way. And with the advent of adaptive control, the future of robotics looks brighter than ever.

Fun fact: The first industrial robot, Unimate, was installed in a General Motors plant in 1961. It weighed over 4,000 pounds and was used to lift hot pieces of metal!