Hand-Eye Sync

Humans have been perfecting hand-eye coordination for millennia, from throwing spears to typing on smartphones. But humanoid robots? They're just getting started.

By Alex Rivera

When you think about catching a ball or threading a needle, it’s easy to forget the sheer complexity behind such tasks. Your brain processes visual information, calculates distances, and sends precise signals to your hand muscles—all in a split second. Now, imagine trying to replicate that in a humanoid robot. It’s like asking a toddler to perform brain surgery. But thanks to advances in sensor integration, motion control algorithms, and AI, humanoid robots are starting to get the hang of it. Well, sort of.

Humanoid robots rely on a combination of cameras (their eyes) and actuators (their muscles) to mimic this coordination. But unlike humans, who have millions of years of evolution to thank, robots need meticulously programmed algorithms to pull off even the simplest tasks. The real challenge? Synchronizing their sensors and motors to work in harmony, without turning into a clumsy mess of metal and wires.

The Role of Vision Sensors

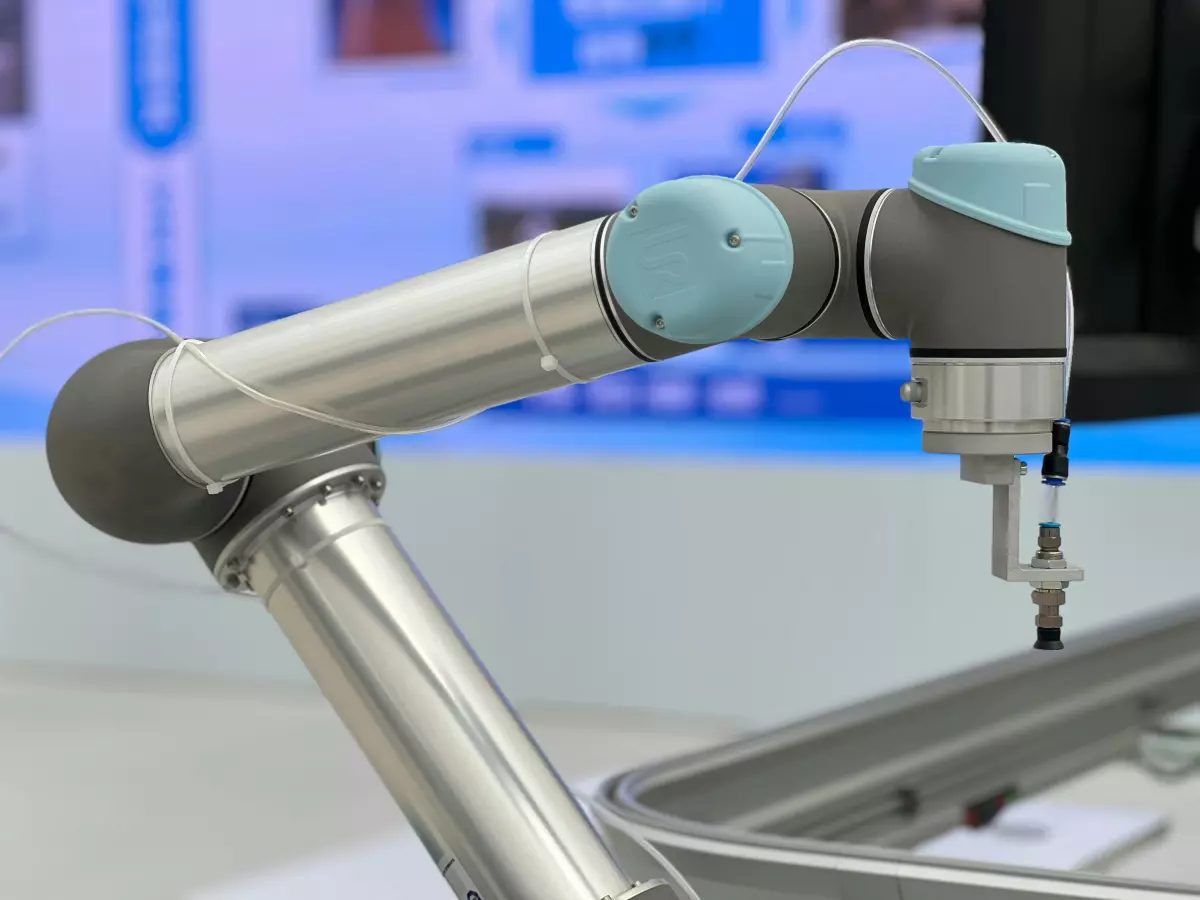

Let’s start with the eyes, or in this case, the vision sensors. Most humanoid robots use stereo cameras or depth sensors to perceive the world in 3D. These sensors capture images and calculate the distance to objects, much as our two eyes work together to give us depth perception. But here’s the catch: a robot has no brain to make sense of this data automatically. Instead, it relies on algorithms to interpret the visual information and make decisions.
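To make the two-eyes idea concrete, here is a minimal sketch of the textbook relationship between disparity (how far an object shifts between the left and right images) and distance. It assumes two identical, parallel, already-rectified cameras; the function name and the numbers are made up for illustration, not taken from any particular robot.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen by two parallel, rectified cameras.

    depth = focal_length * baseline / disparity
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical setup: 700 px focal length, cameras 6 cm apart.
for disparity in (70.0, 35.0, 7.0):
    depth = depth_from_disparity(700.0, 0.06, disparity)
    print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Notice that the smaller the shift between the two images, the farther away the object is, which is also why stereo depth estimates get less reliable at long range.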

For example, when a humanoid robot reaches for an object, its vision sensors first identify the object’s location. Then, the robot’s AI estimates the object’s size, shape, and distance. This data is fed into motion control algorithms, which calculate the precise movements needed to reach out and grab the object. Sounds simple, right? Well, not quite.
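What does "calculating the precise movements" look like? One classic piece of the puzzle is inverse kinematics: working backward from where the hand should be to the joint angles that put it there. The sketch below assumes a drastically simplified arm, with just a shoulder and an elbow moving in a flat plane; real humanoid arms have many more joints and use more elaborate solvers. The function names and arm lengths are illustrative.

```python
import math


def two_link_ik(x, y, upper_arm, forearm):
    """Return (shoulder, elbow) angles in radians that place the hand at (x, y)."""
    dist_sq = x * x + y * y
    cos_elbow = (dist_sq - upper_arm**2 - forearm**2) / (2 * upper_arm * forearm)
    if abs(cos_elbow) > 1.0 + 1e-9:
        raise ValueError("target is out of reach")
    cos_elbow = max(-1.0, min(1.0, cos_elbow))  # guard against rounding error
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        forearm * math.sin(elbow), upper_arm + forearm * math.cos(elbow)
    )
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, upper_arm, forearm):
    """Hand position produced by the given joint angles (used here as a check)."""
    x = upper_arm * math.cos(shoulder) + forearm * math.cos(shoulder + elbow)
    y = upper_arm * math.sin(shoulder) + forearm * math.sin(shoulder + elbow)
    return x, y


# Hypothetical arm: 30 cm upper arm, 30 cm forearm, cup at (0.4 m, 0.2 m).
shoulder, elbow = two_link_ik(0.4, 0.2, 0.3, 0.3)
print(f"shoulder {math.degrees(shoulder):.1f} deg, elbow {math.degrees(elbow):.1f} deg")
print("hand ends up at", forward_kinematics(shoulder, elbow, 0.3, 0.3))
```

Running the angles back through the forward calculation is a cheap sanity check that the hand really lands on the target.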

Motion Control: The Muscle Behind the Movement

Once the robot’s sensors have done their job, it’s time for the actuators to take over. Actuators are the motors that control the robot’s limbs, and they need to be incredibly precise to avoid overshooting or undershooting the target. This is where motion control algorithms come into play. These algorithms translate the sensor data into specific commands for the actuators, ensuring that the robot’s hand moves smoothly and accurately.
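Overshooting is easy to see in a toy simulation. The sketch below uses a PD (proportional-derivative) controller, a common building block in motion control, though not necessarily what any given robot runs. It assumes an idealized joint with unit inertia and no friction, and the gain values are arbitrary illustrative choices.

```python
def simulate_joint(kp, kd, target=1.0, dt=0.001, steps=3000):
    """Drive an idealized unit-inertia joint toward `target`; return every position visited."""
    position, velocity = 0.0, 0.0
    trajectory = []
    for _ in range(steps):
        error = target - position
        torque = kp * error - kd * velocity  # PD control law
        velocity += torque * dt              # unit inertia: acceleration equals torque
        position += velocity * dt            # semi-implicit Euler integration step
        trajectory.append(position)
    return trajectory


def overshoot(trajectory, target=1.0):
    """How far past the target the joint travelled (0.0 if it never passed it)."""
    return max(0.0, max(trajectory) - target)


spring_only = simulate_joint(kp=40.0, kd=0.0)   # no damping term
damped = simulate_joint(kp=40.0, kd=16.0)       # damping term added

print(f"overshoot without damping: {overshoot(spring_only):.3f}")
print(f"overshoot with damping:    {overshoot(damped):.3f}")
print(f"final position with damping: {damped[-1]:.3f}")
```

Without the damping term the joint behaves like a spring and swings well past the target; with it, the hand eases in and stops.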

But here’s the tricky part: the robot’s sensors and actuators don’t always work at the same speed. Sensors can capture data in milliseconds, while actuators may take longer to respond, so by the time the hand arrives, the information it acted on can already be out of date. This delay can cause the robot to miss its target or make jerky movements. To solve this, engineers use predictive algorithms that anticipate the actuator’s response time and adjust the robot’s movements accordingly. It’s like steering toward where a moving car will be in a few seconds, rather than where it is right now.
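The simplest version of this kind of prediction is plain extrapolation: estimate how fast the target is moving from the last two observations, then aim at where it will be once the arm has had time to respond. The sketch below assumes the target moves at constant velocity and that the delay is known in advance; both are simplifications, and the names and numbers are illustrative.

```python
def predict_position(prev_pos, prev_time, curr_pos, curr_time, latency):
    """Extrapolate where the target will be `latency` seconds after `curr_time`."""
    dt = curr_time - prev_time
    if dt <= 0:
        raise ValueError("observations must be in increasing time order")
    velocity = tuple((c - p) / dt for p, c in zip(prev_pos, curr_pos))
    return tuple(c + v * latency for c, v in zip(curr_pos, velocity))


# Hypothetical ball rolling along x, seen in two camera frames 50 ms apart.
aim_point = predict_position(
    prev_pos=(0.100, 0.300), prev_time=0.00,
    curr_pos=(0.125, 0.300), curr_time=0.05,
    latency=0.20,  # assumed time for the arm to react and arrive
)
print("aim at:", aim_point)
```

Real systems typically use filters that also account for sensor noise and changing speed, but the core idea is the same: aim at the future, not the present.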

AI: The Brain Behind the Operation

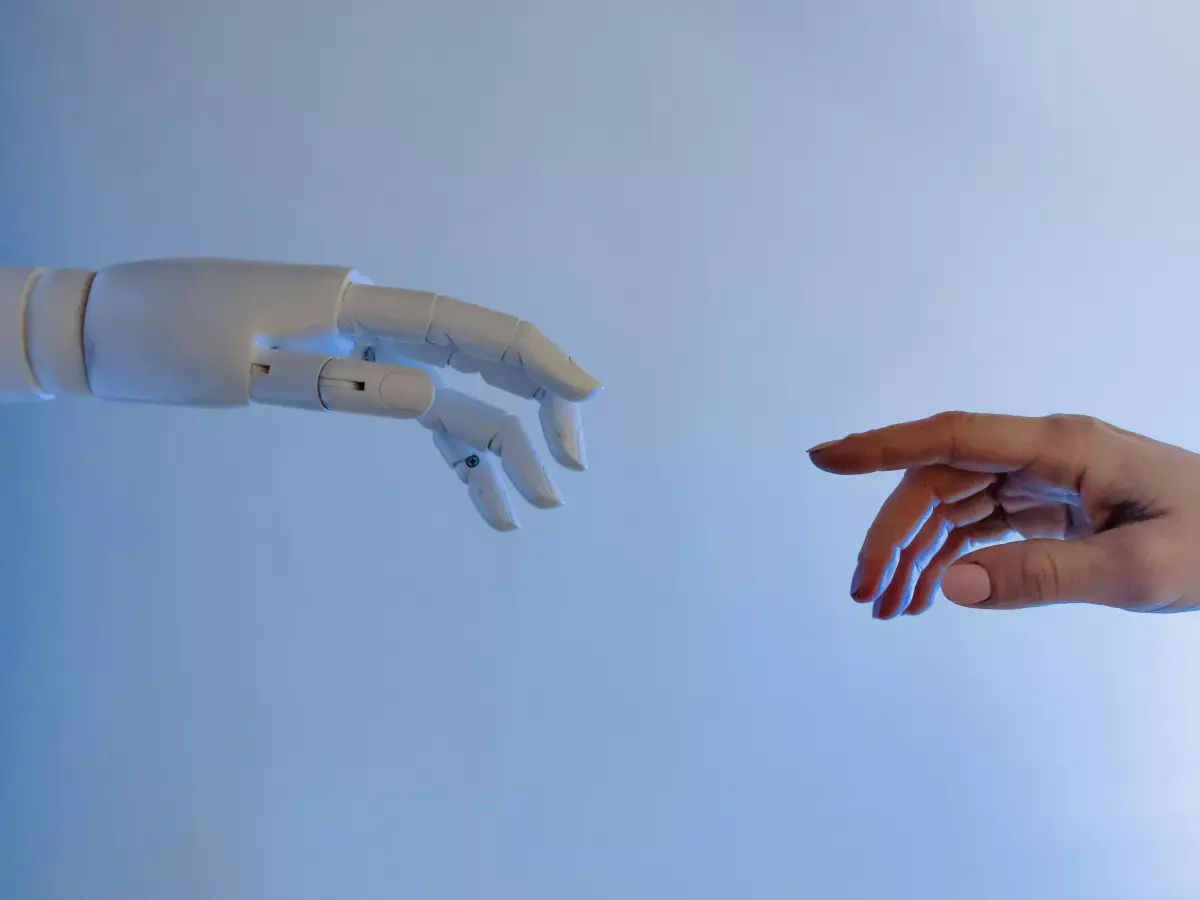

Of course, none of this would be possible without AI. While sensors and actuators provide the hardware, AI is the software that ties everything together. Machine learning algorithms allow humanoid robots to improve their hand-eye coordination over time, learning from their mistakes and refining their movements. Think of it as the robot’s version of muscle memory.

For instance, if a robot repeatedly fails to grab an object, its AI can analyze the errors and adjust the parameters of its motion control algorithms for future attempts. Over time, the robot becomes more accurate and efficient, much like how humans improve their coordination through practice.
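Here is the practice-makes-perfect loop boiled down to its bare bones. This is far simpler than the machine learning a real robot would use: it assumes the hand always misses by the same fixed amount (say, from a slightly miscalibrated camera) and nudges a single correction value after each attempt. The function, the bias, and the learning rate are all hypothetical.

```python
def practice_reaching(target, hidden_bias, attempts=10, learning_rate=0.5):
    """Simulate repeated reaches; return the size of the miss on each attempt."""
    correction = 0.0
    misses = []
    for _ in range(attempts):
        commanded = target + correction
        landed = commanded + hidden_bias      # simulated hardware: always off by the bias
        miss = landed - target
        misses.append(abs(miss))
        correction -= learning_rate * miss    # nudge the next attempt against the error
    return misses


# Hypothetical reach: target 50 cm away, hand initially lands 4 cm too far.
for attempt, miss in enumerate(practice_reaching(0.50, 0.04), start=1):
    print(f"attempt {attempt:2d}: missed by {miss * 100:.2f} cm")
```

With a learning rate of 0.5, each attempt cuts the remaining error in half, so the miss shrinks quickly without the robot ever being told what the bias was.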

The Future of Hand-Eye Coordination

So, what’s next for humanoid robots and their quest for perfect hand-eye coordination? As AI continues to evolve, we can expect robots to become even more skilled at tasks that require fine motor skills. From assembling delicate electronics to performing surgery, the possibilities are endless. But for now, let’s just be glad they can pick up a cup of coffee without spilling it everywhere.

In the end, the journey toward perfect hand-eye coordination in humanoid robots is far from over. But with advances in sensor technology, motion control algorithms, and AI, they’re getting closer every day. And who knows? Maybe one day, they’ll be able to beat us at our own game—literally.