Fluid Motion

Imagine a humanoid robot moving like a professional dancer, gliding effortlessly across the floor. It’s not just about the mechanics; it’s about the harmony between sensors and algorithms, like a perfectly choreographed ballet.

By Hannah White

When we think of humanoid robots, we often picture stiff, jerky movements, like a marionette with tangled strings. For years, that was the reality. Early robots struggled to replicate the fluidity of human motion, often looking more like malfunctioning machines than the graceful beings of sci-fi movies. But robotics has since taken a surprising turn.

Modern humanoid robots are capable of movements smooth enough that, from a distance, you might mistake them for humans. How did we get here? The answer lies in the intricate dance between sensor integration and motion control algorithms. These two elements work together like the senses and brain of a human body, constantly communicating to create seamless motion.

Why Fluid Motion Matters

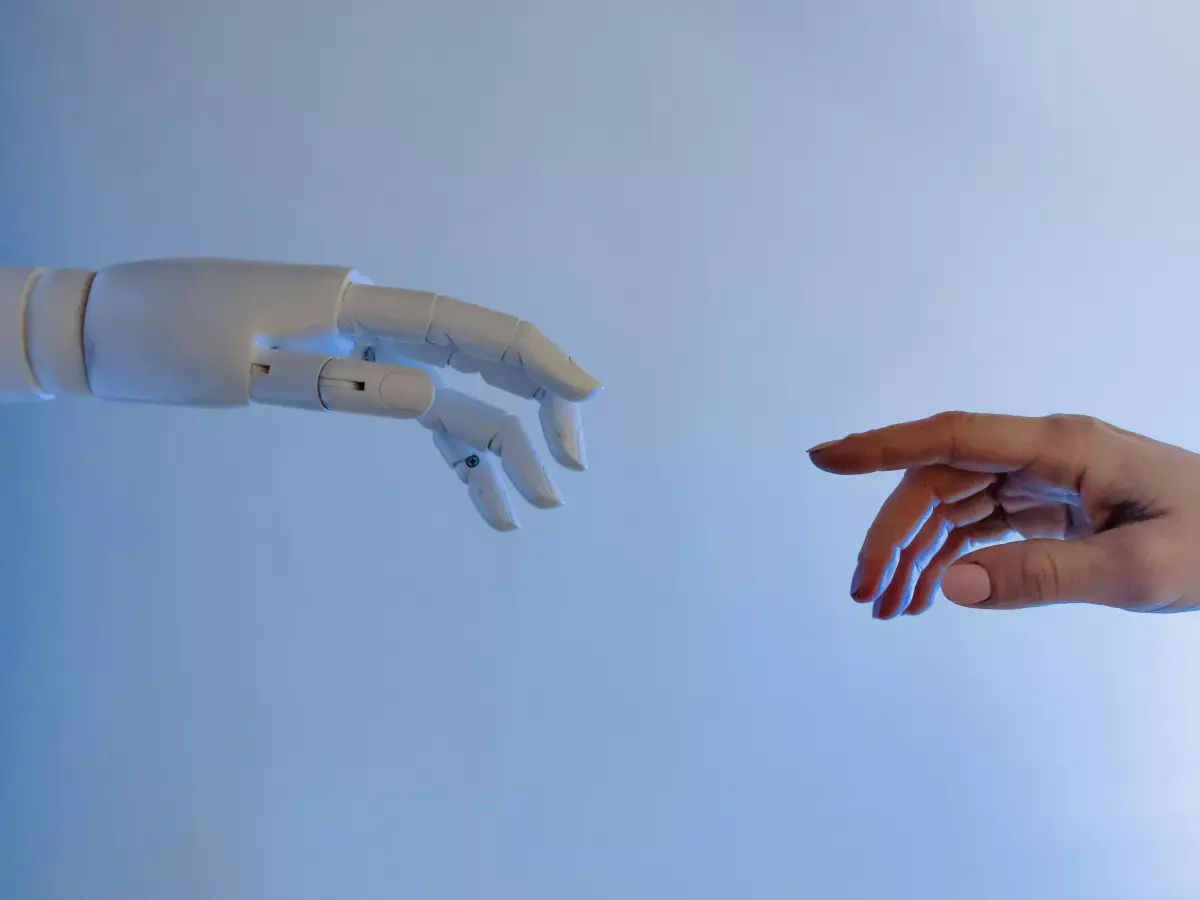

Let’s be real: nobody wants a robot that lurches and jolts through every task. Whether it’s a robot assistant in your home or a humanoid in a factory, fluid motion is key to both functionality and safety. Imagine a robot trying to hand you a cup of coffee but jerking its arm so hard that it spills everywhere. Not ideal, right?

Fluid motion isn’t just about aesthetics; it’s about precision and control. In environments where robots interact with humans, smooth movements reduce the risk of accidents and make interactions feel more natural. But how do robots achieve this level of control?

The Role of Sensors

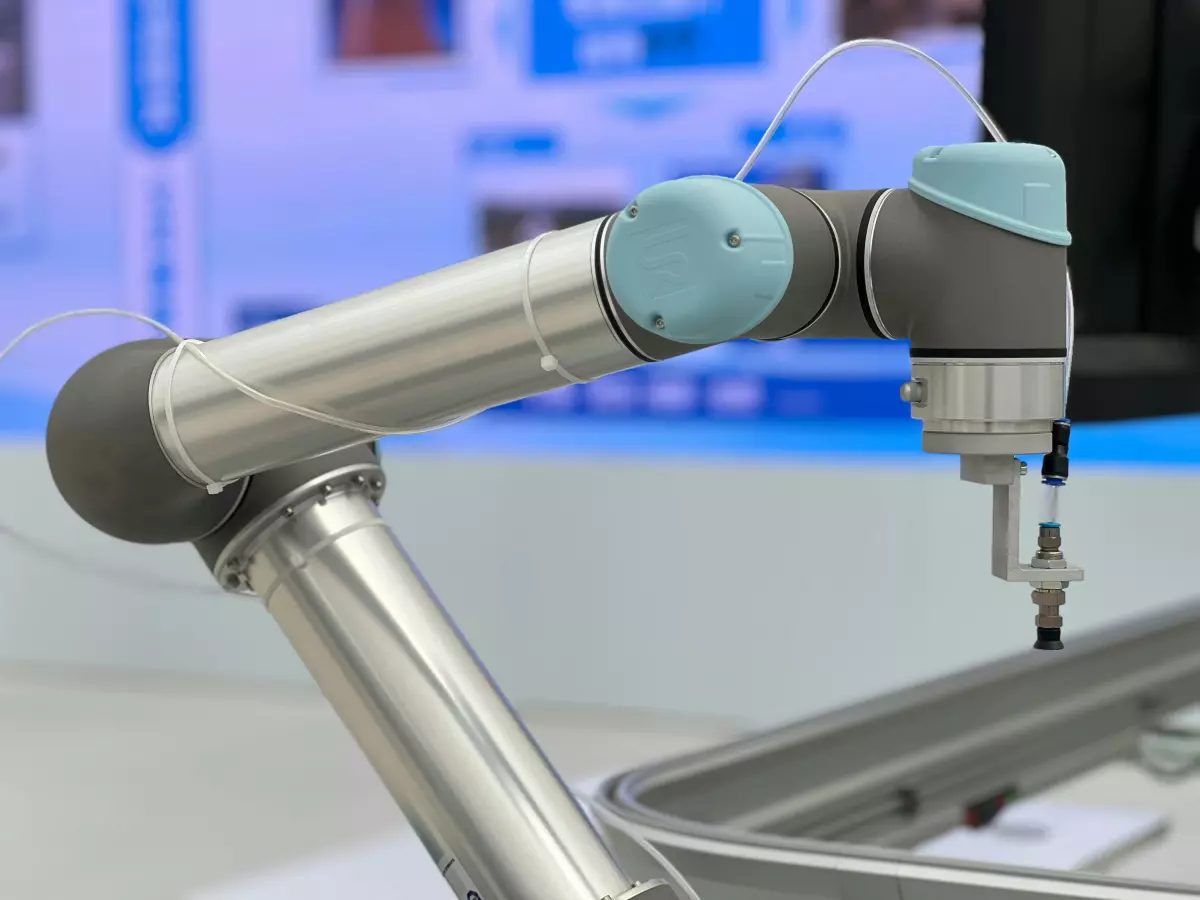

Think of sensors as the robot’s eyes, ears, and skin. They provide real-time data about the robot’s surroundings, much like how our senses help us navigate the world. For humanoid robots, sensors are crucial for everything from balance to object manipulation.

In modern designs, sensors are integrated into nearly every part of the robot’s body. Accelerometers measure linear acceleration, gyroscopes measure how fast the body is rotating, and force sensors measure the loads acting on its limbs. Together they let the robot keep track of its orientation, its motion, and the forces it is experiencing. This data is fed into the robot’s control system, allowing it to adjust its movements in real time. It’s like a self-driving car constantly scanning the road and making micro-adjustments to stay in its lane.

Motion Control Algorithms: The Brain Behind the Moves

While sensors provide the data, motion control algorithms are the brains that make sense of it all. These algorithms process the sensor data and translate it into precise movements. It’s like the difference between knowing where your hand is and actually being able to move it to pick up a glass of water.

One of the key challenges in humanoid robot motion control is dealing with the complexity of human-like movements. Walking, for example, isn’t just about putting one foot in front of the other. It involves balancing, shifting weight, and coordinating multiple joints—all while reacting to changes in the environment. Motion control algorithms need to account for all these factors to create smooth, lifelike movement.

Advanced algorithms use techniques like inverse kinematics and predictive modeling to calculate how each joint should move. Inverse kinematics works backward from a goal, such as a target position for the hand, to the joint angles that put the limb there. Predictive modeling, in turn, allows the robot to anticipate changes in its environment and adjust its movements accordingly.
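To make inverse kinematics a little more concrete, here is a minimal sketch for the simplest textbook case: a flat, two-segment arm with a shoulder and an elbow. Real humanoids have many more joints and usually rely on numerical solvers, so treat this as an illustration only. The function names and the segment lengths are assumptions made up for the example.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Joint angles (shoulder, elbow) in radians that place the hand at (x, y).

    Hypothetical planar arm: l1 is the upper-arm length, l2 the forearm length.
    Returns one of the two possible solutions (elbow angle in [0, pi]).
    """
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the shoulder-to-target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_elbow) > 1.0:
        raise ValueError("target is out of reach for this arm")
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to the target minus the offset caused by the bent elbow.
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1, l2):
    """Hand position for given joint angles; used here to check the IK result."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y
```

Calling `two_link_ik(0.3, 0.2, 0.25, 0.25)` and passing the result to `forward_kinematics` should land the hand back on the requested point, which is a handy sanity check for any inverse kinematics routine.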

Sensor Fusion: The Secret Sauce

So, how do sensors and algorithms work together to create fluid motion? The answer lies in sensor fusion. This technique combines data from multiple sensors to create a more accurate picture of the robot’s own state and its environment than any single sensor could provide. It’s like how your brain combines information from your eyes and ears to help you understand what’s happening around you.

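For example, a humanoid robot might use data from its accelerometers to detect that it’s tilting to one side. At the same time, its gyroscopes report how fast it is rotating. Each sensor has a weakness: accelerometer readings are noisy from moment to moment, while an angle obtained by adding up gyroscope readings drifts over time. By fusing the two, the robot gets a tilt estimate that is both steady and responsive, and it can make real-time adjustments to its posture to keep from falling over.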
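One simple and widely used way to blend an accelerometer and a gyroscope is a complementary filter. The sketch below is an illustration under stated assumptions, not a description of how any particular robot does it: the function names, the single tilt axis, and the blend factor `alpha=0.98` are all chosen for the example.

```python
import math


def accel_tilt(ax, az):
    """Tilt angle in radians implied by gravity as seen by the accelerometer.

    ax and az are the sideways and vertical acceleration readings (assumed axes).
    """
    return math.atan2(ax, az)


def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """One update step of the tilt estimate.

    angle:       previous tilt estimate (rad)
    gyro_rate:   rotation rate from the gyroscope (rad/s)
    accel_angle: tilt implied by the accelerometer (rad)
    dt:          time since the last update (s)
    alpha:       how much to trust the gyro path (assumed value, tuned in practice)
    """
    gyro_angle = angle + gyro_rate * dt  # responsive, but drifts over time
    # Mostly follow the gyro, and let the accelerometer slowly pull out the drift.
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


# Example: the robot is actually leaning 0.1 rad and holding still.
estimate = 0.0
for _ in range(500):
    reading = accel_tilt(math.sin(0.1), math.cos(0.1))
    estimate = complementary_filter(estimate, 0.0, reading, dt=0.01)
print(round(estimate, 3))
```

The gyroscope path keeps the estimate responsive to quick movements, while the small accelerometer share gradually corrects the drift. In practice `alpha` is tuned to the sensors and the update rate.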

Sensor fusion also helps robots deal with uncertainty. In the real world, sensor data is often noisy or incomplete. By combining data from multiple sources, the robot can average away much of the noise and make more accurate decisions about how to move.
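A small sketch shows why combining sources helps. Suppose two sensors measure the same quantity, each with a known amount of noise. Weighting each reading by the inverse of its variance gives a combined estimate that is less noisy than either reading alone. The function name and the numbers below are assumptions for illustration; production systems typically use more elaborate estimators such as Kalman filters.

```python
def fuse(estimates):
    """Inverse-variance weighted average of independent measurements.

    estimates: list of (value, variance) pairs for the same quantity.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    # The fused variance is smaller than the smallest input variance.
    return value, 1.0 / total


# Two hypothetical distance readings: a precise one and a noisier one.
value, variance = fuse([(10.0, 1.0), (20.0, 4.0)])
print(value, variance)
```

The fused value sits closer to the more trustworthy reading, and the fused variance is lower than that of the best individual sensor, which is the sense in which fusion reduces noise.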

The Future of Fluid Motion

As sensor technology and motion control algorithms continue to evolve, humanoid robots are only going to get better at moving like us. We’re already seeing humanoids that can dance and run, and other robotic systems that assist surgeons with delicate procedures. But the ultimate goal is to create robots that move so naturally, you forget they’re robots at all.

So, the next time you see a humanoid robot gliding across the floor, remember: it’s not just a machine. It’s a carefully orchestrated symphony of sensors and algorithms, working together to create the illusion of life.