Face the Future

Imagine a humanoid robot smiling at you. Not a creepy, stiff grin, but a warm, genuine smile that almost makes you forget it's a machine. Sounds like sci-fi, right? Well, it's closer to reality than you think.

By Dylan Cooper

Picture this: You're at a tech expo, and a humanoid robot greets you with a smile. You wave, and it raises an eyebrow, its face reflecting curiosity. You crack a joke, and it chuckles, its eyes crinkling in amusement. For a moment, you wonder, "Is this thing... human?" That’s the magic of facial expression design in humanoid robots. But how do these machines manage to pull off such lifelike expressions? Spoiler: It’s not magic—it’s a blend of clever design, sensor integration, and some seriously advanced motion control algorithms.

Humanoid robots are no longer just about walking and talking. The next frontier? Making them feel—or at least look like they do. And that starts with the face. After all, our faces are the ultimate communication tool, expressing everything from joy to confusion with just a twitch of a muscle. But for robots, achieving that same level of expressiveness is no easy task.

The Anatomy of a Robotic Face

Let’s break it down. A human face has around 43 muscles that work together to create thousands of expressions. For robots, mimicking this complexity requires a combination of actuators (which are like robotic muscles), flexible materials, and sensors that can detect subtle changes in the environment or user interactions.

Designers often use soft, flexible materials like silicone to give the robot’s face a more human-like appearance. But it’s not just about looking the part. These materials need to be able to stretch and move in ways that mimic human skin. Underneath, actuators are placed strategically to simulate the movement of facial muscles. These actuators are controlled by algorithms that determine which 'muscles' to activate based on the robot's intended expression.

For example, if the robot is programmed to smile, the actuators around the mouth will pull the corners of the lips upward, while others around the eyes will create the subtle wrinkles that make a smile look genuine. It’s all about the details.
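To make that concrete, here is a minimal sketch of how an expression might be stored as a set of actuator targets. Everything in it is an assumption for illustration: the actuator names, the activation values, and the 0-to-1 scale are hypothetical and not taken from any real robot.

```python
from typing import Dict

# Hypothetical actuator names; a real face would have its own layout.
ACTUATORS = [
    "lip_corner_left", "lip_corner_right",
    "cheek_raiser_left", "cheek_raiser_right",
    "brow_left", "brow_right",
]

# Illustrative activation levels: 0.0 = relaxed, 1.0 = fully engaged.
EXPRESSIONS: Dict[str, Dict[str, float]] = {
    "neutral": {},
    "smile": {
        "lip_corner_left": 0.8, "lip_corner_right": 0.8,      # pull lip corners up
        "cheek_raiser_left": 0.5, "cheek_raiser_right": 0.5,  # crinkle around the eyes
    },
    "raise_eyebrow": {"brow_right": 0.7},
}


def actuator_targets(expression: str, intensity: float = 1.0) -> Dict[str, float]:
    """Return a target level for every actuator, scaled by intensity."""
    if expression not in EXPRESSIONS:
        raise ValueError(f"unknown expression: {expression}")
    intensity = max(0.0, min(1.0, intensity))  # clamp to the valid range
    pose = EXPRESSIONS[expression]
    # Actuators not listed in the pose stay relaxed at 0.0.
    return {name: round(pose.get(name, 0.0) * intensity, 3) for name in ACTUATORS}
```

Calling `actuator_targets("smile", intensity=0.5)` would give a half-strength smile: the lip corners and cheeks engage at half their full values while the brows stay relaxed.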

Sensors: The Eyes and Ears of Expression

But how do these robots know when to smile, frown, or raise an eyebrow? That’s where sensors come in. Just like humans rely on their senses to react to the world, robots use sensors to gather information about their environment and the people they interact with.

Cameras paired with facial expression recognition software, for instance, can help a robot detect whether you're smiling or frowning. Microphones can pick up on the tone of your voice, giving the robot clues about how you're feeling. Some advanced robots even use thermal sensors to detect changes in skin temperature, which can hint at stress or excitement.

Once the robot has gathered this data, it uses algorithms to decide how to respond. If it detects that you're smiling, it might smile back. If it senses confusion, it could raise an eyebrow or tilt its head slightly, mimicking the way humans react when they're trying to understand something.
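The simplest version of that decision step is a lookup table. The sketch below assumes a perception system that outputs a label such as "smiling" or "confused" along with a confidence score; the labels, the response names, and the 0.6 threshold are all hypothetical choices made for this example.

```python
# Hypothetical mapping from what the robot perceives to how it responds.
RESPONSES = {
    "smiling": "smile",           # mirror a smile
    "confused": "raise_eyebrow",  # signal that it is trying to understand
}


def choose_response(detected: str, confidence: float, threshold: float = 0.6) -> str:
    """Pick an expression for a detected user state.

    Falls back to a neutral face when the detection is too uncertain
    or the state has no rule, so the robot does not react to noise.
    """
    if confidence < threshold:
        return "neutral"
    return RESPONSES.get(detected, "neutral")
```

Real systems are likely to be far more elaborate, but the fallback to a neutral face captures a sensible design choice: an ill-timed smile can be worse than no reaction at all.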

Motion Control Algorithms: The Brain Behind the Face

Of course, none of this would be possible without the right algorithms. These are the brains behind the operation, telling the robot how to move its 'muscles' to create the desired expression. But here’s the tricky part: Human expressions are rarely static. We’re constantly moving our faces, even when we’re not talking. Think about how your eyebrows might twitch when you’re thinking or how your lips purse when you’re concentrating. For robots to appear truly lifelike, they need to replicate these subtle, unconscious movements.

This is where motion control algorithms come into play. These algorithms are designed to create smooth, natural movements by controlling the timing and intensity of the actuators. They also take into account the robot’s overall body language, ensuring that facial expressions match up with gestures and posture. After all, a smile without eye contact or a raised eyebrow without a head tilt would look pretty weird, right?
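One common way to get that kind of smoothness is to ease an actuator into and out of a movement instead of snapping it to the target. The sketch below uses the well-known smoothstep curve as a stand-in; it is one illustrative technique, not a description of how any particular robot does it, and the function names are made up for this example.

```python
from typing import List


def ease_in_out(t: float) -> float:
    """Smoothstep curve: starts slow, speeds up, then slows down again."""
    t = max(0.0, min(1.0, t))  # clamp progress to [0, 1]
    return t * t * (3.0 - 2.0 * t)


def trajectory(start: float, end: float, steps: int) -> List[float]:
    """Return steps + 1 actuator positions easing from start to end."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [start + (end - start) * ease_in_out(i / steps) for i in range(steps + 1)]
```

Feeding the positions from `trajectory(0.0, 0.8, 10)` to a lip-corner actuator one tick at a time would make the smile build gradually, with the smallest changes at the very start and end of the movement. Stretching or shrinking `steps` controls the timing, and the end value controls the intensity.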

Some of the most advanced humanoid robots use AI-driven algorithms that allow them to learn from their interactions. Over time, they can improve their ability to read human emotions and respond with more appropriate and nuanced expressions. It’s like giving the robot a crash course in emotional intelligence.

The Future of Robotic Expressions

So, what’s next for humanoid robots and their facial expressions? Well, the goal is to make these robots even more lifelike. Researchers are working on developing more advanced sensors that can detect even the smallest changes in human emotion, as well as more flexible materials that can mimic the full range of human facial movements.

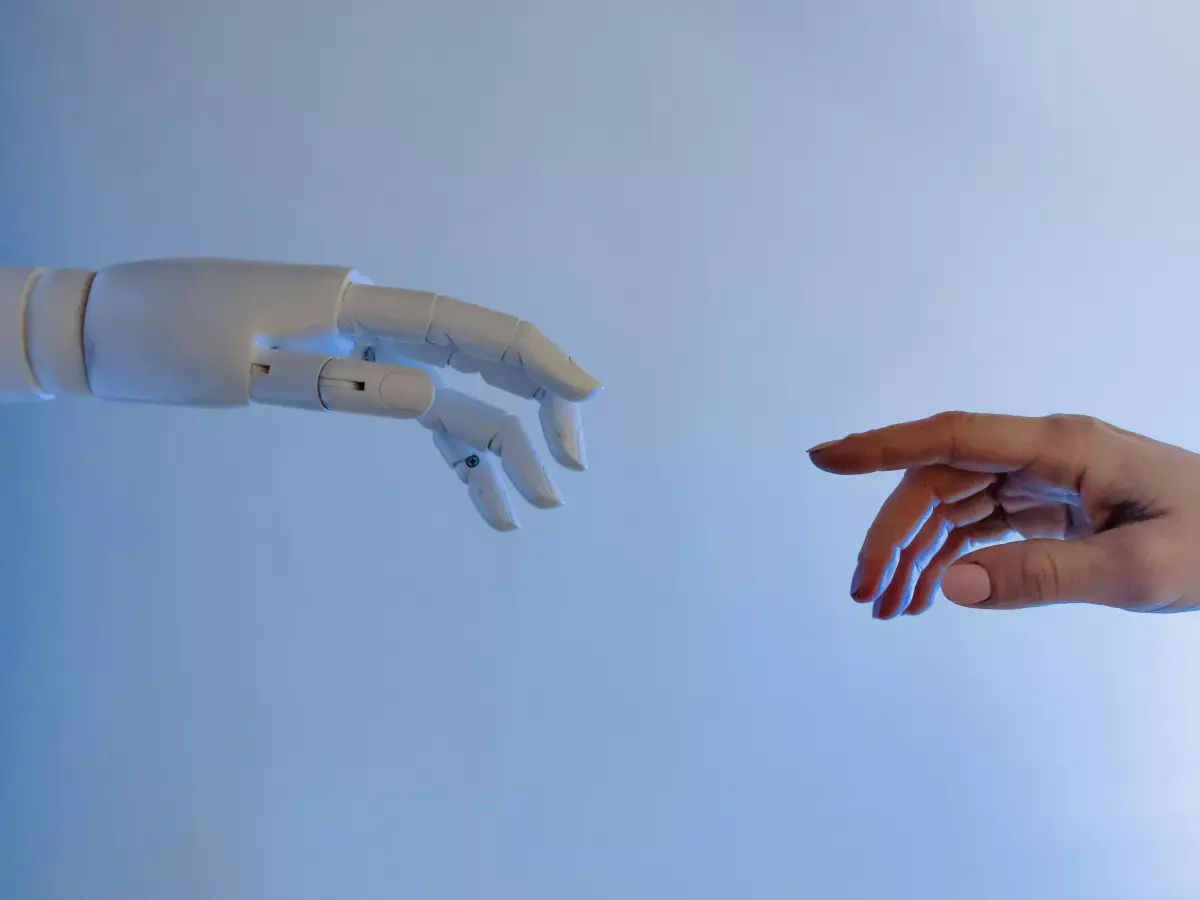

There’s also a growing interest in making robots more emotionally intelligent. This means not only recognizing human emotions but also responding in ways that are socially appropriate. For example, a robot that can detect when someone is feeling sad and offer a comforting smile—or even a few kind words—could have huge implications for industries like healthcare, customer service, and education.

But let's not get too far ahead of ourselves. While we're not quite at the point where robots can fully replicate human emotions, we're getting closer every day. And who knows? The next time you meet a humanoid robot, you might just find yourself smiling back.