Innovations in Motion

Did you know that the average humanoid robot has over 30 sensors working together to mimic human movement? And that's just the beginning of the story.

By Jason Patel

Humanoid robots are no longer the stuff of sci-fi. They're here, and they're getting better at moving, sensing, and interacting with the world around them. But here's the kicker: it's not just about giving them more sensors. It's about where those sensors go and how they work together. The placement of sensors in humanoid robots is a game of precision, and recent innovations are pushing the boundaries of what's possible.

So, what's the secret sauce? How are engineers and roboticists making these machines move more like us? Let's dive into the seven key innovations in humanoid robot sensor placement that are changing the game.

1. Distributed Sensor Networks

One of the most exciting developments in humanoid robots is the use of distributed sensor networks. Instead of relying on a few centralized sensors, robots now use a network of smaller sensors spread across their bodies. This allows for more accurate data collection and faster response times. Think of it like your nervous system—sensors in your skin, muscles, and joints all work together to give you a sense of touch and movement. Robots are now doing the same, and it's making them more responsive and lifelike.

2. Tactile Sensors in Unexpected Places

When you think of tactile sensors, you probably imagine them in the hands or feet of a robot. But recent innovations have seen tactile sensors placed in unexpected areas, like the robot's torso or even its face. Why? Because these areas can provide crucial feedback about the robot's environment, helping it navigate tight spaces or interact with objects more delicately. It's like giving the robot a sixth sense, allowing it to 'feel' its surroundings in ways we never thought possible.

3. Multi-Sensor Fusion

Multi-sensor fusion is a fancy way of saying that robots are getting better at combining data from different types of sensors. For example, a robot might use visual sensors (cameras) to detect an object and tactile sensors to determine its texture. By fusing this data, the robot can make more informed decisions about how to interact with the object. This is especially important for tasks that require precision, like picking up fragile items or performing delicate surgeries.
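To make the idea concrete, here is a minimal sketch of one of the simplest forms of fusion: two sensors estimating the same quantity, combined by inverse-variance weighting. The scenario (a camera and a tactile gripper both estimating an object's width) and all of the numbers are illustrative assumptions, not measurements from any particular robot.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs using inverse-variance weighting.

    Sensors with lower variance (less noise) get more say in the result.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings: object width in centimetres and the variance of each sensor.
camera_estimate = (5.2, 0.4)    # vision: sees the whole object, but noisier
tactile_estimate = (5.0, 0.1)   # touch: more precise once the gripper closes

fused_width, fused_variance = fuse_estimates([camera_estimate, tactile_estimate])
print(f"fused width: {fused_width:.2f} cm, variance: {fused_variance:.2f}")
```

The fused estimate lands closer to the more trustworthy sensor, and its variance is lower than either sensor's alone, which is the basic payoff of fusion. Real systems typically fuse different kinds of data with more elaborate methods, such as Kalman filters.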

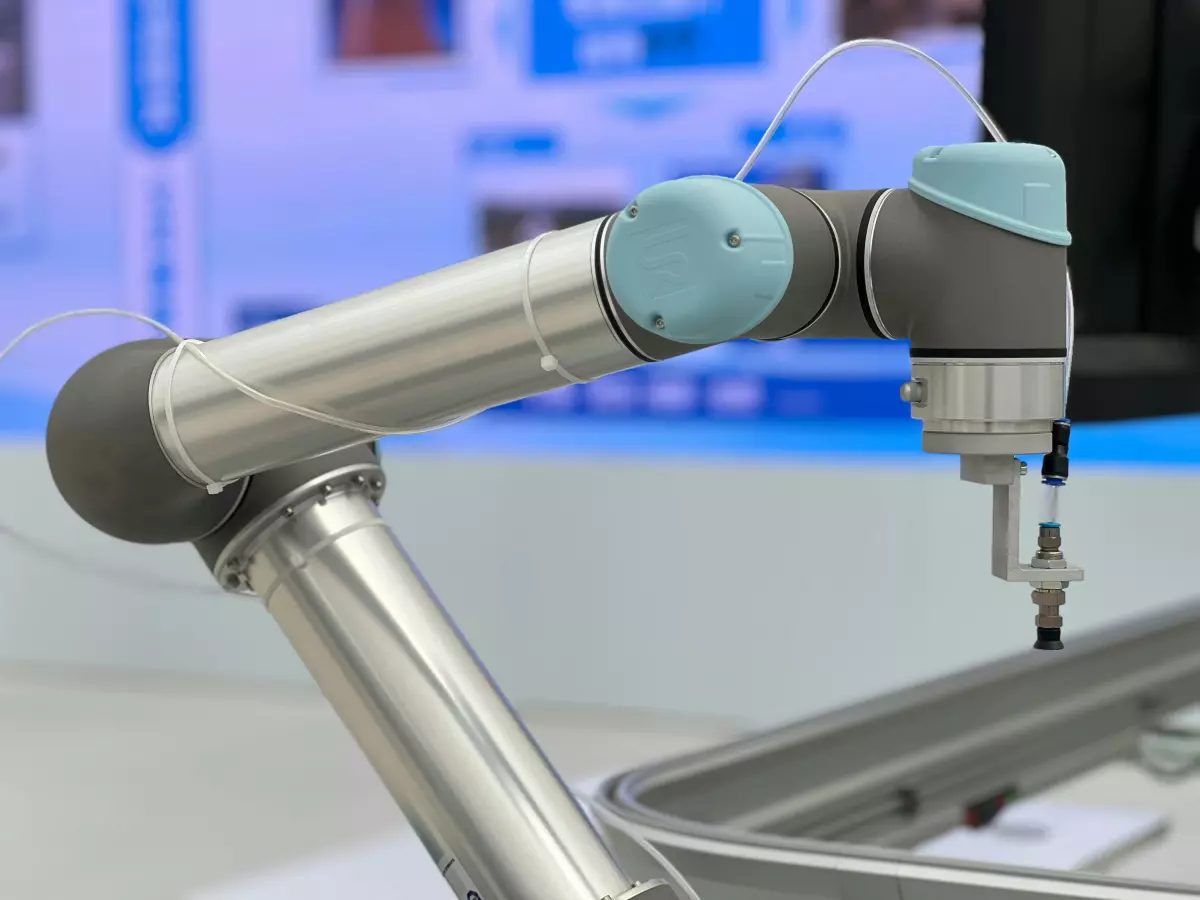

4. Advanced Inertial Measurement Units (IMUs)

IMUs are sensors that measure a robot's linear acceleration and angular velocity, from which its orientation can be estimated. In the past, these sensors were bulky and not very accurate. But recent advancements have made IMUs smaller, more precise, and more energy-efficient. This means that humanoid robots can now move more fluidly and maintain better balance, even in challenging environments. It's like upgrading the robot's inner ear, allowing it to walk, run, and even jump with greater ease.
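One common way to turn raw IMU readings into a usable tilt angle is a complementary filter, which blends the gyroscope (smooth but drifting) with the accelerometer (noisy but drift-free). The sketch below is a simplified, single-axis version; the axis convention, the blend factor `alpha`, and the sample values are assumptions chosen for illustration.

```python
import math


def complementary_filter(pitch_prev, gyro_rate, accel_x, accel_z, dt, alpha=0.98):
    """Return an updated pitch estimate in radians.

    pitch_prev: previous pitch estimate (rad)
    gyro_rate:  angular velocity about the pitch axis (rad/s)
    accel_x, accel_z: accelerometer readings (m/s^2); assumed axis convention
    dt:         time since the last update (s)
    alpha:      trust placed in the gyro path (assumed value, tuned in practice)
    """
    pitch_from_gyro = pitch_prev + gyro_rate * dt     # integrate angular velocity
    pitch_from_accel = math.atan2(accel_x, accel_z)   # tilt from the gravity direction
    return alpha * pitch_from_gyro + (1.0 - alpha) * pitch_from_accel


# Hypothetical case: robot standing still, leaning 0.1 rad, filter starting from zero.
true_pitch = 0.1
pitch = 0.0
for _ in range(500):
    pitch = complementary_filter(
        pitch,
        gyro_rate=0.0,
        accel_x=9.81 * math.sin(true_pitch),
        accel_z=9.81 * math.cos(true_pitch),
        dt=0.01,
    )
print(f"estimated pitch: {pitch:.4f} rad")
```

In this stationary case the estimate settles on the true lean angle. Production balance controllers usually go further, with three-axis estimators or Kalman-style filters, but the blending idea is the same.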

5. Pressure-Sensitive Feet

Walking is one of the most complex tasks for a humanoid robot, and it all starts with the feet. Recent innovations in pressure-sensitive foot sensors are helping robots walk more naturally. These sensors can detect subtle changes in pressure as the robot's foot makes contact with the ground, allowing it to adjust its gait in real time. This is crucial for navigating uneven terrain or walking up stairs, tasks that were once nearly impossible for humanoid robots.
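A typical quantity computed from foot pressure sensors is the center of pressure: the force-weighted average of where the foot is pushing on the ground. Here is a minimal sketch, assuming a hypothetical foot with four force sensors at its corners; the sensor layout and force values are made up for illustration.

```python
def center_of_pressure(sensors):
    """Compute the center of pressure from (x, y, force) readings.

    x and y are sensor positions on the sole in metres; force is in newtons.
    Returns None when the foot is not touching the ground.
    """
    total_force = sum(force for _, _, force in sensors)
    if total_force <= 0:
        return None
    cop_x = sum(x * force for x, _, force in sensors) / total_force
    cop_y = sum(y * force for _, y, force in sensors) / total_force
    return cop_x, cop_y


# Hypothetical readings: more load on the two toe sensors than on the heel sensors.
foot_sensors = [
    (0.10, 0.04, 30.0),    # front left
    (0.10, -0.04, 30.0),   # front right
    (-0.10, 0.04, 10.0),   # rear left
    (-0.10, -0.04, 10.0),  # rear right
]

cop = center_of_pressure(foot_sensors)
print(f"center of pressure: x={cop[0]:.3f} m, y={cop[1]:.3f} m")
```

Here the center of pressure sits 5 cm ahead of the middle of the foot, which tells the controller the robot's weight is shifting toward the toes. If that point drifts too close to the edge of the sole, the robot is at risk of tipping and the gait needs correcting.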

6. Vision-Based Sensors for Motion Prediction

Another game-changer is the use of vision-based sensors to predict motion. By analyzing visual data from cameras, humanoid robots can anticipate the movement of objects or people in their environment. This allows them to react more quickly and avoid collisions. It's like giving the robot a pair of eyes that not only see but also 'think' about what might happen next. This is especially useful in crowded environments, where quick reflexes are essential.
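The simplest version of this kind of prediction assumes that whatever the camera is tracking keeps moving at its current velocity. The sketch below extrapolates a tracked position forward in time; the function name, the coordinates, and the timing are hypothetical, and real systems generally rely on richer models such as Kalman filters or learned predictors.

```python
def predict_position(previous, current, dt, horizon):
    """Extrapolate a tracked 2D position assuming constant velocity.

    previous, current: (x, y) positions in metres from two consecutive frames
    dt:      time between those frames (s)
    horizon: how far ahead to predict (s)
    """
    velocity_x = (current[0] - previous[0]) / dt
    velocity_y = (current[1] - previous[1]) / dt
    return (current[0] + velocity_x * horizon,
            current[1] + velocity_y * horizon)


# Hypothetical track: a person 2 m ahead, walking toward the robot at about 1 m/s.
previous_position = (2.0, 1.0)
current_position = (1.9, 1.0)

predicted = predict_position(previous_position, current_position, dt=0.1, horizon=0.5)
print(f"predicted position in 0.5 s: x={predicted[0]:.2f} m, y={predicted[1]:.2f} m")
```

With these numbers the person is expected to be roughly 1.4 m away half a second from now, which gives the robot time to slow down or step aside before the gap closes.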

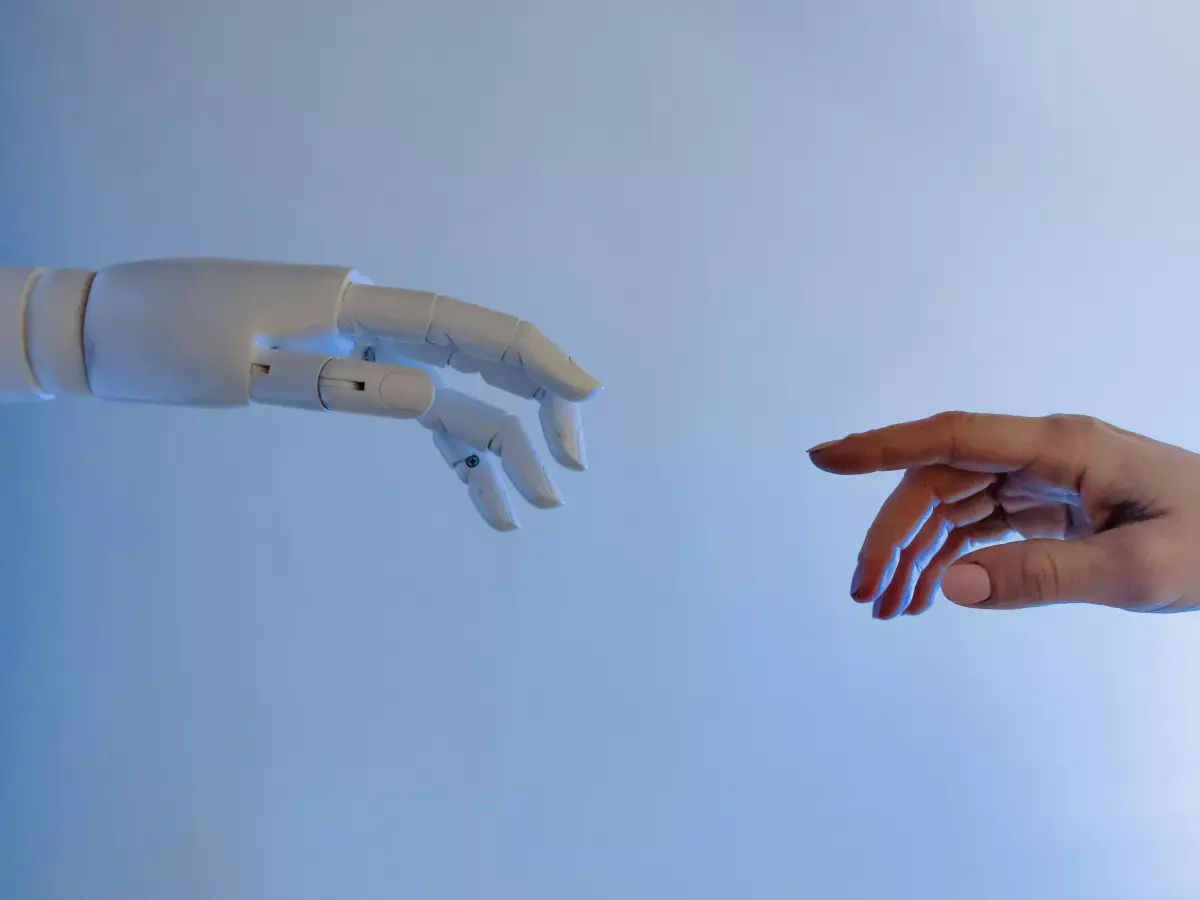

7. Bio-Inspired Sensor Placement

Finally, one of the most exciting trends in humanoid robot design is the use of bio-inspired sensor placement. Engineers are studying how sensors are distributed in the human body and applying those principles to robots. For example, placing sensors in the robot's joints, similar to how our proprioceptors work, allows for more accurate movement control. This bio-inspired approach is helping robots move more like humans, with smoother, more natural motions.
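To see why joint sensors matter, consider a simplified two-segment leg moving in a plane. If the robot knows its hip and knee angles from joint sensors, it can work out where its foot is without ever looking at it, much as we can touch our nose with our eyes closed. This sketch uses standard forward kinematics; the segment lengths and angles are assumed values for illustration.

```python
import math


def foot_position(hip_angle, knee_angle, thigh_length, shin_length):
    """Planar forward kinematics for a two-segment limb.

    Angles are in radians; knee_angle is measured relative to the thigh.
    Returns the (x, y) position of the foot relative to the hip, in metres.
    """
    knee_x = thigh_length * math.cos(hip_angle)
    knee_y = thigh_length * math.sin(hip_angle)
    foot_x = knee_x + shin_length * math.cos(hip_angle + knee_angle)
    foot_y = knee_y + shin_length * math.sin(hip_angle + knee_angle)
    return foot_x, foot_y


# Hypothetical joint sensor readings for a leg with 0.4 m segments.
straight_leg = foot_position(0.0, 0.0, thigh_length=0.4, shin_length=0.4)
bent_leg = foot_position(0.0, math.pi / 2, thigh_length=0.4, shin_length=0.4)
print(f"straight leg: {straight_leg[0]:.2f}, {straight_leg[1]:.2f}")
print(f"bent knee:    {bent_leg[0]:.2f}, {bent_leg[1]:.2f}")
```

The more accurate the joint readings, the more accurate the computed foot position, which is why sensing at the joints translates directly into smoother, better-controlled motion.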

So, what's the takeaway here? Humanoid robots are getting smarter, faster, and more lifelike, thanks to innovations in sensor placement. But it's not just about slapping more sensors onto a robot—it's about understanding where they should go and how they should work together. As we continue to refine these designs, the line between human and machine will only get blurrier.

In a way, we're seeing history repeat itself. Just as early inventors struggled to understand how to make machines move, today's engineers are grappling with the complexities of humanoid robot design. But with each new innovation, we're getting closer to creating robots that can move, sense, and interact with the world as naturally as we do.