Breaking Reality

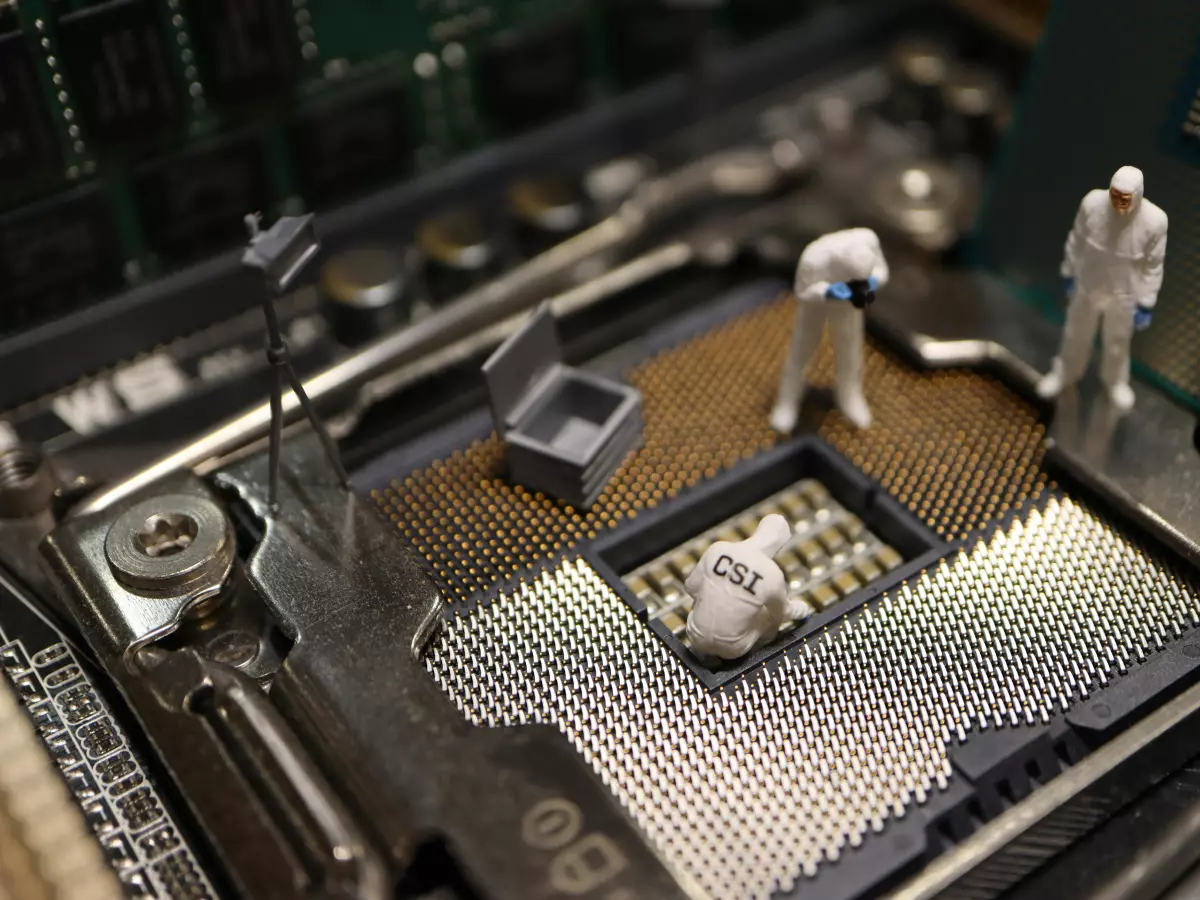

Imagine a world where your boss calls you on Zoom, but it’s not really your boss. Or you receive an email from a colleague, but it wasn’t sent by them. Welcome to the age of cyber clones, where seeing isn’t believing, and reality is up for grabs.

By Isabella Ferraro

It sounds like something straight out of a sci-fi movie, right? But this isn’t the future: it’s happening now. Thanks to advances in AI and deepfake technology, we’re entering a world where digital doppelgängers can mimic anyone, from your best friend to the CEO of a Fortune 500 company. These cyber clones are so convincing that they can fool even the most vigilant among us.

So, how did we get here? Largely because AI has become remarkably good at learning and replicating human behavior. We’re not just talking about chatbots that can hold a conversation. We’re talking about AI systems that can analyze video, audio, and text to create near-perfect replicas of people. According to ComputerWeekly, these cyber clones are becoming so sophisticated that they can even mimic subtle facial expressions and voice inflections.

But here’s the kicker: these clones are being weaponized. Cybercriminals are using them to launch highly targeted attacks, tricking people into handing over sensitive information or transferring large sums of money. Imagine receiving a video call from your CEO asking you to wire funds for an urgent project. You’d probably do it, right? Well, that’s exactly what some hackers are counting on.

And it’s not just video calls. AI-augmented email analysis is also detecting a rise in fraudulent emails that look eerily real. Multimodal AI systems, which can analyze text, images, and even voice data, are helping enterprise defenders weed out these scams. The problem is that these systems are still playing catch-up: every time the defenses improve, the cybercriminals adapt.

The Trust Crisis

We’re heading into a trust crisis. If you can’t believe what you see or hear, how do you know what’s real? This is the question that businesses and individuals alike are grappling with. The rise of cyber clones is forcing us to rethink how we verify information. It’s no longer enough to assume that a familiar name in your inbox or a familiar face on a video call is who it appears to be. We need new ways to authenticate identities, and fast.

Some companies are already experimenting with solutions like blockchain-based identity verification and biometric authentication. But these technologies are still in their infancy. In the meantime, we’re left in a precarious position where anyone could be anyone, or no one at all.

So, what can you do to protect yourself? First, be skeptical. If something feels off, trust your gut. Second, double-check everything. If you get a strange request from a colleague or boss, pick up the phone and call them directly, using a number you already have rather than one supplied in the message. And finally, stay informed. The more you know about the latest cyber threats, the better equipped you’ll be to spot them.

What’s Next?

The rise of cyber clones is just the beginning. As AI continues to evolve, we can expect these digital doppelgängers to become even more convincing, and more dangerous. But it’s not all doom and gloom. With the right tools and awareness, we can stay one step ahead of the bad guys. The key is to remain vigilant and never take anything at face value.

In the near future, we might see stricter regulations around the use of AI and deepfake technology. Governments and tech companies are already starting to take notice, and there’s a growing push for more transparency and accountability in the development of these tools. But until then, it’s up to us to navigate this new reality with caution.

So, the next time you get a video call from your boss, you might want to think twice before acting on what you’re asked to do. After all, in the age of cyber clones, nothing is quite as it seems.