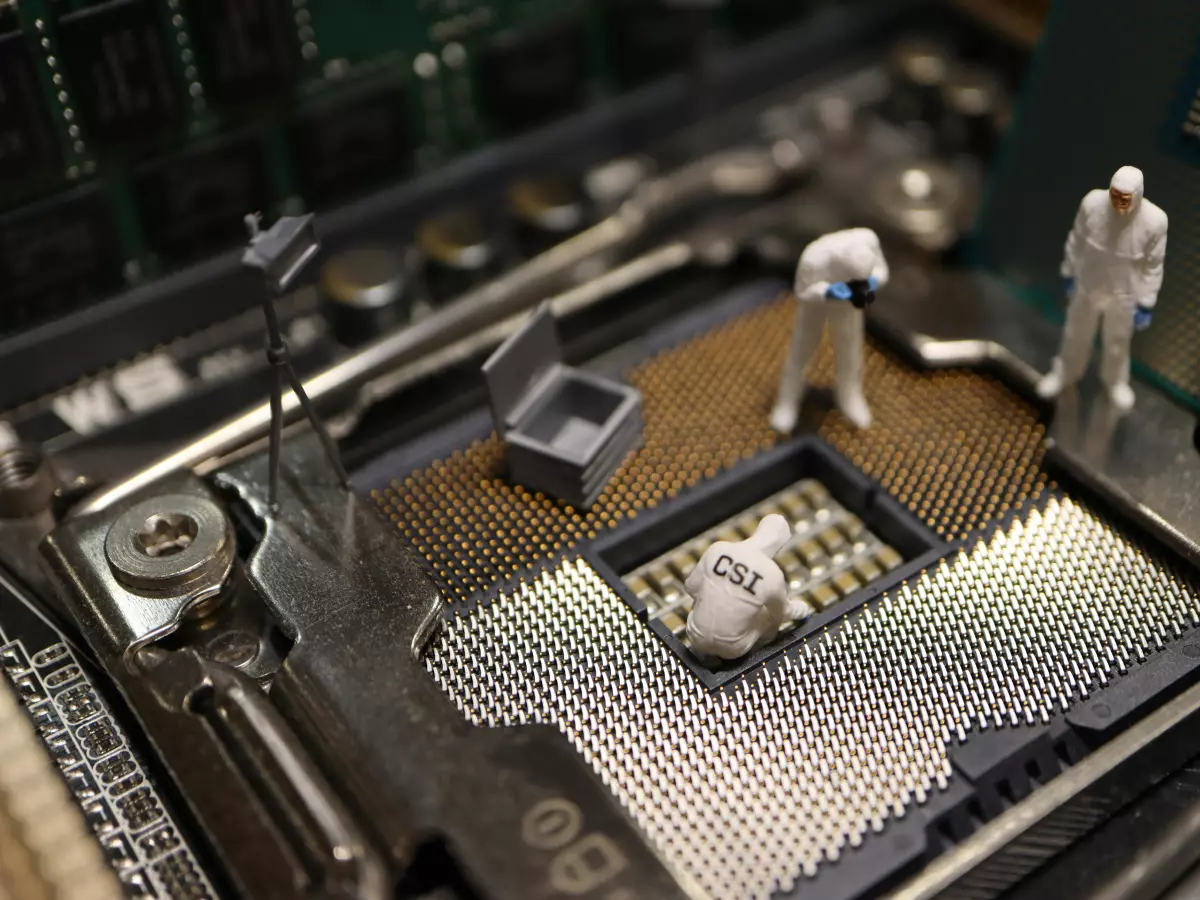

AI's Double-Edged Sword

Nearly 50% of security professionals are skeptical about AI, with many pointing to leaked training data as a major concern, according to a recent HackerOne report.

By Sophia Rossi

Artificial intelligence (AI) is the talk of the town, right? It’s revolutionizing industries, helping us solve problems faster, and even making our lives more convenient. But here’s the kicker: not everyone is thrilled about it. In fact, a recent survey from HackerOne found that nearly half of security professionals believe AI could be a serious security risk. Yeah, you read that right. The very people who are supposed to keep us safe in the digital world are waving red flags about AI.

So, what’s the deal? Why are these experts so concerned? Well, it turns out that one of the biggest fears is the potential for leaked training data. Imagine this: AI systems are trained on massive amounts of data, and if that data gets into the wrong hands, it could be used to exploit vulnerabilities or even create malicious AI models. Yikes!

Leaked Data: The Achilles' Heel of AI?

Let’s break it down. AI models, especially the big, powerful ones, need tons of data to learn and improve. This data often includes sensitive information, such as personal details, financial records, or even proprietary business data. Now, if this training data gets leaked or hacked, it’s like handing over the keys to the kingdom. Cybercriminals could mine it for personal details to launch targeted attacks, or study it to reverse-engineer how the AI models work. It’s a nightmare scenario for security pros.

And it’s not just theoretical. There have already been instances where AI training data has been compromised. In some cases, this has led to AI models behaving unpredictably or even being weaponized. Yeah, it’s as bad as it sounds.

AI: The Good, The Bad, and The Ugly

But let’s not throw AI under the bus just yet. Despite the concerns, AI also offers incredible benefits in the realm of cybersecurity. For one, AI can help identify and respond to threats far faster than a human analyst working alone. It can analyze patterns, detect anomalies, and even flag likely attacks before they land. That’s some next-level stuff!

The catch is that AI is a double-edged sword. While it can be used to protect systems, it can also be used by the bad guys to launch more sophisticated attacks. It’s like giving both the hero and the villain the same superpower. Who wins? Well, that’s the million-dollar question.

What’s Next? The Future of AI in Security

So, where do we go from here? The truth is, AI isn’t going anywhere. It’s only going to become more integrated into our lives and our security systems. But as we continue to develop and deploy AI technologies, we need to be aware of the risks. Security professionals are calling for more transparency in how AI models are trained and more robust measures to protect training data.

At the end of the day, AI is neither inherently good nor bad. It’s a tool, and like any tool, it can be used for both positive and negative purposes. The key is ensuring that we stay one step ahead of the threats and continue to innovate in ways that prioritize security.

Want to dive deeper into the concerns surrounding AI and security? Check out the full HackerOne report.