AI Safety vs Innovation

Can AI safety and innovation coexist, or are they on a collision course?

By Elena Petrova

OpenAI is making headlines again, and this time it's not just about its latest AI models. The company has recently announced two major developments: the formation of an independent Safety Board and the expansion of its o1 AI models to enterprise and education users. Both moves are significant, and together they raise an important question: can AI safety and innovation move forward hand in hand, or are they destined to clash?

On one side, we have the newly formed Safety Board, which has the power to pause AI model rollouts if safety concerns arise. On the other, we have the o1 AI models, which are being hailed for their advanced reasoning capabilities and potential to revolutionize industries. So, which one will have a bigger impact on the future of AI? Let's dive in.

The Safety Board: A Necessary Brake?

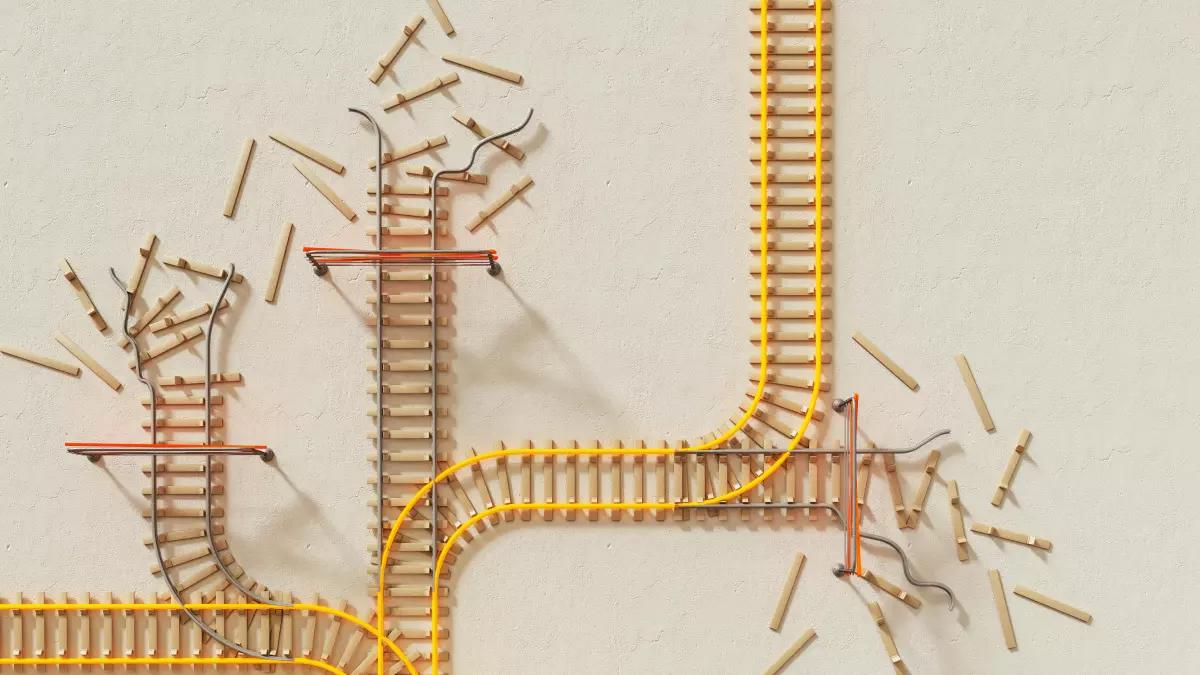

OpenAI's decision to create an independent Safety Board is a bold move. According to PhoneArena, this board will have the authority to halt the release of AI models if they are judged to pose safety risks. Think of it as the emergency brake on a speeding train: necessary, but will it also slow down innovation?

The idea behind the Safety Board is to ensure that AI development doesn't outpace our ability to control it. With AI models becoming increasingly complex and capable, the potential for unintended consequences is growing. The board's role is to assess these risks and make sure that any new AI models are safe for public use. But here's the catch: while this sounds like a great idea in theory, it could also lead to delays in innovation. After all, if every new model has to pass through a safety check, how quickly can we expect progress?

o1 AI Models: The Future of AI?

On the flip side, we have OpenAI's o1 AI models, which are now rolling out to enterprise and education users. According to Gadgets360, these models are designed to mimic human-like reasoning and handle multi-step tasks. In other words, they're not just spitting out answers. They're thinking, or at least getting closer to it.

The o1 models are being touted as a game-changer for sectors that rely on complex decision-making, such as education and business. Imagine AI systems that help students work through multi-step math problems or help companies make strategic decisions based on nuanced data. The potential is enormous, but so are the risks. As these models become more deeply integrated into critical sectors, the need for oversight becomes even more pressing.

Safety vs Innovation: Can We Have Both?

So, where does this leave us? On one hand, the Safety Board is a crucial step toward ensuring that AI development doesn't spiral out of control. On the other, the o1 AI models represent the cutting edge of what AI can achieve. The challenge will be finding a balance between these two forces.

It's clear that OpenAI is trying to walk a fine line between innovation and responsibility. The Safety Board is there to make sure that new models don't pose a threat, but it could also slow down the pace of innovation. Meanwhile, the o1 models are pushing the boundaries of what AI can do, but they also raise new safety concerns.

In the end, the question isn't whether we need safety or innovation. It's how we can have both. And that's a question that OpenAI, along with the rest of the tech world, will have to answer in the coming years.

What do you think? Is the Safety Board a necessary precaution, or will it stifle innovation? And are the o1 AI models the future of AI, or do they pose too many risks? Let me know in the comments below!