AI Speed Revolution

"Wait, how fast did that just process?" That's the question buzzing around the room as Tenstorrent's Wormhole chip flexes its AI muscles.

By Jason Patel

Imagine this: You're sitting in front of a sleek workstation, the hum of the machine barely noticeable. You input a complex query into a large language model (LLM), expecting to wait a few seconds for the response. But before you can even blink, the answer is staring back at you, as if the machine anticipated your question. That's the kind of speed Tenstorrent's Wormhole chip is promising, and it's got the AI world buzzing.

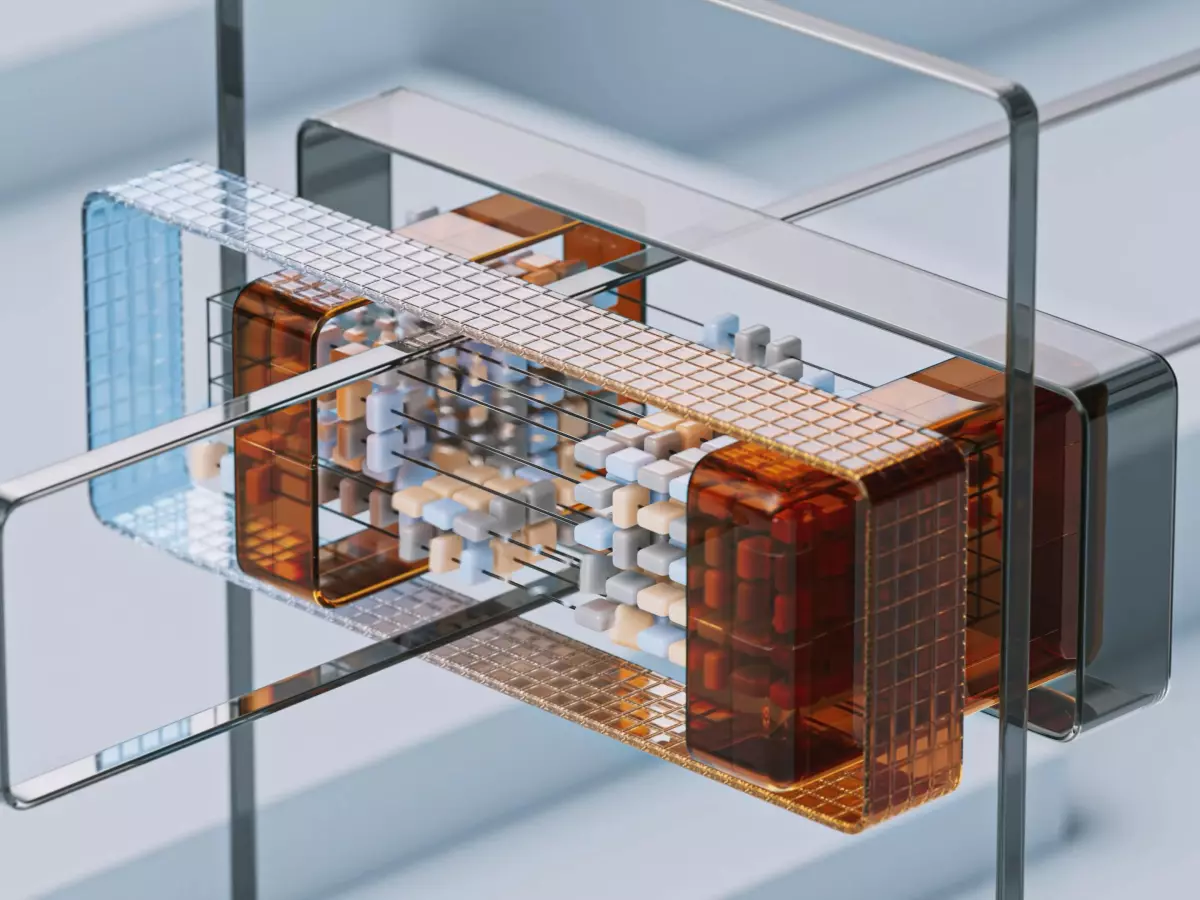

Tenstorrent, an AI chip startup, recently showcased its Wormhole chip, and it's already turning heads. This isn't just any AI chip; Tenstorrent is pitching it at LLM inference at breakneck speeds. If you're into AI, you know that LLMs (think of the models behind ChatGPT, such as GPT-4, and their cousins) are the backbone of modern AI applications, from chatbots to content generation. But here's the kicker: these models are notoriously slow when it comes to inference, especially on standard hardware. Enter Tenstorrent, with its shiny new Wormhole chip, ready to change the game.

Why Speed Matters in AI

Alright, so why should you care about LLM speed? Well, think of it this way: AI models are getting bigger, smarter, and more complex. But that complexity comes at a price: slower processing times. And in a world where we expect instant results (thanks, Google), waiting for an AI model to think isn't just annoying; it's a bottleneck for innovation.

LLM inference is the process of running a trained model to generate a response, one token (roughly a word or word fragment) at a time. The faster this happens, the more seamless the AI experience becomes. Whether you're a developer working on AI-driven applications or a business relying on AI for customer service, speed is everything. And that's where Tenstorrent's Wormhole chip comes in. It's designed to handle these massive models efficiently, making AI interactions faster and more responsive.
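Why does per-token speed matter so much? Because the model writes its answer one token at a time, and each new token needs its own pass through the model, a single user's wait grows with the length of the reply divided by the decode rate. The sketch below illustrates that idea with a simple greedy decoder. It is a minimal sketch under stated assumptions: `generate`, `toy_model`, and the token rates are hypothetical placeholders, not Tenstorrent's software or measured Wormhole figures.

```python
from typing import Callable, List


def generate(next_token: Callable[[List[int]], int],
             prompt: List[int],
             max_new_tokens: int,
             eos_id: int) -> List[int]:
    """Greedy autoregressive decoding: one model call per new token.

    `next_token` is a hypothetical stand-in for a full LLM forward pass.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # each step sees everything generated so far
        tokens.append(tok)
        if tok == eos_id:         # stop once the model emits end-of-sequence
            break
    return tokens[len(prompt):]   # return only the newly generated tokens


def response_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Wall-clock time to produce a reply at a steady decode rate."""
    return num_tokens / tokens_per_second


def toy_model(context: List[int]) -> int:
    """Hypothetical toy 'model': predicts the previous token plus one, mod 10."""
    return (context[-1] + 1) % 10


if __name__ == "__main__":
    print(generate(toy_model, [3], max_new_tokens=20, eos_id=9))  # [4, 5, 6, 7, 8, 9]
    # Illustrative rates only: a 500-token reply at 10 vs. 50 tokens/second.
    print(response_time_seconds(500, 10.0))  # 50.0
    print(response_time_seconds(500, 50.0))  # 10.0
```

The loop is inherently sequential: token N+1 can't be computed until token N exists, which is why faster hardware for each individual step, rather than just more throughput across many users, is what a single user actually feels.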

What Makes Wormhole Special?

So, what's the secret sauce behind Wormhole's speed? According to EE Times, the chip is optimized for single-user LLM inference, meaning it's tuned to answer one person's requests as quickly as possible on an individual workstation rather than to serve many users at once in a massive data center. This focus on single-user performance is a game-changer for AI developers and enthusiasts who want to run powerful models locally without relying on cloud infrastructure.

The Wormhole chip is built with AI in mind from the ground up. It's not just about raw processing power; it's about efficiency. The chip is designed to handle the unique demands of LLMs, which require massive amounts of data to be processed in parallel. General-purpose CPUs struggle with this, and Tenstorrent is betting that an architecture purpose-built for these workloads can outpace GPUs as well.

Think of it like this: if traditional chips are like highways, the Wormhole chip is a high-speed bullet train. It gets you from point A to point B faster, with fewer stops along the way. And in the world of AI, that kind of speed can make all the difference.

Implications for AI Workstations

Now, let's talk about what this means for AI workstations. If you're a developer or researcher working with LLMs, you're probably used to relying on cloud-based solutions for heavy lifting. But with the Wormhole chip, you could bring much of that power to your local machine. Imagine running a large open-weight model on your personal workstation without the lag or the need for a massive server farm. That's the future Tenstorrent is aiming for.

This shift could democratize AI development, making it more accessible to smaller teams and individual developers. You may no longer need access to expensive cloud infrastructure to work with cutting-edge AI models. With the Wormhole chip, the power of AI could be right at your fingertips.

Challenges Ahead

Of course, no new technology comes without its challenges. While the Wormhole chip is impressive, it's still in its early stages. The AI chip market is fiercely competitive, with giants like NVIDIA and AMD dominating the space. Tenstorrent will need to prove that its chip can not only match but exceed the performance of these industry leaders.

There's also the question of scalability. While the Wormhole chip is optimized for single-user workstations, what happens when you need to scale up? Can the chip handle the demands of larger, more complex AI models? These are questions that Tenstorrent will need to answer as it continues to develop its technology.

The Future of AI Processing

Despite these challenges, the future looks bright for Tenstorrent. The Wormhole chip represents a significant step forward in AI processing, and it's exciting to think about where this technology could go. As AI models continue to evolve, the need for faster, more efficient hardware will only grow. And with companies like Tenstorrent pushing the envelope, we might just see a new era of AI workstations that can handle even the most complex models with ease.

So, the next time you're sitting in front of your AI-powered workstation, waiting for a response, just remember: the future of AI speed is already here, and it's only going to get faster.

Time to buckle up.